📝 11 Sep 2024

Why do we need Continuous Integration?

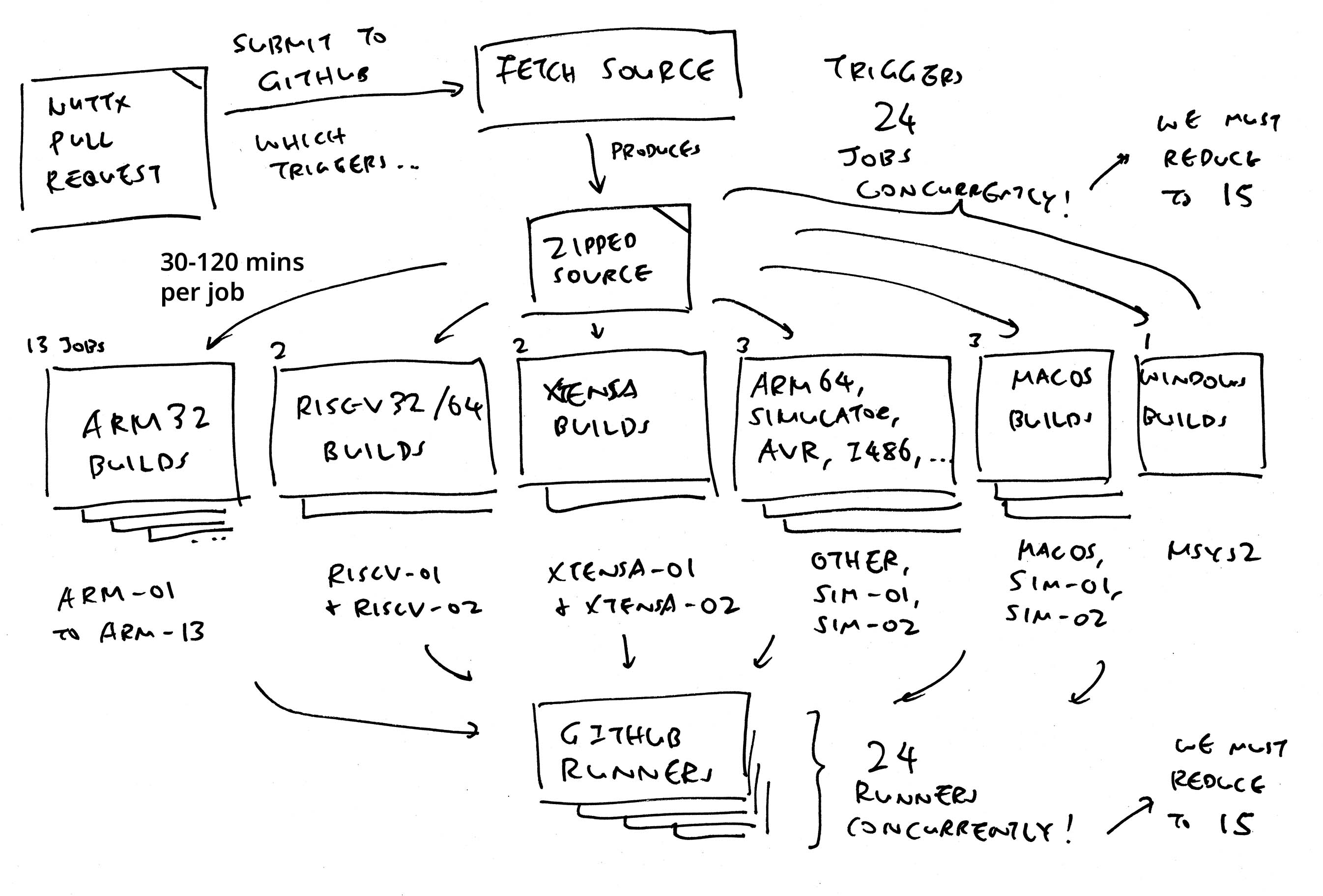

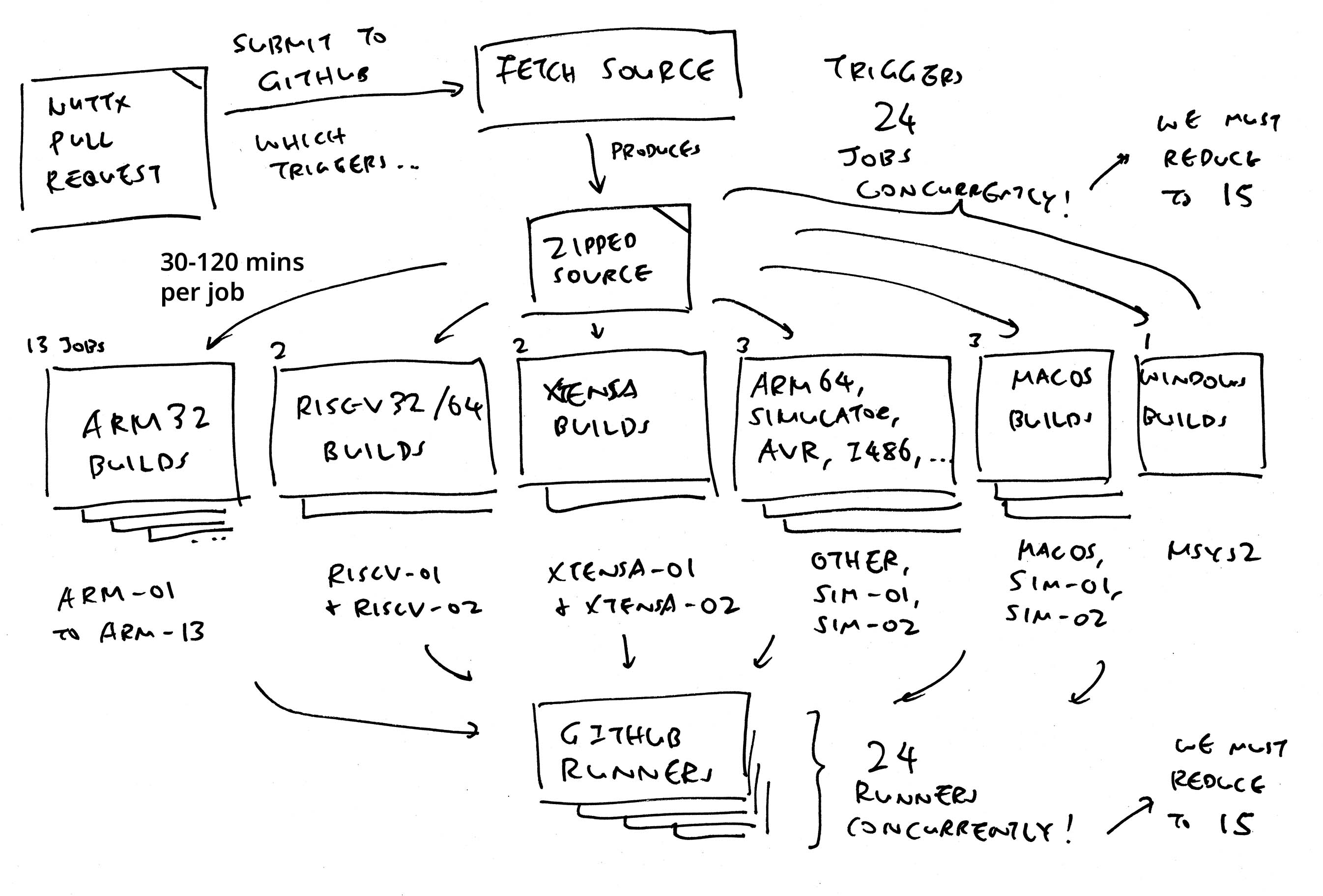

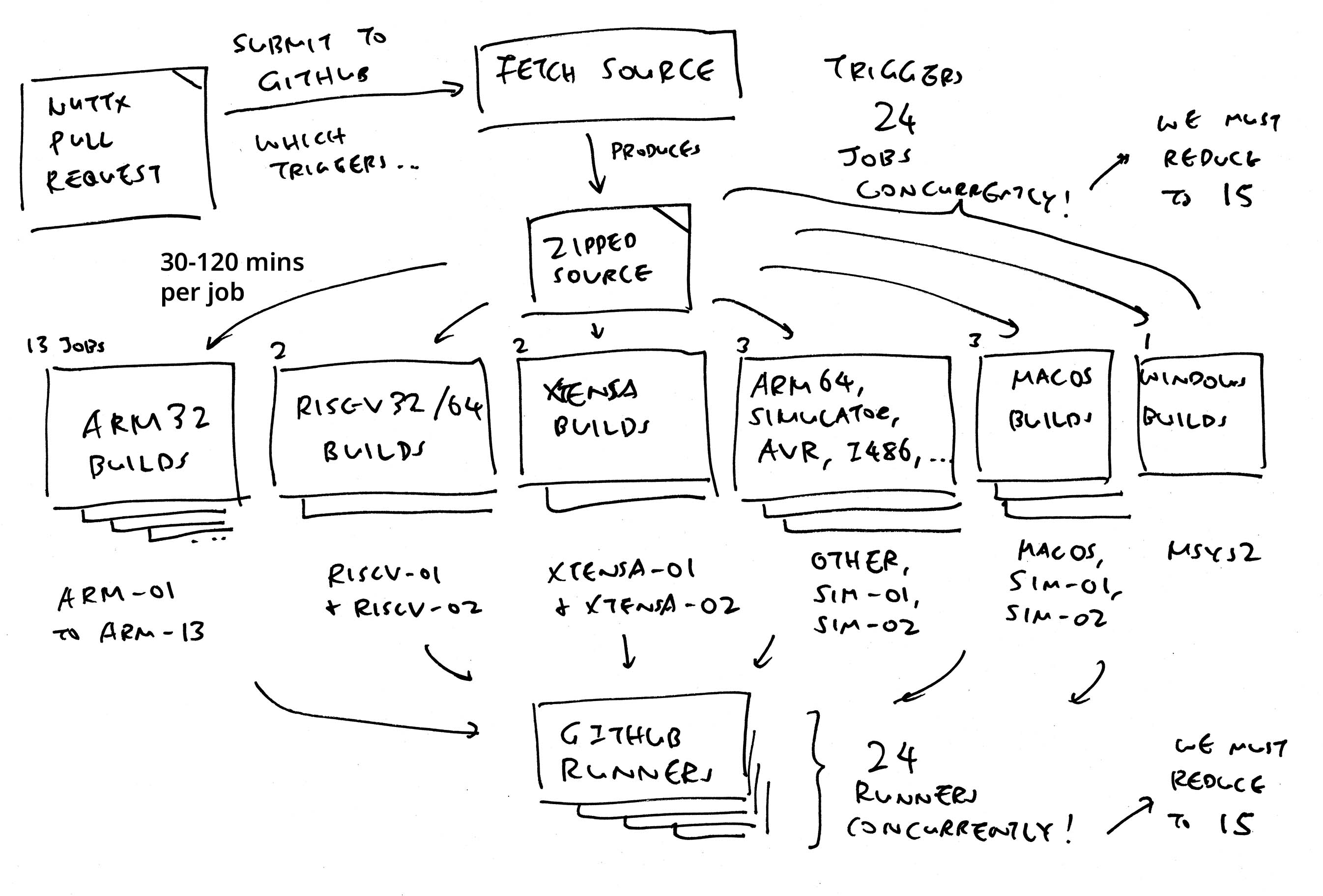

Suppose we Submit a Pull Request for NuttX. We need to be sure that our Modified Code won’t break the Existing Builds in NuttX.

That’s why our Pull Request will trigger the Continuous Integration Workflow, to recompile NuttX for All Hardware Platforms.

That’s 1,594 Build Targets across Arm, RISC-V, Xtensa, AVR, i486, Simulator and more!

What happens inside the Continuous Integration?

Head over to the NuttX Repository…

Click any one of the jobs

We’ll see this NuttX Build for Arm32…

====================================================================================

Configuration/Tool: pcduino-a10/nsh,CONFIG_ARM_TOOLCHAIN_GNU_EABI

2024-08-26 02:30:55

------------------------------------------------------------------------------------

Cleaning...

Configuring...

Enabling CONFIG_ARM_TOOLCHAIN_GNU_EABI

Building NuttX...

arm-none-eabi-ld: warning: /github/workspace/sources/nuttx/nuttx has a LOAD segment with RWX permissions

Normalize pcduino-a10/nsh

Followed by Many More Arm32 Builds…

Config: beaglebone-black/nsh

Config: at32f437-mini/eth

Config: at32f437-mini/sdcard

Config: at32f437-mini/systemview

Config: at32f437-mini/rtc

...

What’s in a NuttX Build?

Each NuttX Build will be a…

Regular NuttX Make for a NuttX Target

Or a NuttX CMake for Newer Targets

Or a Python Test for POSIX Validation

What about other NuttX Targets? Arm64, RISC-V, Xtensa, …

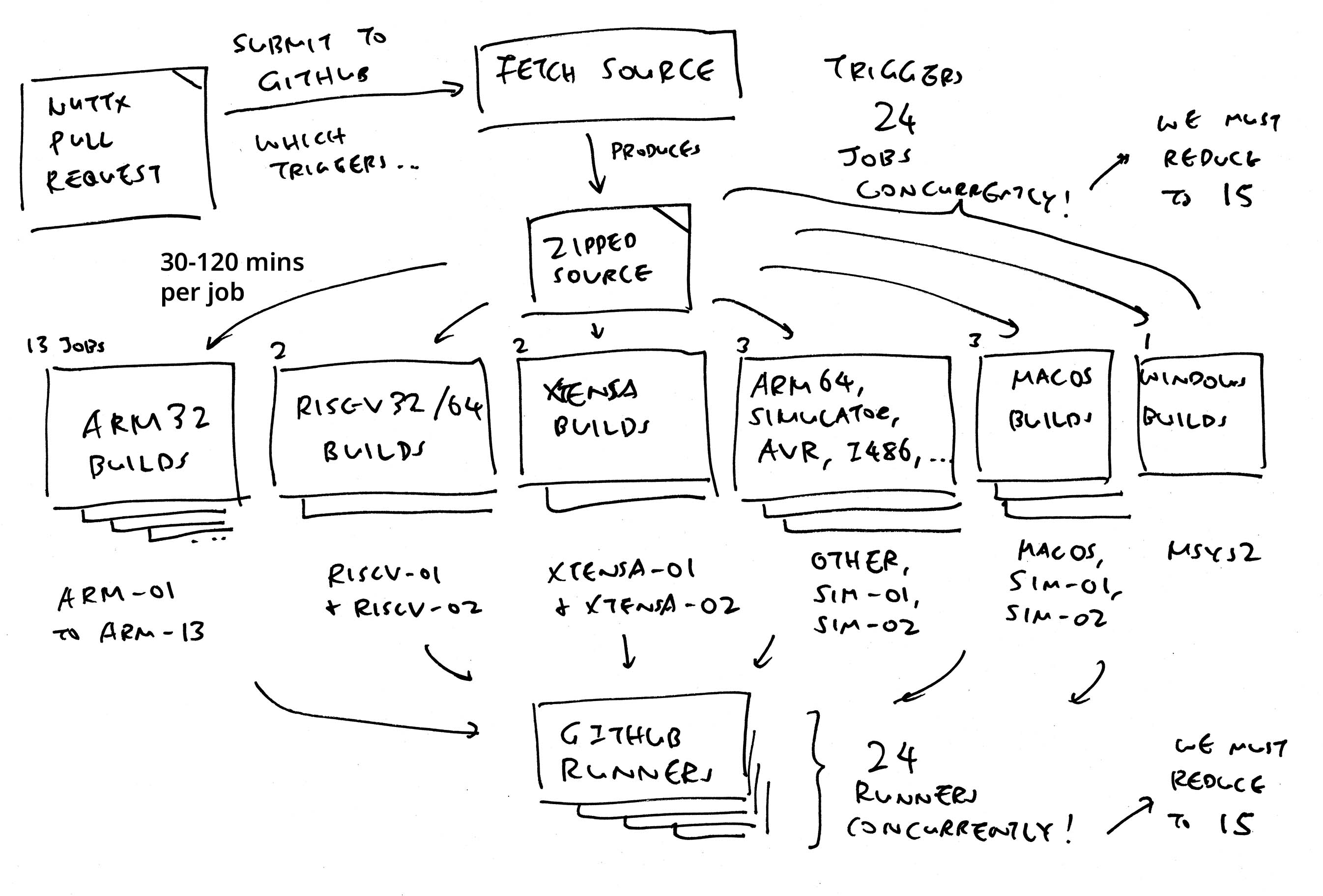

Every Pull Request will trigger 24 Jobs for Continuous Integration, all compiling concurrently…

Arm32 Targets: arm-01, arm-02, arm-03, …

RISC-V Targets: risc-v-01, …

Xtensa Targets: xtensa-01, …

Simulator Targets: sim-01, …

Other Targets (Arm64, AVR, i486, …)

macOS and Windows (msys2)

Each of the above 24 jobs will run for 30 minutes to 2 hours. After 2 hours, we’ll know for sure whether our Modified Code will break any NuttX Build!

Why will each of the 24 jobs run for up to 2 hours?

That’s because the 24 Build Jobs will recompile 1,594 NuttX Targets from scratch!

Here’s the complete list of Build Targets…

Arm32: 932 targets

(arm-01 to arm-13)

RISC-V: 212 targets

(risc-v-01, risc-v-02)

Xtensa: 195 targets

(xtensa-01, xtensa-02)

Simulator: 86 targets

(sim-01, sim-02)

Others: 72 targets

(other)

macOS and Windows: 97 targets

(macos, sim-01, sim-02, msys2)
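As a quick sanity check, the group counts listed above can be added up in a few lines of Python. This is only a sketch: the numbers are copied from the list, and the names `targets` and `share` are ours, not something from the NuttX repo.

```python
# Build Targets per group, copied from the list above
targets = {
    "Arm32": 932,
    "RISC-V": 212,
    "Xtensa": 195,
    "Simulator": 86,
    "Others": 72,
    "macOS and Windows": 97,
}

# Sum of all groups: should match the 1,594 Build Targets quoted earlier
total = sum(targets.values())


def share(group):
    """Percentage of all Build Targets that belong to one group."""
    return 100.0 * targets[group] / total


print(f"Total: {total:,} targets")
for group, count in targets.items():
    print(f"{group:18} {count:4}  {share(group):5.1f}%")
```

Arm32 alone makes up more than half of the Build Targets, which is why it is spread across 13 jobs.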

Is this a problem?

Every single Pull Request will execute 24 Build Jobs in parallel.

Which needs 24 GitHub Runners per Pull Request. And they ain’t cheap!

We experiment with Self-Hosted Runners to understand what happens inside NuttX Continuous Integration. We run them on two computers…

Older PC on Ubuntu x64 (Intel i7, 3.7 GHz)

Newer Mac Mini on macOS Arm64 (M2 Pro)

With plenty of Internet Bandwidth

In our Fork of NuttX Repo: Look for this code in the GitHub Actions Workflow: .github/workflows/build.yml

## Linux Build runs on GitHub Runners

Linux:

needs: Fetch-Source

runs-on: ubuntu-latest

Change runs-on to…

## Linux Build now runs on Self-Hosted Runners (Linux x64)

runs-on: [self-hosted, Linux, X64]

Install Self-Hosted Runners for Linux x64 and macOS Arm64…

Follow these Instructions from GitHub

Apply the Fixes for Linux Runners

And the Fixes for macOS Runners

They will run like this…

## Configure our Self-Hosted Runner for Linux x64

$ cd actions-runner

$ ./config.sh --url YOUR_REPO --token YOUR_TOKEN

Enter the name of the runner group: <Press Enter>

Enter the name of runner: <Press Enter>

This runner will have the following labels:

'self-hosted', 'Linux', 'X64'

Enter any additional labels: <Press Enter>

Enter name of work folder: <Press Enter>

## For macOS on Arm64: Runner Labels will be

## 'self-hosted', 'macOS', 'ARM64'

## Start our Self-Hosted Runner

$ ./run.sh

Current runner version: '2.319.1'

Listening for Jobs

Running job: Linux (arm-01)

Beware of Security Concerns!

Ensure that Self-Hosted Runners will run only Approved Scripts and Commands

Remember to Disable External Users from triggering GitHub Actions on our repo

Shut Down the Runners when we’re done with testing

Our Self-Hosted Runners: Do they work for NuttX Builds?

According to the result here, yep they work yay!

That’s 2 hours on a 10-year-old MacBook Pro with Intel i7 on Ubuntu.

(Compare that with GitHub Runners, which will take 30 mins per job)

Do we need a faster PC?

Not necessarily. We see some Network Throttling for our Self-Hosted Runners (in spite of our super-fast internet)…

Docker Hub will throttle our downloading of the NuttX Docker Image. Which is required for building the NuttX Targets.

If it gets too slow, cancel the GitHub Workflow and restart. Throttling will magically disappear.

Downloading the NuttX Source Code (700 MB) from GitHub takes 25 minutes.

(For GitHub Runners: Under 10 seconds!)

Can we guesstimate the time to run a Build Job?

Just browse the GitHub Actions Log for the Build Job. See the Line Numbers?

Every Build Job will have roughly 1,000 Lines of Log (by sheer coincidence). We can use this to guess the Job Duration.
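Here is a minimal sketch of that guesstimate in Python. It assumes the log grows at a roughly steady rate (a simplification, since some targets build slower than others) and that a finished job has about 1,000 lines, as observed above. The function name and parameters are hypothetical.

```python
def estimate_remaining_minutes(current_line, elapsed_minutes, expected_lines=1000):
    """Guess how many minutes a Build Job still needs.

    current_line:    latest Line Number shown in the GitHub Actions Log
    elapsed_minutes: how long the job has been running
    expected_lines:  rough log length of a completed job (assumption: 1,000)
    """
    if current_line <= 0:
        raise ValueError("current_line must be positive")
    # Fraction of the job that is done, capped at 100%
    progress = min(current_line / expected_lines, 1.0)
    # Extrapolate the total duration, then subtract the time already spent
    estimated_total = elapsed_minutes / progress
    return estimated_total - elapsed_minutes


# Example: 30 minutes in, the log is at Line 250
print(estimate_remaining_minutes(250, 30))
```

Treat the result as a rough guide only: it is a linear extrapolation, nothing more.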

What about macOS on Arm64?

Sadly the Linux Builds won’t run on macOS Arm64 because they need Docker on Linux x64…

We’ll talk about Emulating x64 on macOS Arm64. But first we run Fetch Source on macOS…

We haven’t invoked the Runners for macOS Arm64?

Fetch Source works OK on macOS Arm64. Let’s try it now.

Head over to our NuttX Repo and update the GitHub Actions Workflow: .github/workflows/build.yml

## Fetch-Source runs on GitHub Runners

jobs:

Fetch-Source:

runs-on: ubuntu-latest

Change runs-on to…

## Fetch-Source now runs on Self-Hosted Runners (macOS Arm64)

runs-on: [self-hosted, macOS, ARM64]

According to our log, Fetch Source runs OK on macOS Arm64.

(Completes in about a minute, 700 MB GitHub Uploads are surprisingly quick)

How is Fetch Source used?

Fetch Source happens before any NuttX Build. It checks out the Source Code from the NuttX Kernel Repo and NuttX Apps Repo.

Then it zips up the Source Code and passes the Zipped Source Code to the NuttX Builds.

(700 MB of zipped source code)

Anything else we can run on macOS Arm64?

Unfortunately not, we need some more fixes…

So NuttX Builds run better with a huge x64 Ubuntu PC. Can we make macOS on Arm64 more useful?

Let’s test UTM Emulator for macOS Arm64, to emulate Ubuntu x64. (Spoiler: It’s really slow!)

On a super-duper Mac Mini (M2 Pro, 32 GB RAM): We can emulate an Intel i7 PC with 32 CPUs and 4 GB RAM (because we don’t need much RAM)

Remember to Force Multicore and bump up the JIT Cache…

Ubuntu Disk Space in the UTM Virtual Machine needs to be big enough for NuttX Docker Image…

$ neofetch

OS: Ubuntu 24.04.1 LTS x86_64

Host: KVM/QEMU (Standard PC (Q35 + ICH9, 2009) pc-q35-7.2)

Kernel: 6.8.0-41-generic

CPU: Intel i7 9xx (Nehalem i7, IBRS update) (16) @ 1.000GHz

GPU: 00:02.0 Red Hat, Inc. Virtio 1.0 GPU

Memory: 1153MiB / 3907MiB

$ df -H

Filesystem Size Used Avail Use% Mounted on

tmpfs 410M 1.7M 409M 1% /run

/dev/sda2 67G 31G 33G 49% /

tmpfs 2.1G 0 2.1G 0% /dev/shm

tmpfs 5.3M 8.2k 5.3M 1% /run/lock

efivarfs 263k 57k 201k 23% /sys/firmware/efi/efivars

/dev/sda1 1.2G 6.5M 1.2G 1% /boot/efi

tmpfs 410M 115k 410M 1% /run/user/1000

$ cd actions-runner

$ ./run.sh

Connected to GitHub

Current runner version: '2.319.1'

02:33:17Z: Listening for Jobs

02:33:23Z: Running job: Linux (arm-04)

06:47:38Z: Job Linux (arm-04) completed with result: Succeeded

06:47:43Z: Running job: Linux (arm-01)

During Run Builds: CPU hits 100%…

(Don’t leave System Monitor running, it consumes quite a bit of CPU!)

If the CI Job doesn’t complete in 6 hours: GitHub will cancel it! So we should give it as much CPU as possible.
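To see how close we get to that limit, we can subtract the two timestamps that the runner printed for arm-04. The sketch below assumes both timestamps fall within 24 hours of each other, and takes the 6-hour limit from the text above. The helper name `elapsed` is ours.

```python
from datetime import datetime, timedelta

# Job time limit, as stated in the text above
GITHUB_JOB_LIMIT = timedelta(hours=6)


def elapsed(start, end):
    """Time between two runner log timestamps like '02:33:23Z'."""
    fmt = "%H:%M:%SZ"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    # Assumption: if the job crossed midnight, the end wraps to the next day
    if delta < timedelta(0):
        delta += timedelta(days=1)
    return delta


# Timestamps copied from the runner log for Linux (arm-04)
arm04 = elapsed("02:33:23Z", "06:47:38Z")
headroom = GITHUB_JOB_LIMIT - arm04

print("arm-04 took:", arm04)
print("Headroom before cancellation:", headroom)
```

So arm-04 finished with under 2 hours to spare on the emulated machine.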

Why emulate 32 CPUs? That’s because we want to max out the macOS Arm64 CPU Utilisation. Our chance to watch Mac Mini run smokin’ hot!

Results of macOS Arm64 emulating Ubuntu x64 (24.04.1 LTS) with 4GB RAM…

(Incomplete. Timeout after 6 hours sigh)

(Completed in 1.7 hours, vs 1 hour for GitHub Runners)

(Completed in 4 hours, vs 0.5 hours for GitHub Runners)

(Completed in 4 hours, vs 0.5 hours for GitHub Runners)

Does UTM Emulator work for NuttX Builds? Yeah kinda…

But how long to build? 4 hours!

(Instead of 33 mins for GitHub Runners)

What if we run a Self-Hosted Runner inside a Docker Container on macOS Arm64? (Rancher Desktop)

But it becomes a Linux Arm64 Runner, not a Linux x64 Runner. Which won’t work with our current NuttX Docker Image, which is x64 only…

Unless we create a Linux Arm64 Docker Image? Like for Compiling RISC-V Platforms.
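There is a second catch: GitHub only hands a job to a Self-Hosted Runner that carries every label listed in runs-on. The sketch below models that rule with plain Python sets. It is our own simplified model, not GitHub's code, and we assume the default labels for a Linux Arm64 runner are 'self-hosted', 'Linux', 'ARM64'.

```python
def runner_accepts(job_runs_on, runner_labels):
    """True if the runner has every label that the job asks for."""
    return set(job_runs_on) <= set(runner_labels)


# Labels requested by our modified Linux Build
linux_job = ["self-hosted", "Linux", "X64"]

# Runner on our Ubuntu x64 PC (labels from the config.sh output)
ubuntu_pc = ["self-hosted", "Linux", "X64"]

# Runner inside a Docker Container on macOS Arm64 (assumed default labels)
docker_on_mac = ["self-hosted", "Linux", "ARM64"]

print(runner_accepts(linux_job, ubuntu_pc))      # Job can run here
print(runner_accepts(linux_job, docker_on_mac))  # Job is never picked up
```

So besides building an Arm64 Docker Image, we would also need to change runs-on (or add a custom label) before the containerised runner receives any Linux Build.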

We’ll chat about this in NuttX Discord Channel.

According to ASF Policy: We should reduce to 15 Concurrent GitHub Runners (we’re now at 24 concurrent runners). How?

We could review the 1,594 Build Targets and decide which targets should be excluded. Or reprioritised to run earlier / later.

We could run All 1,594 Builds only when the PR is Approved. So we can save on Build Times for the Submission / Resubmission of the PR.

We need a quicker way to “Fail Fast” and (in case of failure) prevent other Build Jobs from running. Which will reduce the number of Runners.

What if the Build Jobs that are impacted by the Modified Code in the PR could Start Earlier than the rest?

So if I modify something for Ox64 BL808 SBC, it should start the CI Job for ox64:nsh. If it fails, then don’t bother with the rest of the Arm / RISC-V / Simulator jobs.

(Maybe we dump the NuttX ELF Disassembly and figure out which Source Files are used for which NuttX Targets?)
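One simple way to approximate this idea, without any disassembly, is to look at the paths of the Modified Files. The sketch below is hypothetical: it assumes files live under `arch/<architecture>/` or `boards/<architecture>/`, and the mapping from architecture to Build Jobs is our own guess based on the job names in this article.

```python
# All 20 Linux Build Jobs named in this article
ALL_JOBS = (
    [f"arm-{n:02d}" for n in range(1, 14)]
    + ["other", "risc-v-01", "risc-v-02", "sim-01", "sim-02",
       "xtensa-01", "xtensa-02"]
)

# Hypothetical mapping: architecture folder -> Build Jobs to start first
ARCH_TO_JOBS = {
    "arm": [f"arm-{n:02d}" for n in range(1, 14)],
    "risc-v": ["risc-v-01", "risc-v-02"],
    "sim": ["sim-01", "sim-02"],
    "xtensa": ["xtensa-01", "xtensa-02"],
}


def prioritise(changed_files):
    """Return all jobs, with those impacted by the changed files first."""
    first = []
    for path in changed_files:
        parts = path.split("/")
        # Only arch/<arch>/... and boards/<arch>/... tell us the architecture
        if len(parts) >= 2 and parts[0] in ("arch", "boards"):
            for job in ARCH_TO_JOBS.get(parts[1], []):
                if job not in first:
                    first.append(job)
    rest = [job for job in ALL_JOBS if job not in first]
    return first + rest


# Example: a PR that touches only the Ox64 board config (path is illustrative)
print(prioritise(["boards/risc-v/bl808/ox64/configs/nsh/defconfig"])[:3])
```

This only reorders the jobs. Cancelling the remaining jobs when an early one fails would still need support in the workflow itself.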

Check out the updates for NuttX Continuous Integration in these articles…

“Optimising the Continuous Integration for Apache NuttX RTOS (GitHub Actions)”

“Continuous Integration Dashboard for Apache NuttX RTOS (Prometheus and Grafana)”

“Failing a Continuous Integration Test for Apache NuttX RTOS (QEMU RISC-V)”

“(Experimental) Mastodon Server for Apache NuttX Continuous Integration (macOS Rancher Desktop)”

“Test Bot for Pull Requests … Tested on Real Hardware (Apache NuttX RTOS / Oz64 SG2000 RISC-V SBC)”

Got a question, comment or suggestion? Create an Issue or submit a Pull Request here…

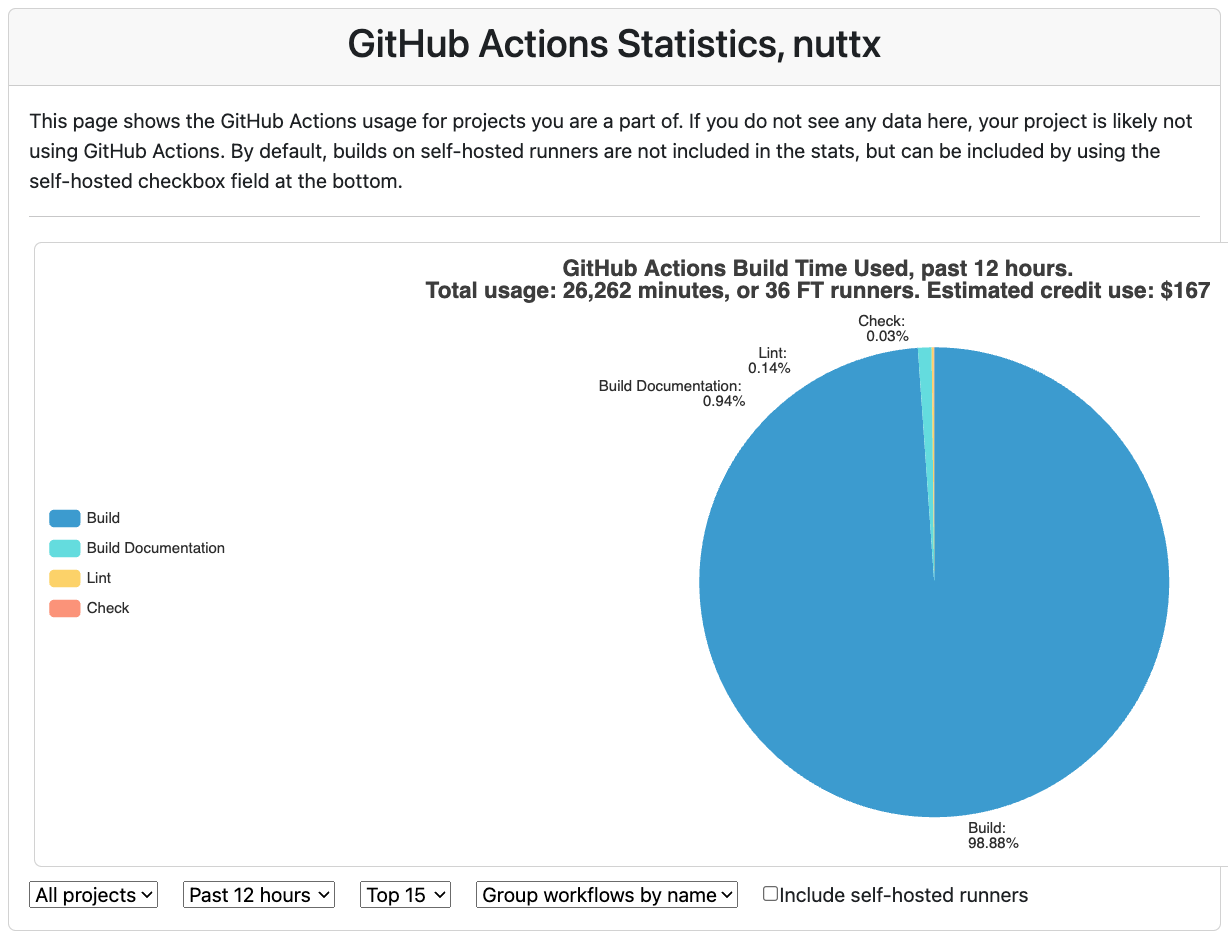

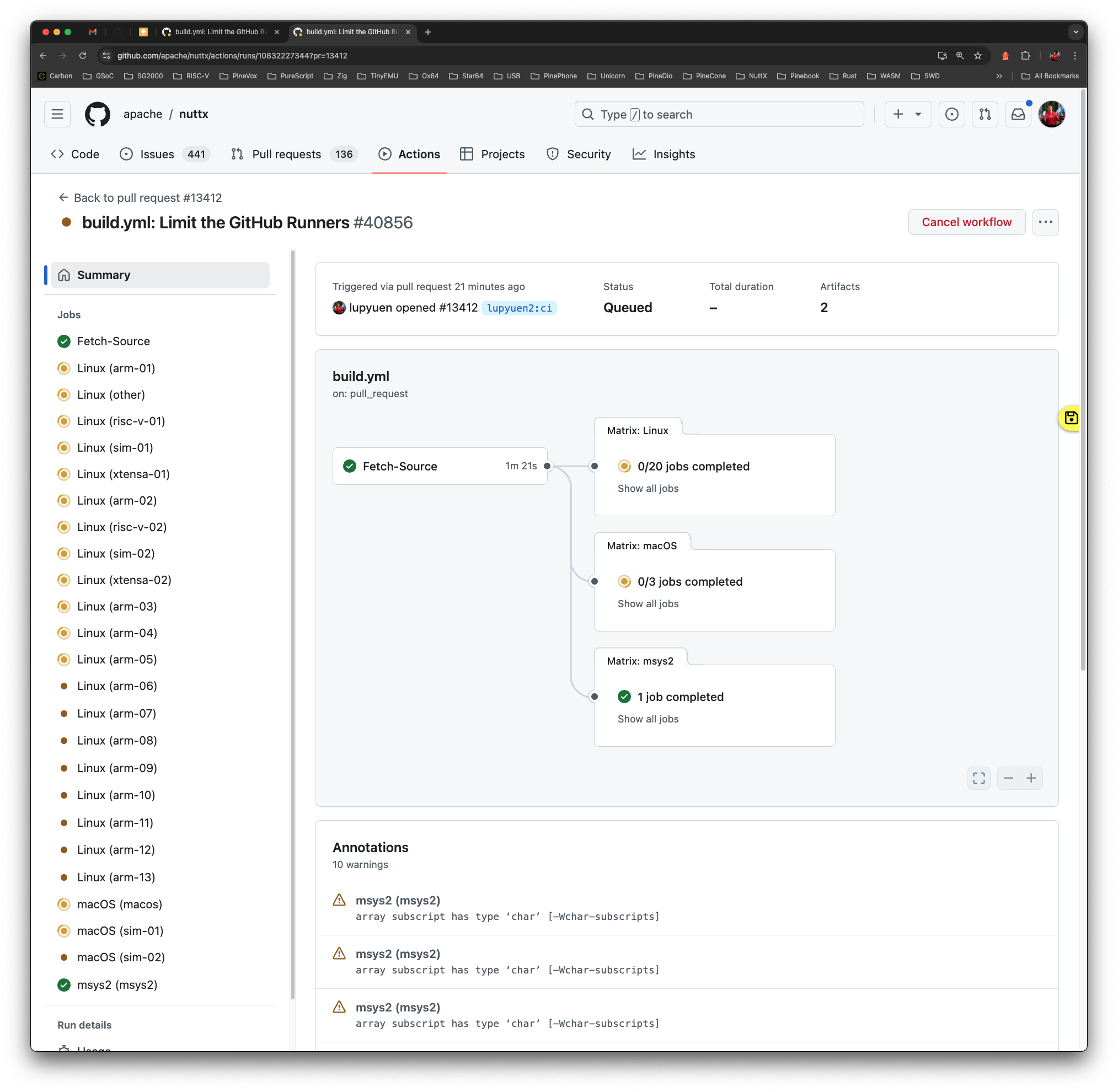

We’re modifying NuttX CI (Continuous Integration) and GitHub Actions, to comply with ASF Policy. Unfortunately, these changes will extend the Build Duration for a NuttX Pull Request by roughly 15 mins, from 2 hours to 2.25 hours.

Right now, every NuttX Pull Request will trigger 24 Concurrent Jobs (GitHub Runners), executing them in parallel.

According to ASF Policy: We should run at most 15 Concurrent Jobs.

Thus we’ll cut down the Concurrent Jobs from 24 down to 15 (pic above). That’s 12 Linux Jobs, 2 macOS, 1 Windows. (Each job takes 30 mins to 2 hours)

(1) Right now our “Linux > Strategy” is a flat list of 20 Linux Jobs, all executed in parallel…

Linux:

needs: Fetch-Source

runs-on: ubuntu-latest

env:

DOCKER_BUILDKIT: 1

strategy:

matrix:

boards: [arm-01, arm-02, arm-03, arm-04, arm-05, arm-06, arm-07, arm-08, arm-09, arm-10, arm-11, arm-12, arm-13, other, risc-v-01, risc-v-02, sim-01, sim-02, xtensa-01, xtensa-02]

(2) We change “Linux > Strategy” to prioritise by Target Architecture, and limit to 12 concurrent jobs…

Linux:

needs: Fetch-Source

runs-on: ubuntu-latest

env:

DOCKER_BUILDKIT: 1

strategy:

max-parallel: 12

matrix:

boards: [

arm-01, other, risc-v-01, sim-01, xtensa-01,

arm-02, risc-v-02, sim-02, xtensa-02,

arm-03, arm-04, arm-05, arm-06, arm-07, arm-08, arm-09, arm-10, arm-11, arm-12, arm-13

]

(3) So NuttX CI will initially execute 12 Build Jobs across Arm32, Arm64, RISC-V, Simulator and Xtensa. As they complete, NuttX CI will execute the remaining 8 Build Jobs (for Arm32).

(4) This will extend the Overall Build Duration from 2 hours to 2.25 hours
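To get a feel for how max-parallel changes the Overall Build Duration, here is a small simulation. It is a sketch under two assumptions: jobs start in the order they appear in the matrix (GitHub does not promise this), and every job takes the same 60 minutes (a made-up figure, real jobs take 30 mins to 2 hours).

```python
import heapq

# Job order from the new "Linux > Strategy" matrix
boards = [
    "arm-01", "other", "risc-v-01", "sim-01", "xtensa-01",
    "arm-02", "risc-v-02", "sim-02", "xtensa-02",
    "arm-03", "arm-04", "arm-05", "arm-06", "arm-07", "arm-08",
    "arm-09", "arm-10", "arm-11", "arm-12", "arm-13",
]
MAX_PARALLEL = 12


def makespan(jobs, minutes, max_parallel):
    """Finish time when jobs start in list order, at most max_parallel at once."""
    running = []  # Min-heap of finish times for the jobs in progress
    finish = 0.0
    for job in jobs:
        # If all slots are busy, wait for the earliest job to complete
        start = heapq.heappop(running) if len(running) >= max_parallel else 0.0
        end = start + minutes[job]
        heapq.heappush(running, end)
        finish = max(finish, end)
    return finish


first_wave = boards[:MAX_PARALLEL]   # The 12 jobs that start immediately
queued = boards[MAX_PARALLEL:]       # The 8 Arm32 jobs that wait for a slot

# Hypothetical durations: every job takes 60 minutes
minutes = {board: 60.0 for board in boards}
print("All 20 in parallel:", makespan(boards, minutes, 20), "mins")
print("Max 12 in parallel:", makespan(boards, minutes, MAX_PARALLEL), "mins")
```

With equal durations the total doubles. The real increase is much smaller (2 hours to 2.25 hours), presumably because many jobs finish well before the slowest ones, so the queued Arm32 jobs start early.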

(5) We also limit macOS Jobs to 2, Windows Jobs to 1. Here’s the Merged PR for NuttX Kernel. And the same for NuttX Apps.

macOS:

permissions:

contents: none

runs-on: macos-13

needs: Fetch-Source

strategy:

max-parallel: 2

matrix:

boards: [macos, sim-01, sim-02]

...

msys2:

needs: Fetch-Source

runs-on: windows-latest

strategy:

fail-fast: false

max-parallel: 1

matrix:

boards: [msys2]

Read on for Phase 2…

UPDATE: We should also skip the Builds for Unmodified Architectures. And we’ll skip macos + msys2 for Simple PRs.

For Phase 2: We should “rebalance” the Build Targets. Move the Newer or Higher Priority or Riskier Targets to arm-01, risc-v-01, sim-01, xtensa-01.

Hopefully this will allow NuttX CI to Fail Faster (for breaking changes), and prevent unnecessary builds (also reduce waiting time).

We should probably balance arm-01, risc-v-01, sim-01, xtensa-01 so they run in about 30 mins consistently.
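Balancing jobs so they take about the same time is a classic scheduling problem. The sketch below uses the greedy "longest first" heuristic: sort the targets by build time, then keep giving the next target to the job with the least work so far. It is an illustration, not what NuttX CI does today. The target names and build times in the example are made up.

```python
import heapq


def balance(target_minutes, job_names):
    """Assign targets to jobs so the total minutes per job are roughly equal.

    target_minutes: dict of target name -> estimated build time in minutes
    job_names:      list of job names to fill
    """
    # Min-heap of (minutes assigned so far, job name)
    heap = [(0.0, name) for name in job_names]
    heapq.heapify(heap)
    plan = {name: [] for name in job_names}
    # Longest builds first, each one goes to the least loaded job
    for target, minutes in sorted(target_minutes.items(), key=lambda kv: -kv[1]):
        load, name = heapq.heappop(heap)
        plan[name].append(target)
        heapq.heappush(heap, (load + minutes, name))
    return plan


# Hypothetical targets and build times (minutes)
example = {"board-a": 10, "board-b": 8, "board-c": 6, "board-d": 4, "board-e": 2}
print(balance(example, ["job-1", "job-2"]))
```

In this example job-1 ends up with 16 minutes of work and job-2 with 14. For the real thing we would need measured build times per target, perhaps taken from the GitHub Actions Logs.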

Read on for Phase 3…

For Phase 3: We should migrate most of the NuttX Targets to a Daily Job for Build and Test.

Check out the discussion here.

And this discussion too.

To run the Self-Hosted Runners on Ubuntu x64, we need these fixes…

## TODO: Install Docker Engine: https://docs.docker.com/engine/install/ubuntu/

## TODO: Apply this fix: https://stackoverflow.com/questions/48957195/how-to-fix-docker-got-permission-denied-issue

## Note: podman won't work

## NuttX CI needs to save files in `/github`, so we create it

## TODO: How to give each runner its own `/github` folder? Do we mount in Docker?

mkdir -p $HOME/github/home

mkdir -p $HOME/github/workspace

sudo ln -s $HOME/github /github

ls -l /github/home

## TODO: Clean up after every job, then restart the runner

sudo rm -rf $HOME/actions-runner/_work/runner-nuttx

cd $HOME/actions-runner

./run.sh

## TODO: In case of timeout after 6 hours:

## Restart the Ubuntu Machine, because the tasks are still running in background!

Why Docker Engine? Not Podman Docker?

Podman Docker on Linux x64 fails with this error. Might be a problem with Podman…

Writing manifest to image destination

Error: statfs /var/run/docker.sock: permission denied

Docker Engine fails with a similar error…

permission denied while trying to connect to the Docker daemon socket at unix:///var/run/docker.sock: Post "http://%2Fvar%2Frun%2Fdocker.sock/v1.47/images/create?fromImage=ghcr.io%2Fapache%2Fnuttx%2Fapache-nuttx-ci-linux&tag=latest": dial unix /var/run/docker.sock: connect: permission denied

That’s why we apply this Docker Fix.

To run the Self-Hosted Runners on macOS Arm64, we need these fixes…

sudo mkdir /Users/runner

sudo chown $USER /Users/runner

sudo chgrp staff /Users/runner

ls -ld /Users/runner

We have challenges running NuttX CI on macOS Arm64…

Build macOS (macos / sim-01 / sim-02) on macOS Arm64: setup-python will hang because it’s prompting for password. So we comment out setup-python.

Run actions/setup-python@v5

Installed versions

Version 3.8 was not found in the local cache

Version 3.8 is available for downloading

Download from "https://github.com/actions/python-versions/releases/download/3.8.10-8879978422/python-3.8.10-darwin-arm64.tar.gz"

Extract downloaded archive

/usr/bin/tar xz -C /Users/luppy/actions-runner2/_work/_temp/2e138b05-b7c9-4759-956a-7283af148721 -f /Users/luppy/actions-runner2/_work/_temp/792ffa3a-a28f-4443-91c8-0d81f55e422f

Execute installation script

Check if Python hostedtoolcache folder exist...

Install Python binaries from prebuilt package

Then it fails while downloading the toolchain:

+ wget --quiet https://developer.arm.com/-/media/Files/downloads/gnu/13.2.rel1/binrel/arm-gnu-toolchain-13.2.rel1-darwin-x86_64-arm-none-eabi.tar.xz

+ xz -d arm-gnu-toolchain-13.2.rel1-darwin-x86_64-arm-none-eabi.tar.xz

xz: arm-gnu-toolchain-13.2.rel1-darwin-x86_64-arm-none-eabi.tar.xz: Unexpected end of input

Retry and it fails at objcopy sigh:

+ rm -f /Users/luppy/actions-runner3/_work/runner-nuttx/runner-nuttx/sources/tools/bintools/bin/objcopy

+ ln -s /usr/local/opt/binutils/bin/objcopy /Users/luppy/actions-runner3/_work/runner-nuttx/runner-nuttx/sources/tools/bintools/bin/objcopy

+ command objcopy --version

+ objcopy --version

/Users/luppy/actions-runner3/_work/runner-nuttx/runner-nuttx/sources/nuttx/tools/ci/platforms/darwin.sh: line 93: objcopy: command not found

TODO: Do we change the toolchain from x64 to Arm64?

Our Self-Hosted Runners: Do they work for Documentation Build?

Documentation on macOS Arm64: Hangs at setup-python because it prompts for password:

Run actions/setup-python@v5

Installed versions

Version 3.8 was not found in the local cache

Version 3.8 is available for downloading

Download from "https://github.com/actions/python-versions/releases/download/3.8.10-8879978422/python-3.8.10-darwin-arm64.tar.gz"

Extract downloaded archive

/usr/bin/tar xz -C /Users/luppy/actions-runner2/_work/_temp/2e138b05-b7c9-4759-956a-7283af148721 -f /Users/luppy/actions-runner2/_work/_temp/792ffa3a-a28f-4443-91c8-0d81f55e422f

Execute installation script

Check if Python hostedtoolcache folder exist...

Install Python binaries from prebuilt package

And it won’t work on macOS because it needs apt: workflows/doc.yml

- name: Install LaTeX packages

run: |

sudo apt-get update -y

sudo apt-get install -y \

texlive-latex-recommended texlive-fonts-recommended \

texlive-latex-base texlive-latex-extra latexmk texlive-luatex \

fonts-freefont-otf xindy

Documentation on Linux Arm64: Fails at setup-python

Run actions/setup-python@v5

Installed versions

Version 3.8 was not found in the local cache

Error: The version '3.8' with architecture 'arm64' was not found for Debian 12.

The list of all available versions can be found here: https://raw.githubusercontent.com/actions/python-versions/main/versions-manifest.json

So we comment out setup-python. Then it fails with pip3 not found:

pip3: command not found

TODO: Switch to pipenv

Documentation on Linux x64: Fails with rmdir error

Copying '/home/luppy/.gitconfig' to '/home/luppy/actions-runner/_work/_temp/8c370e2f-3f8f-4e01-b8f2-1ccb301640a1/.gitconfig'

Temporarily overriding HOME='/home/luppy/actions-runner/_work/_temp/8c370e2f-3f8f-4e01-b8f2-1ccb301640a1' before making global git config changes

Adding repository directory to the temporary git global config as a safe directory

/usr/bin/git config --global --add safe.directory /home/luppy/actions-runner/_work/runner-nuttx/runner-nuttx

Deleting the contents of '/home/luppy/actions-runner/_work/runner-nuttx/runner-nuttx'

Error: File was unable to be removed Error: EACCES: permission denied, rmdir '/home/luppy/actions-runner/_work/runner-nuttx/runner-nuttx/buildartifacts/at32f437-mini'

TODO: Check the rmdir directory

Let’s watch the Ubuntu x64 Runner in action…

On an Intel PC

On macOS Arm64 with UTM Emulator

Our 10-year-old MacBook Pro (Intel i7) hits 100% when running the Linux Build for NuttX CI. But it works!

On a super-duper Mac Mini (M2 Pro, 32 GB RAM): We can emulate an Intel i7 PC with 32 CPUs and 4 GB RAM (because we don’t need much RAM)

Remember to Force Multicore and bump up the JIT Cache…

Ubuntu Disk Space in the UTM Virtual Machine needs to be big enough for NuttX Docker Image…

$ neofetch

user@ubuntu-emu-arm64

OS: Ubuntu 24.04.1 LTS x86_64

Host: KVM/QEMU (Standard PC (Q35 + ICH9, 2009) pc-q35-7.2)

Kernel: 6.8.0-41-generic

Uptime: 1 min

Packages: 1546 (dpkg), 10 (snap)

Shell: bash 5.2.21

Resolution: 1280x800

Terminal: /dev/pts/1

CPU: Intel i7 9xx (Nehalem i7, IBRS update) (16) @ 1.000GHz

GPU: 00:02.0 Red Hat, Inc. Virtio 1.0 GPU

Memory: 1153MiB / 3907MiB

$ df -H

Filesystem Size Used Avail Use% Mounted on

tmpfs 410M 1.7M 409M 1% /run

/dev/sda2 67G 31G 33G 49% /

tmpfs 2.1G 0 2.1G 0% /dev/shm

tmpfs 5.3M 8.2k 5.3M 1% /run/lock

efivarfs 263k 57k 201k 23% /sys/firmware/efi/efivars

/dev/sda1 1.2G 6.5M 1.2G 1% /boot/efi

tmpfs 410M 115k 410M 1% /run/user/1000

During Download Source Artifact: GitHub seems to be throttling the download (total 700 MB over 25 mins)

During Run Builds: CPU hits 100%

Note: Don’t leave System Monitor running, it consumes quite a bit of CPU!

If the CI Job doesn’t complete in 6 hours: GitHub will cancel it! So we should give it as much CPU as possible.

Why emulate 32 CPUs? That’s because we want to max out the macOS Arm64 CPU Utilisation. Here’s our chance to watch Mac Mini run smokin’ hot!

Here’s how it runs:

$ cd actions-runner/

$ sudo rm -rf _work/runner-nuttx

$ df -H

Filesystem Size Used Avail Use% Mounted on

tmpfs 410M 1.7M 408M 1% /run

/dev/sda2 67G 28G 35G 45% /

tmpfs 2.1G 0 2.1G 0% /dev/shm

tmpfs 5.3M 8.2k 5.3M 1% /run/lock

efivarfs 263k 130k 128k 51% /sys/firmware/efi/efivars

/dev/sda1 1.2G 6.5M 1.2G 1% /boot/efi

tmpfs 410M 119k 410M 1% /run/user/1000

$ ./run.sh

Connected to GitHub

Current runner version: '2.319.1'

2024-08-30 02:33:17Z: Listening for Jobs

2024-08-30 02:33:23Z: Running job: Linux (arm-04)

2024-08-30 06:47:38Z: Job Linux (arm-04) completed with result: Succeeded

2024-08-30 06:47:43Z: Running job: Linux (arm-01)

Here are the Runner Options:

$ ./run.sh --help

Commands:

./config.sh Configures the runner

./config.sh remove Unconfigures the runner

./run.sh Runs the runner interactively. Does not require any options.

Options:

--help Prints the help for each command

--version Prints the runner version

--commit Prints the runner commit

--check Check the runner's network connectivity with GitHub server

Config Options:

--unattended Disable interactive prompts for missing arguments. Defaults will be used for missing options

--url string Repository to add the runner to. Required if unattended

--token string Registration token. Required if unattended

--name string Name of the runner to configure (default ubuntu-emu-arm64)

--runnergroup string Name of the runner group to add this runner to (defaults to the default runner group)

--labels string Custom labels that will be added to the runner. This option is mandatory if --no-default-labels is used.

--no-default-labels Disables adding the default labels: 'self-hosted,Linux,X64'

--local Removes the runner config files from your local machine. Used as an option to the remove command

--work string Relative runner work directory (default _work)

--replace Replace any existing runner with the same name (default false)

--pat GitHub personal access token with repo scope. Used for checking network connectivity when executing `./run.sh --check`

--disableupdate Disable self-hosted runner automatic update to the latest released version`

--ephemeral Configure the runner to only take one job and then let the service un-configure the runner after the job finishes (default false)

Examples:

Check GitHub server network connectivity:

./run.sh --check --url <url> --pat <pat>

Configure a runner non-interactively:

./config.sh --unattended --url <url> --token <token>

Configure a runner non-interactively, replacing any existing runner with the same name:

./config.sh --unattended --url <url> --token <token> --replace [--name <name>]

Configure a runner non-interactively with three extra labels:

./config.sh --unattended --url <url> --token <token> --labels L1,L2,L3

Runner listener exit with 0 return code, stop the service, no retry needed.

Exiting runner...