📝 10 Nov 2024

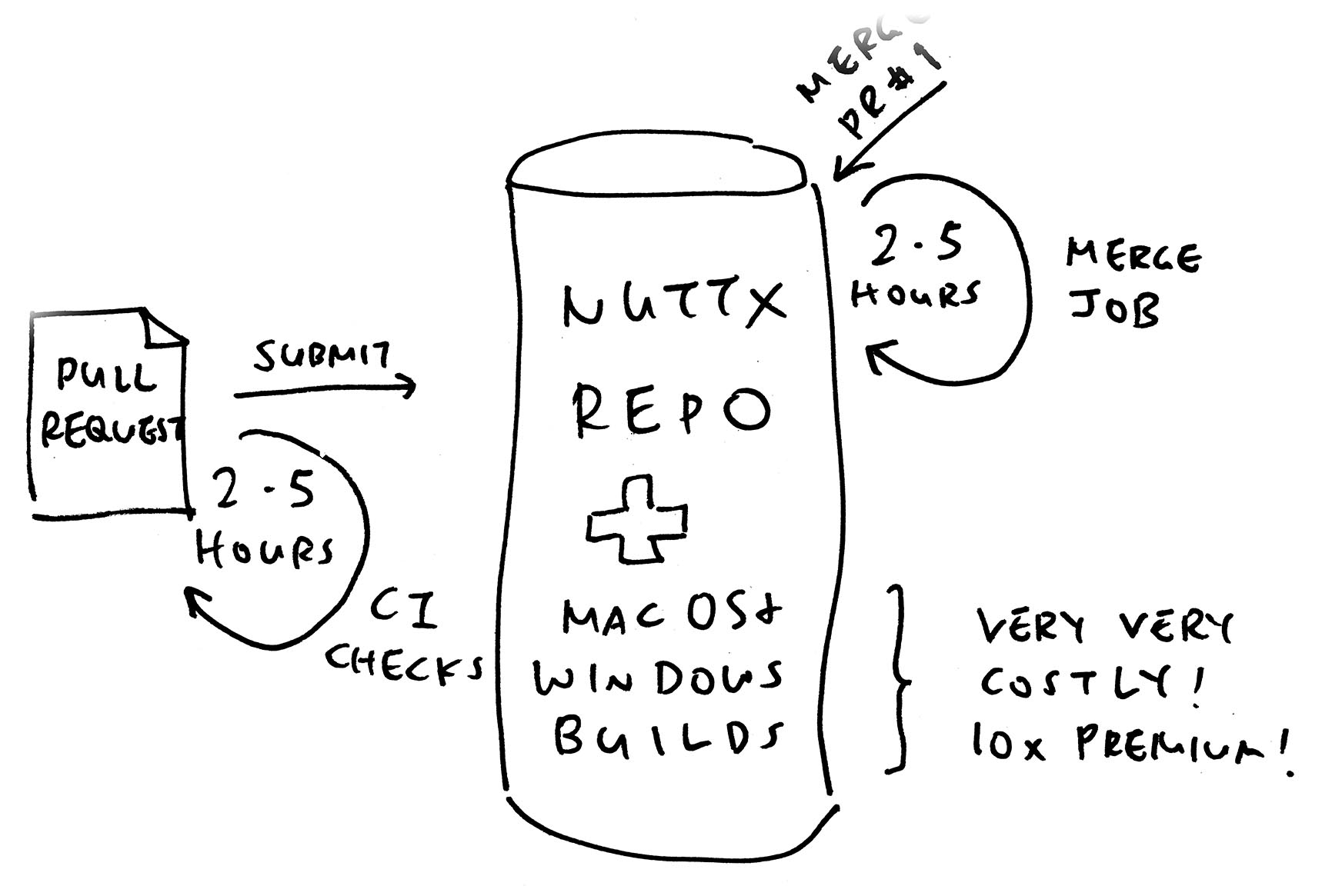

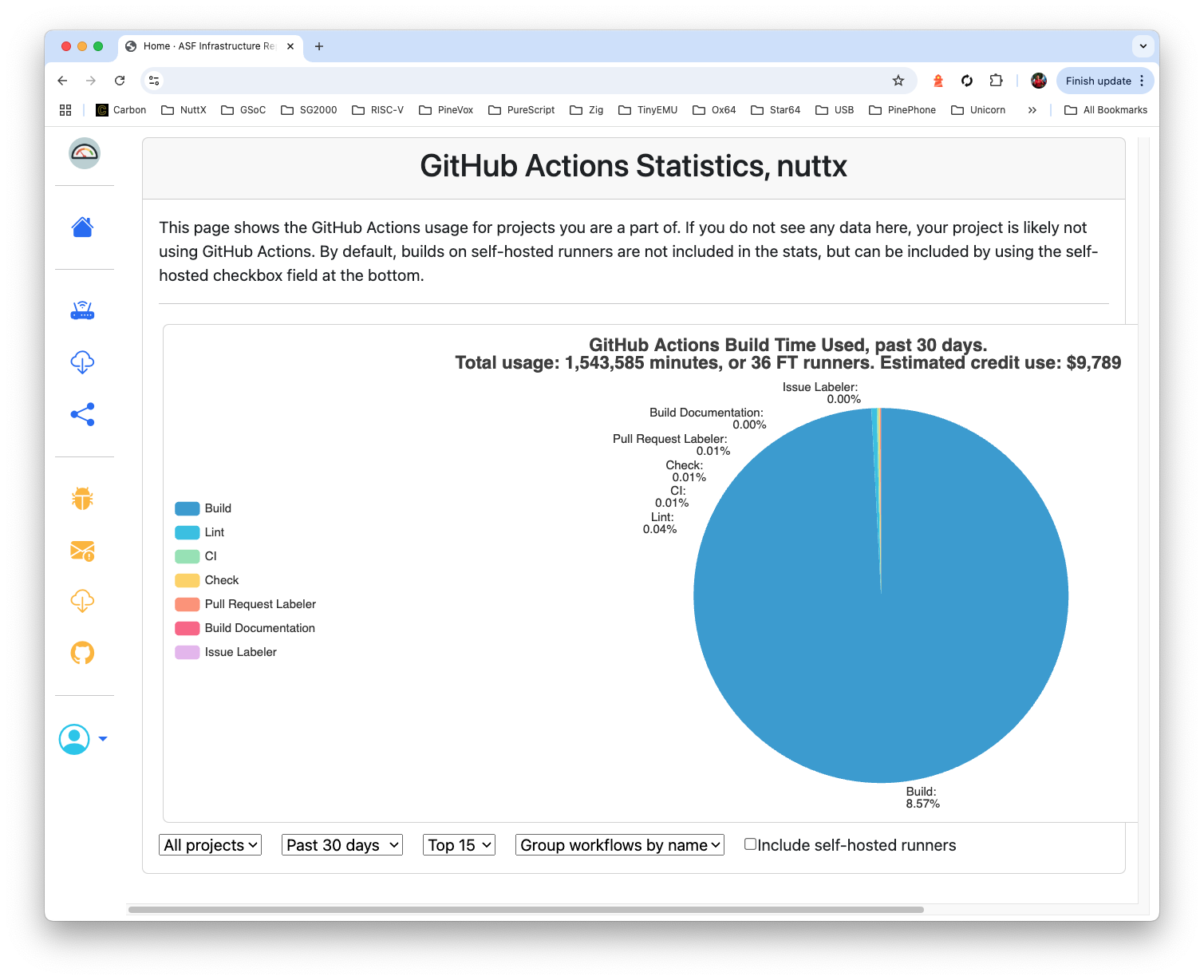

Within Two Weeks: We squashed our GitHub Actions spending from $4,900 (weekly) down to $890…

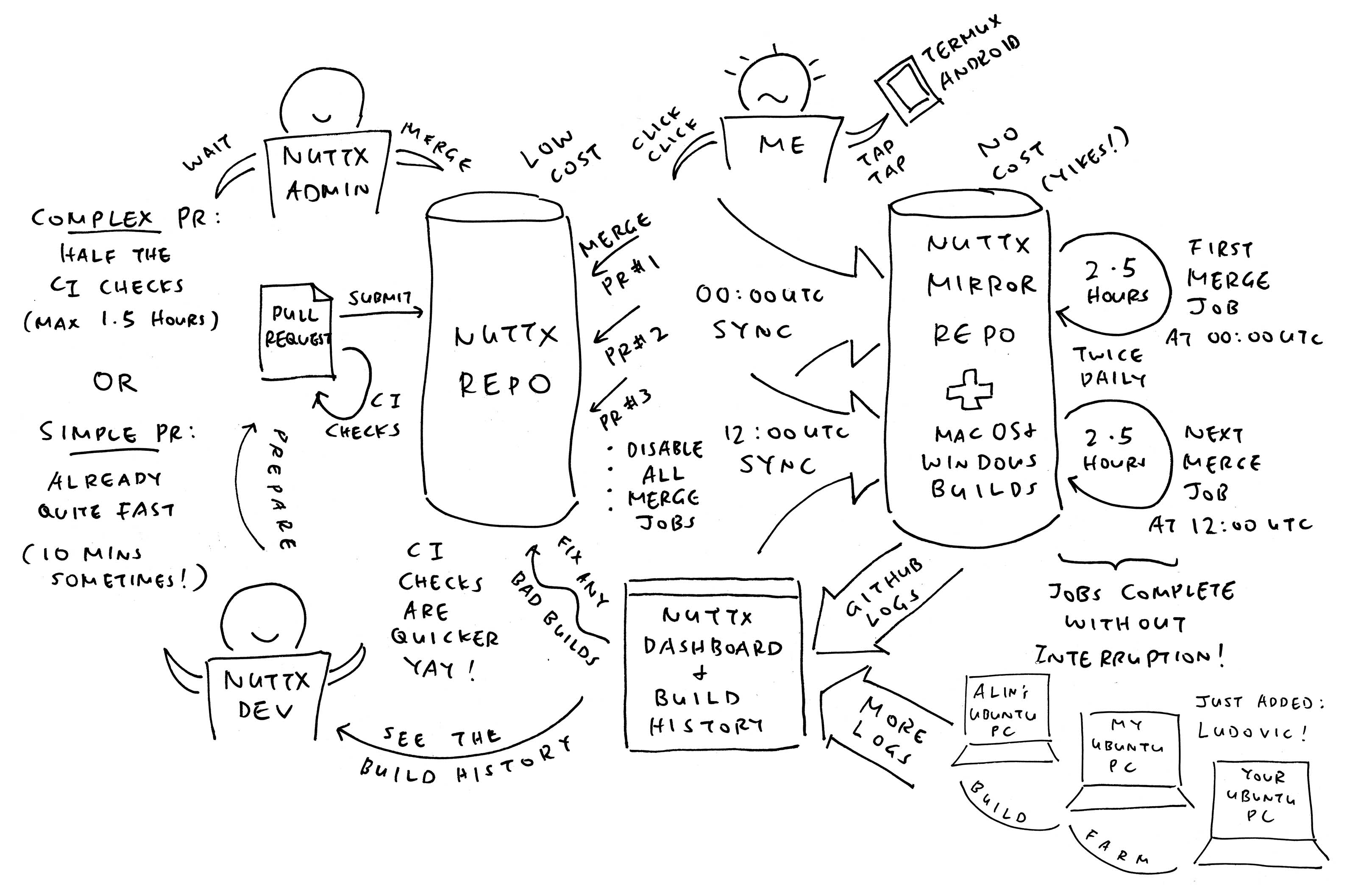

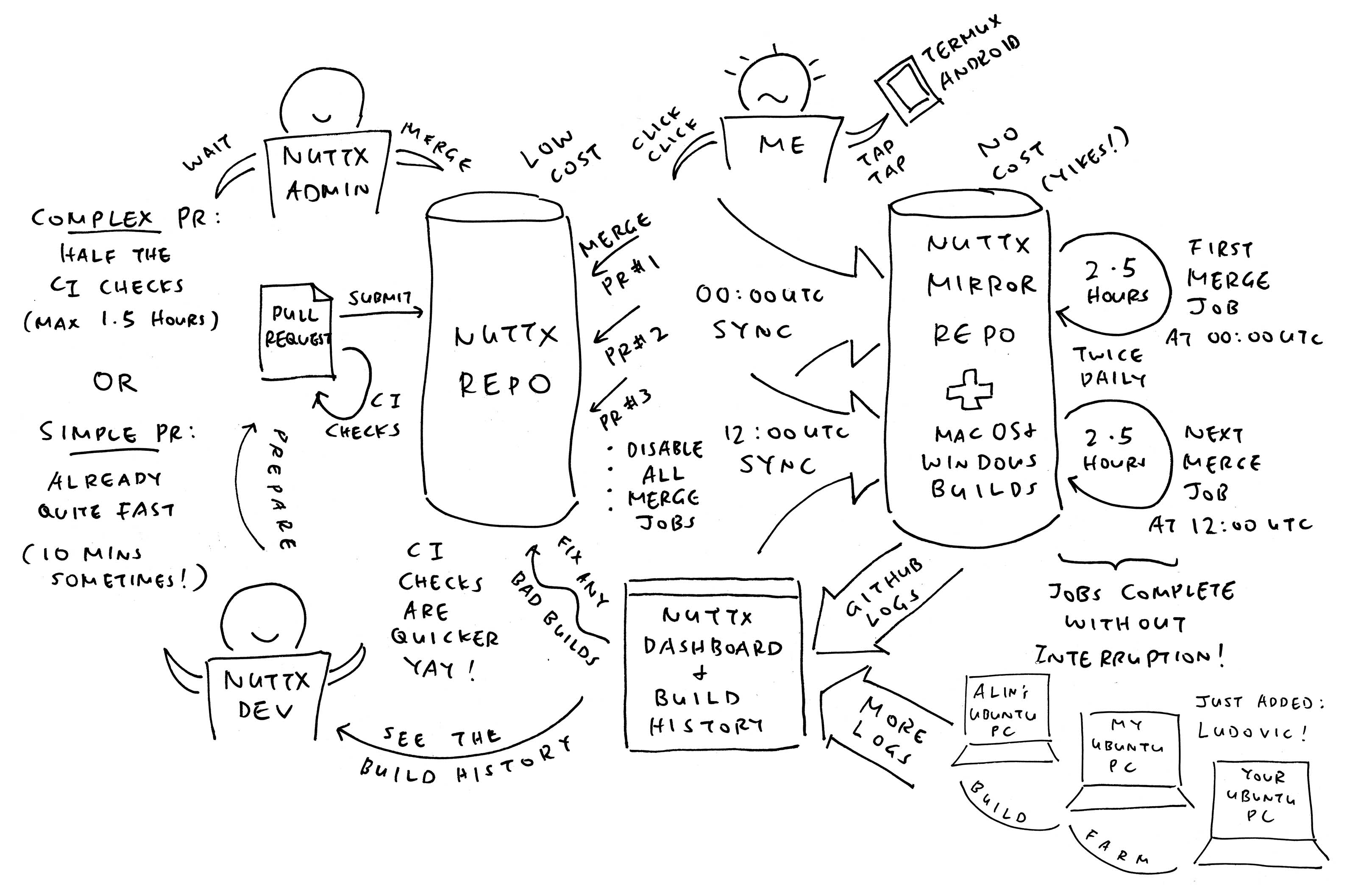

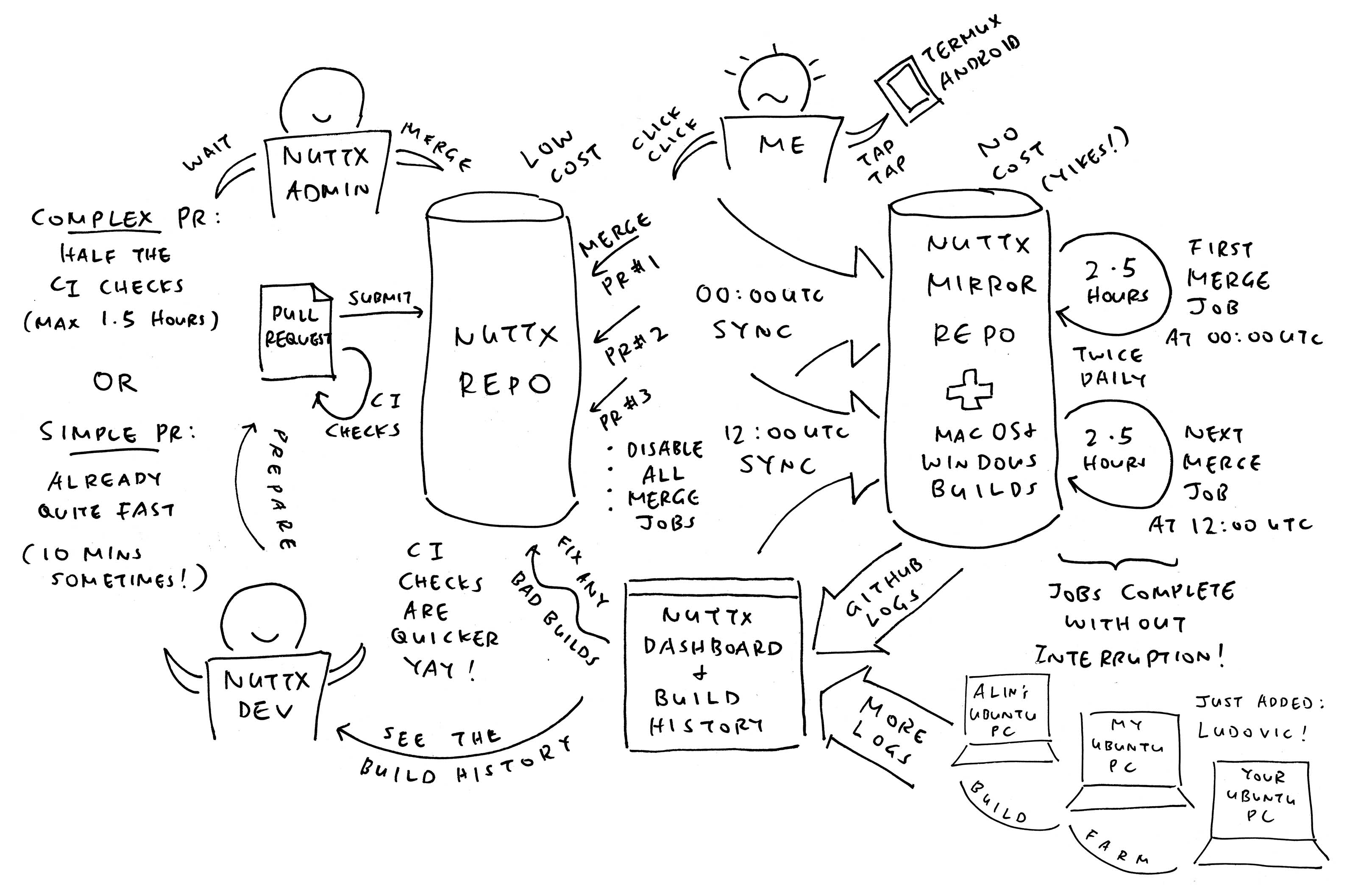

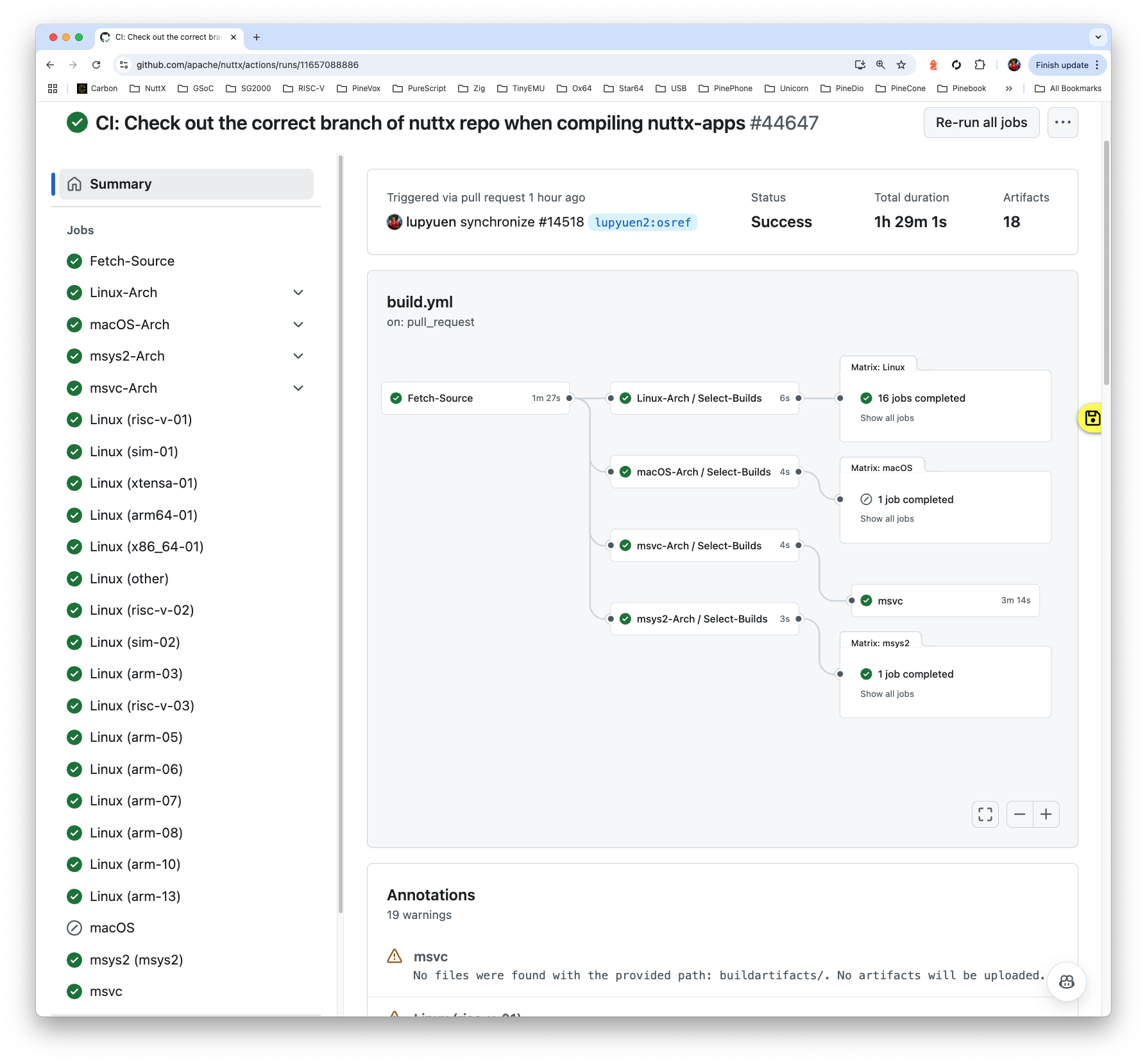

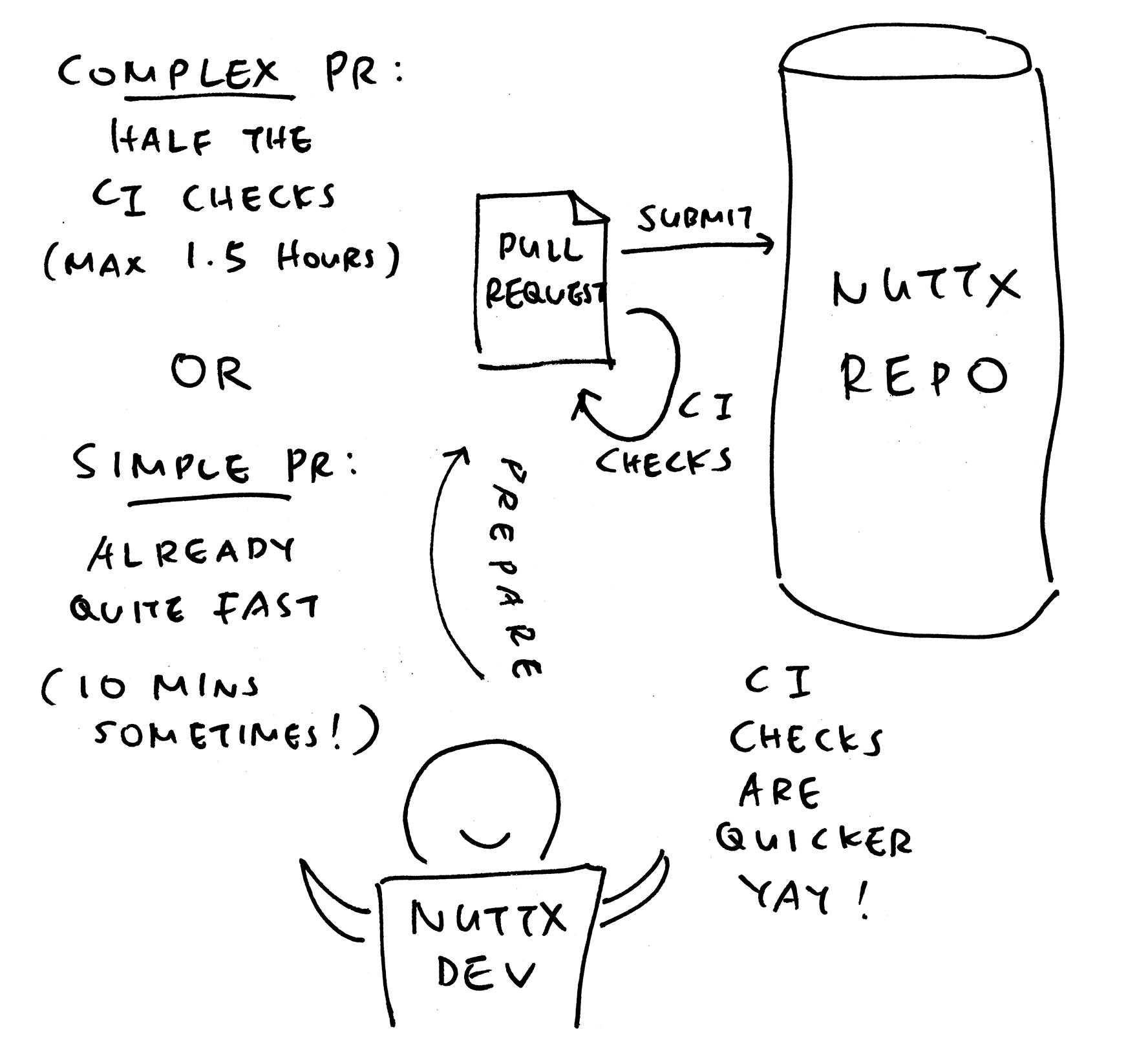

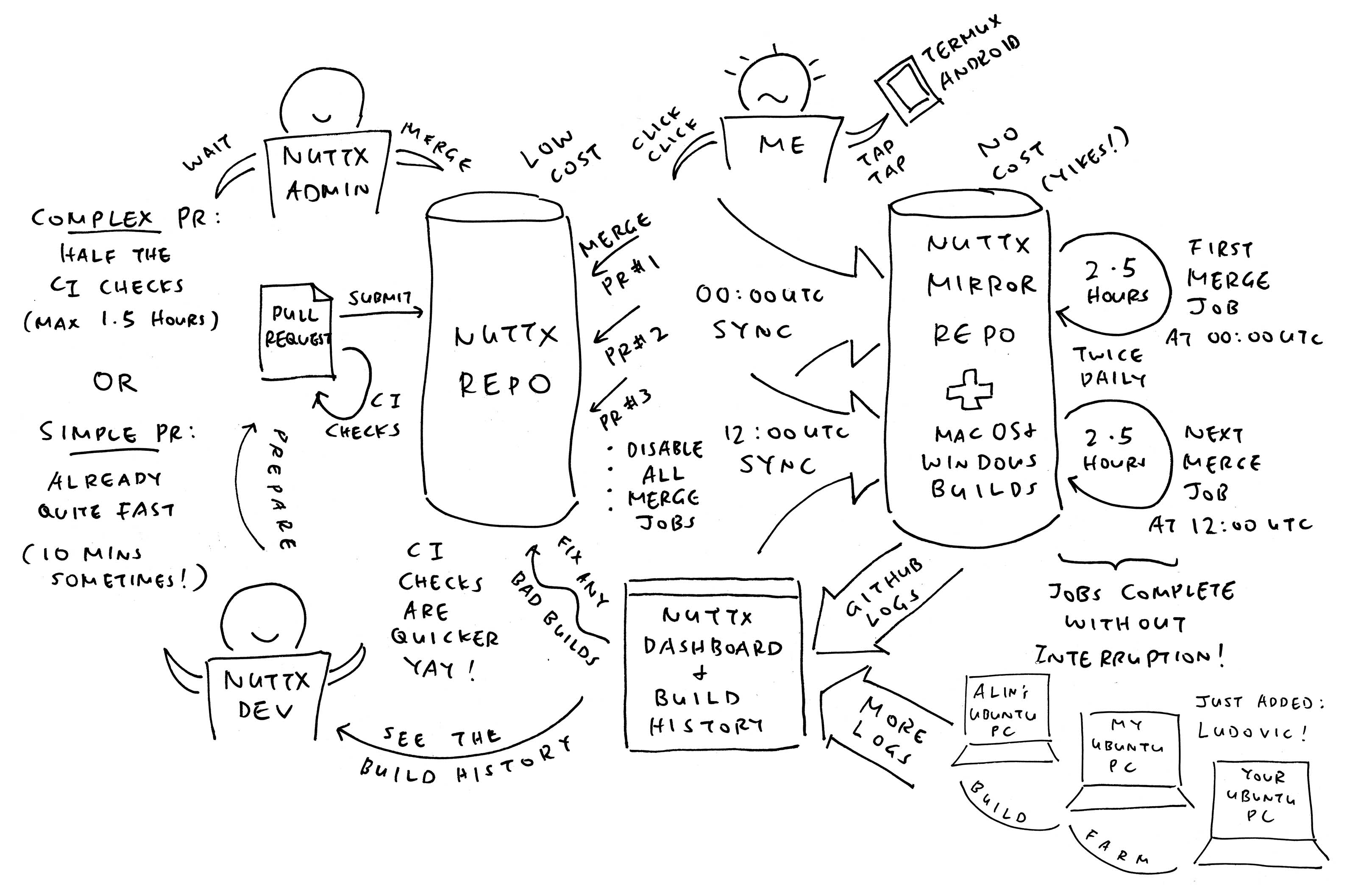

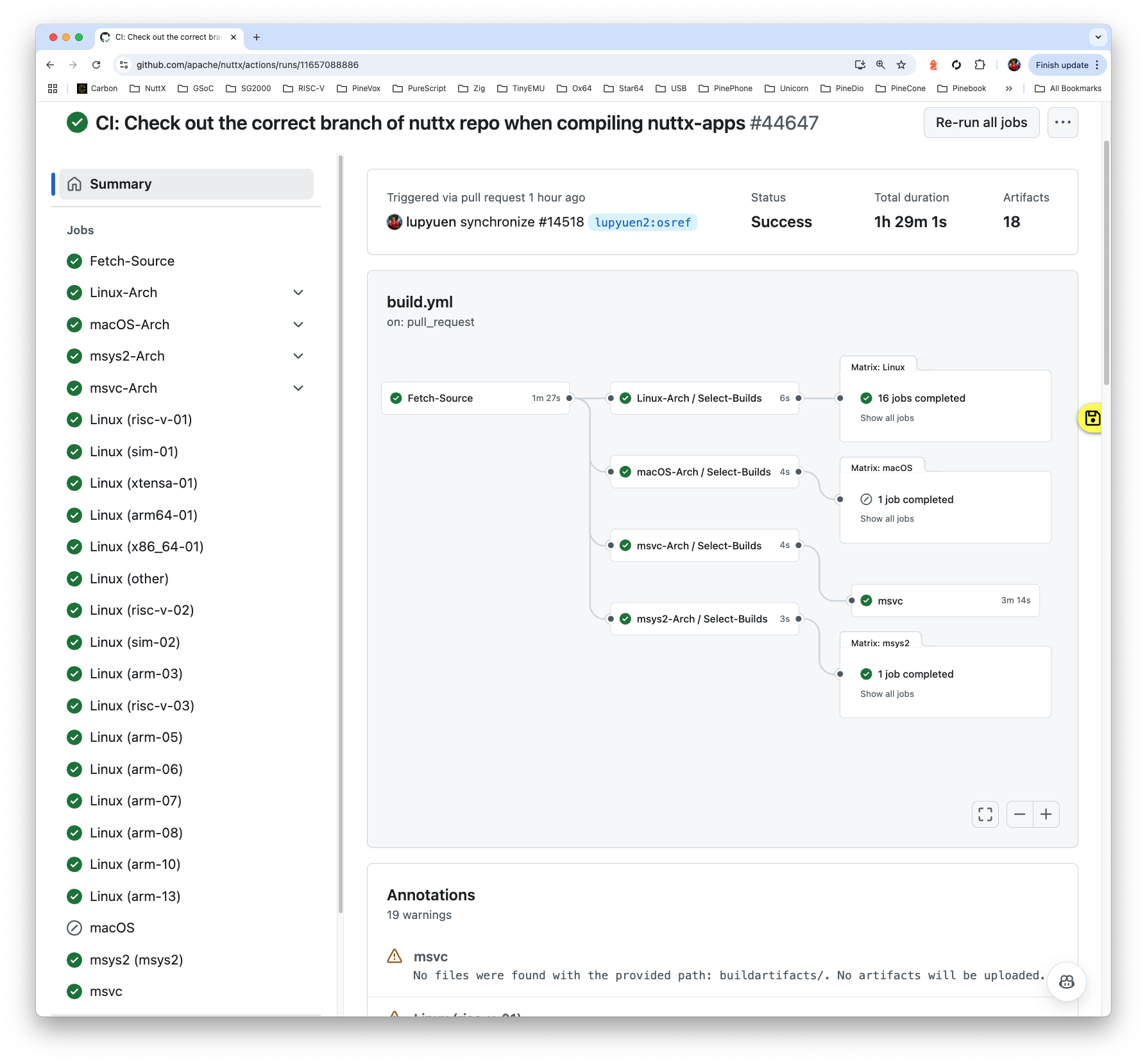

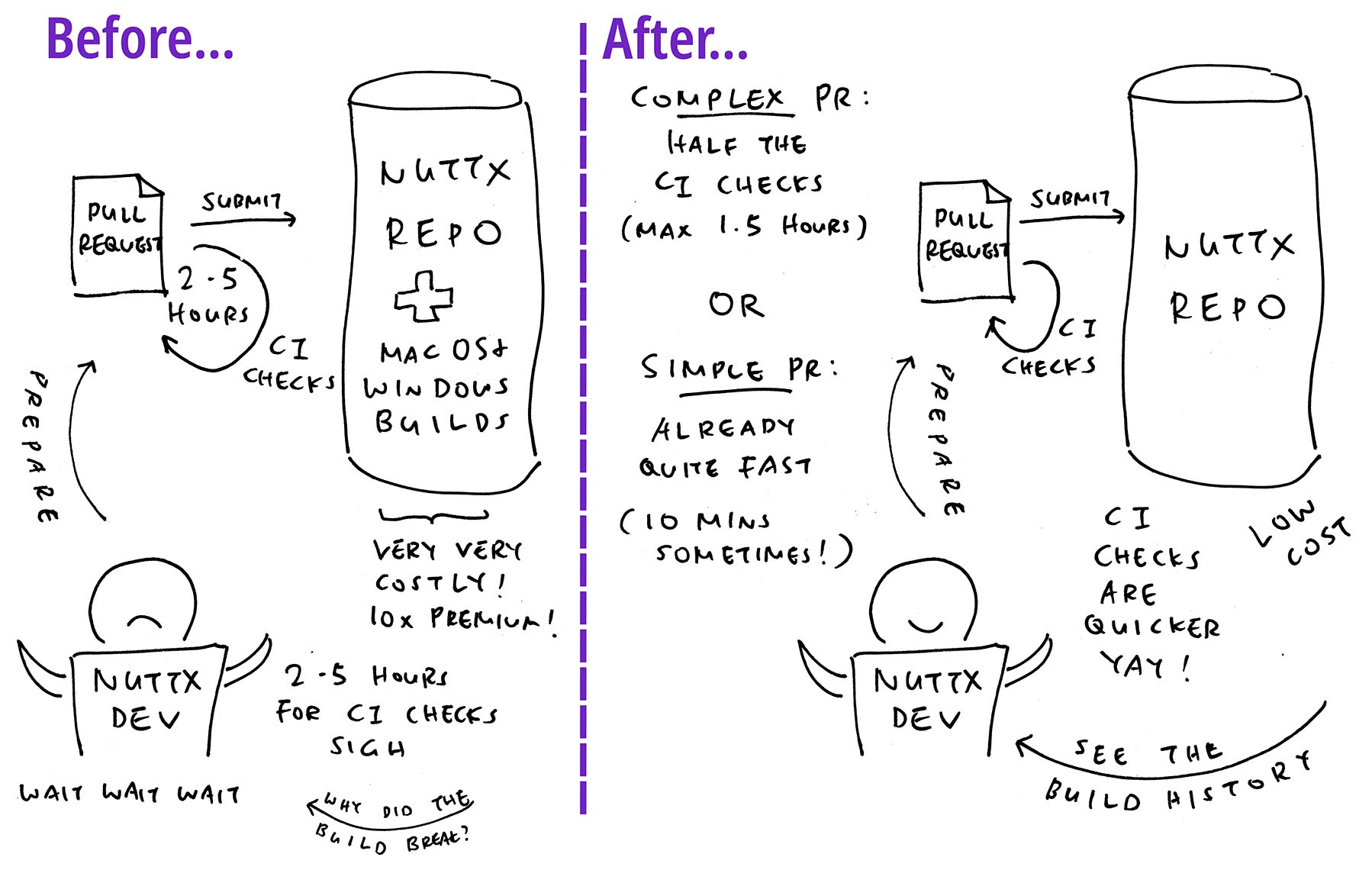

Previously: Our developers waited 2.5 Hours for a Pull Request to be checked. Now we wait at most 1.5 Hours! (Pic below)

This article explains everything we did in the (Semi-Chaotic) Two Weeks for Apache NuttX RTOS…

Shut down the macOS and Windows Builds, revive them in a different form

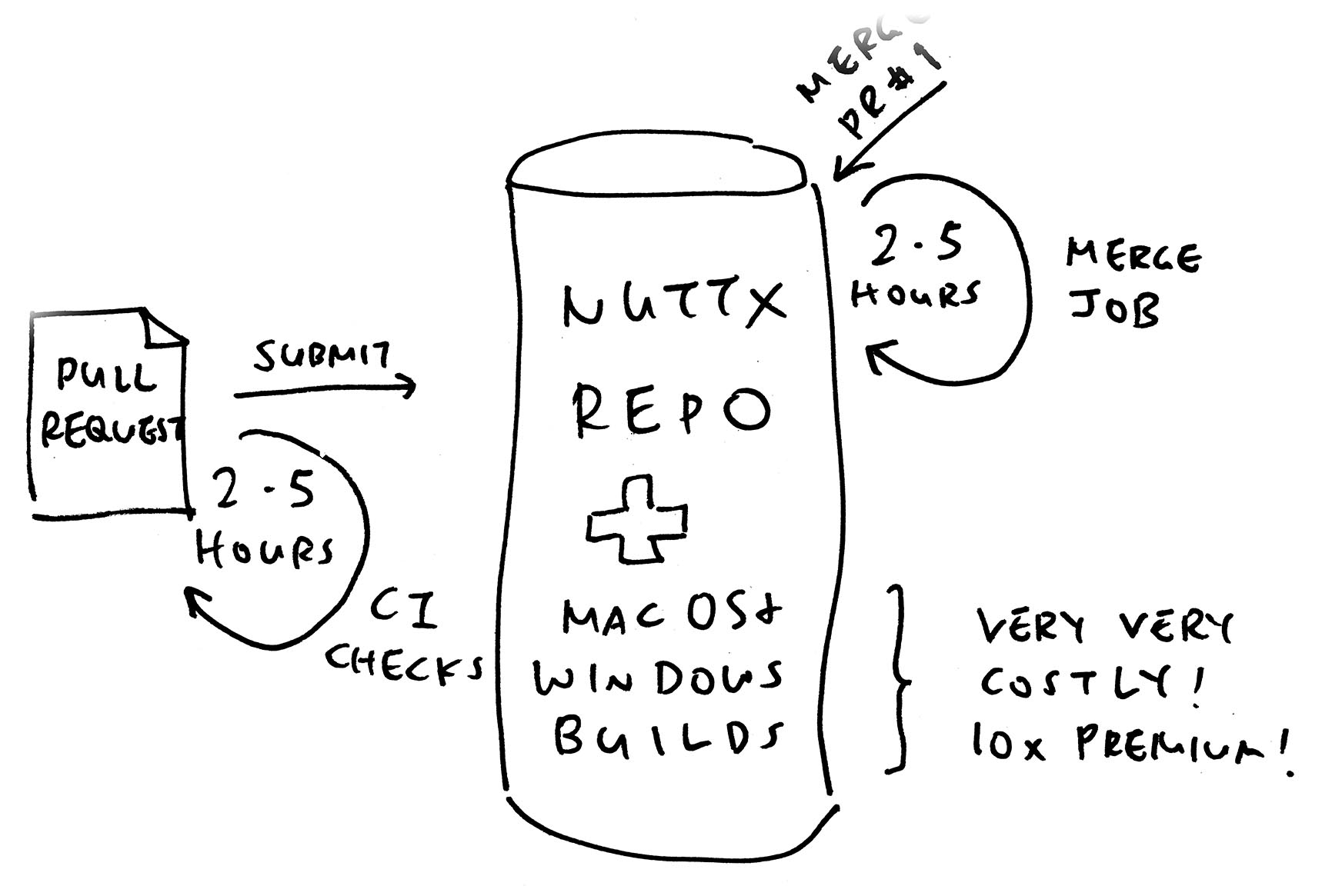

Merge Jobs are super costly, we moved them to the NuttX Mirror Repo

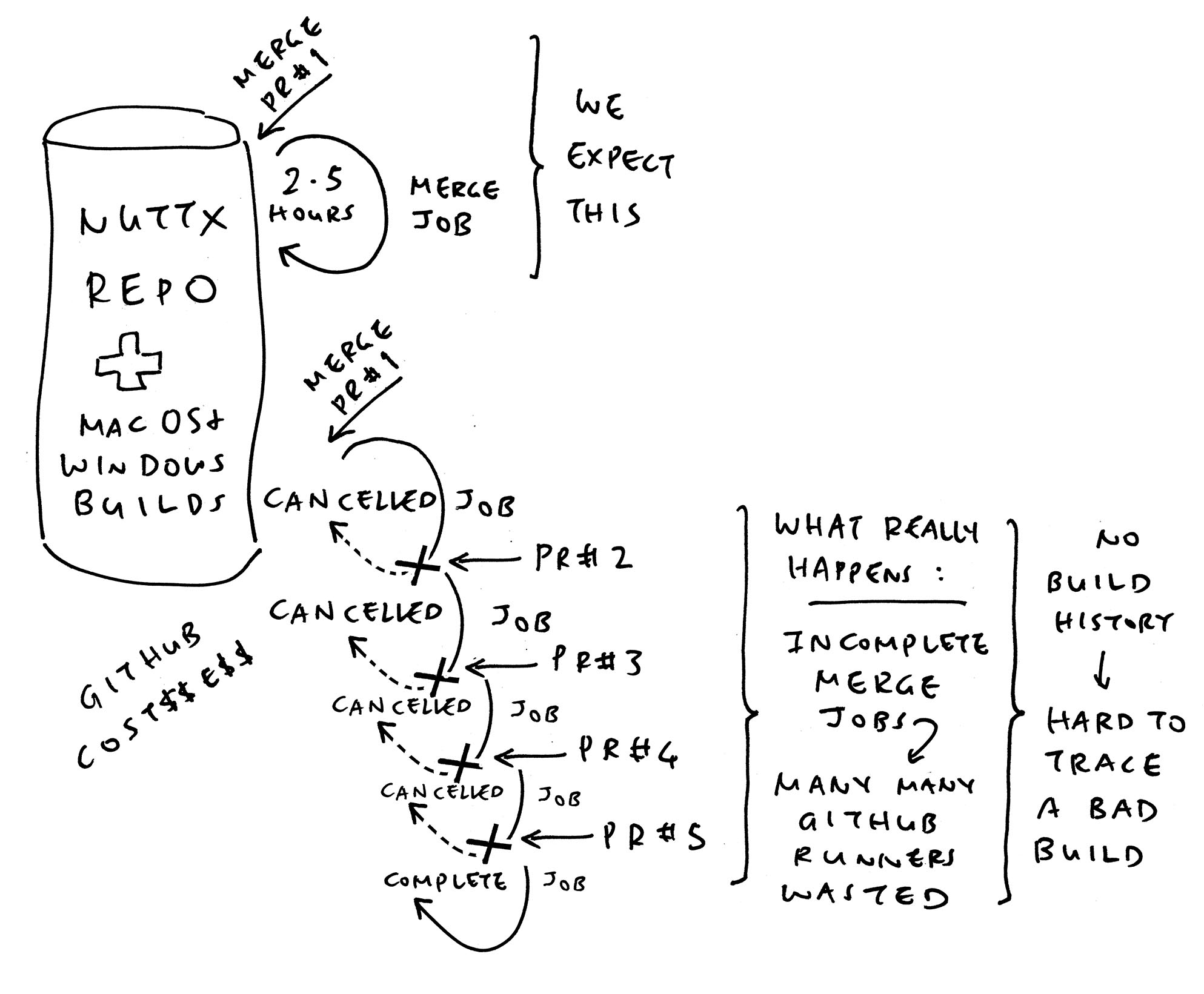

We Halved the CI Checks for Complex PRs. (Continuous Integration)

Simple PRs are already quite fast. (Sometimes 12 Mins!)

Coding the Build Rules for our CI Workflow, monitoring our CI Servers 24 x 7

We can’t run All CI Checks, but NuttX Devs can help ourselves!

We had an ultimatum to reduce (drastically) our usage of GitHub Actions. Or our Continuous Integration would Halt Totally in Two Weeks!

After deliberating overnight: We swiftly activated our rescue plan…

Submit / Update a Complex PR:

CI Workflow shall trigger only Half the Jobs for CI Checks.

(A Complex PR affects All Architectures: Arm32, Arm64, RISC-V, Xtensa, etc. Will reduce GitHub Cost by 32%)

Merge a Complex PR:

CI Workflow shall Run All Jobs like before.

(arm-01 … arm-14, risc-v, xtensa, etc)

Simple PRs:

No change. Thus Simple Arm32 PRs shall build only arm-01 … arm-14.

(A Simple PR concerns only One Single Architecture: Arm32 OR Arm64 OR RISC-V etc)

After Merging Any PR:

Merge Jobs shall run at NuttX Mirror Repo.

(Instead of OG Repo apache/nuttx)

Two Scheduled Merge Jobs:

Daily at 00:00 UTC and 12:00 UTC.

(No more On-Demand Merge Jobs)

macOS and Windows Jobs:

Shall be Totally Disabled.

(Until we find a way to manage their costs)

We have reasons for doing these, backed by solid data…

We studied the CI Jobs for the previous day…

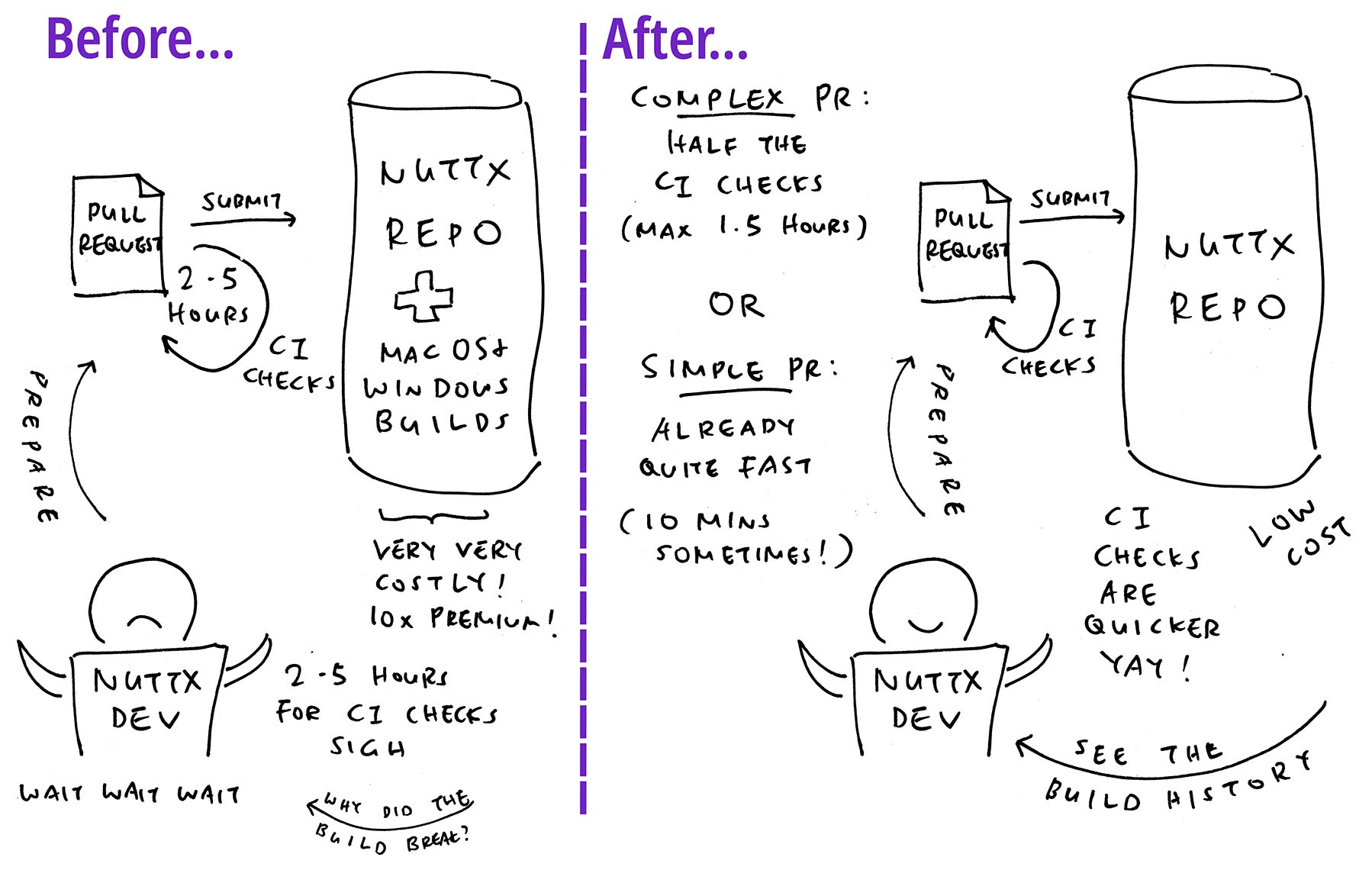

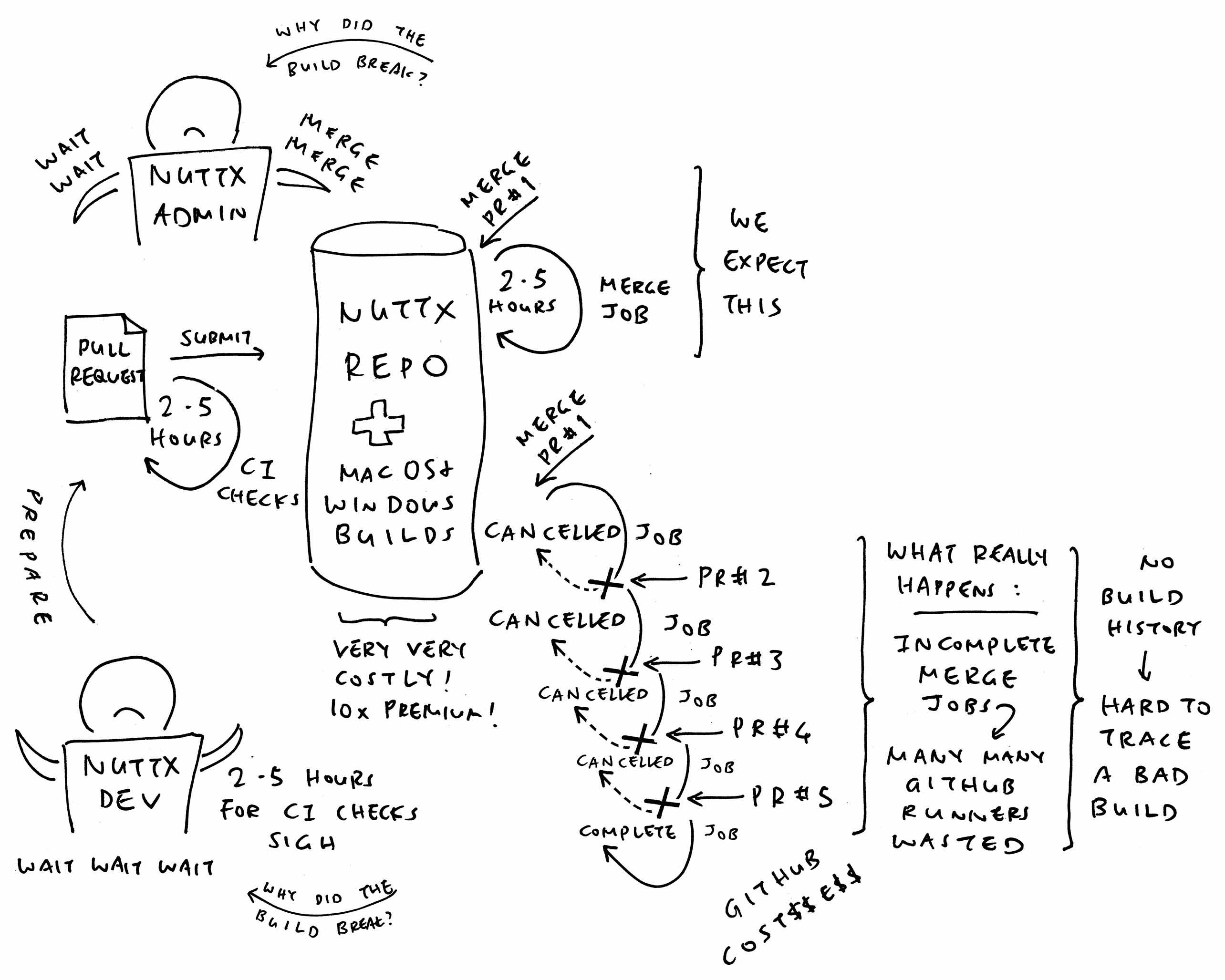

Many CI Jobs were Incomplete: We wasted GitHub Runners on Merge Jobs that were eventually superseded and cancelled (pic above, we’ll come back to this)

Scheduled Merge Jobs will reduce wastage of GitHub Runners, since most Merge Jobs didn’t complete. Only One Merge Job completed on that day…

When we Halve the CI Jobs: We reduce the wastage of GitHub Runners…

This analysis was super helpful for complying with the ASF Policy for GitHub Actions! Next we follow through…

Quitting the macOS Builds? That’s horribly drastic!

Yeah sorry we can’t enable macOS Builds in NuttX Repo right now…

macOS Runners cost 10 times as much as Linux Runners.

To enable One macOS Job: We need to disable 10 Linux Jobs! Which is not feasible.

Our macOS Jobs are in an untidy state right now, showing many many warnings.

We need someone familiar with Intel Macs to clean up the macOS Jobs.

(See the macOS Log)

That’s why we moved the macOS Builds to the NuttX Mirror Repo, which won’t be charged to NuttX Project.

Can we still prevent breakage of ALL Builds? Linux, macOS AND Windows?

Nope this is simply impossible…

In the good old days: We were using far too many GitHub Runners.

This is not sustainable, we don’t have the budget to do all the CI Checks we used to.

Hence we should expect some breakage.

We should be prepared to backtrack and figure out which PR broke the build.

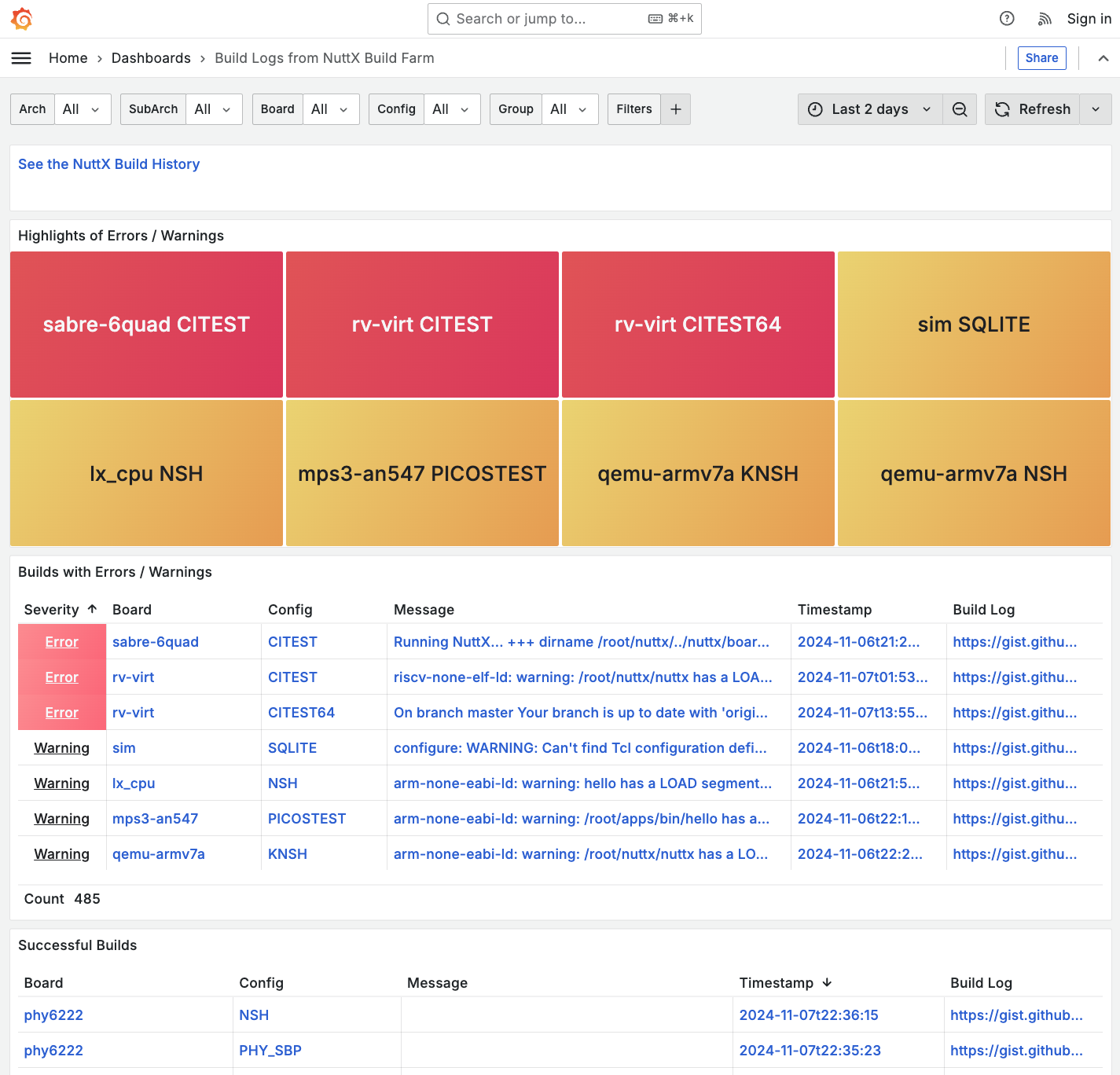

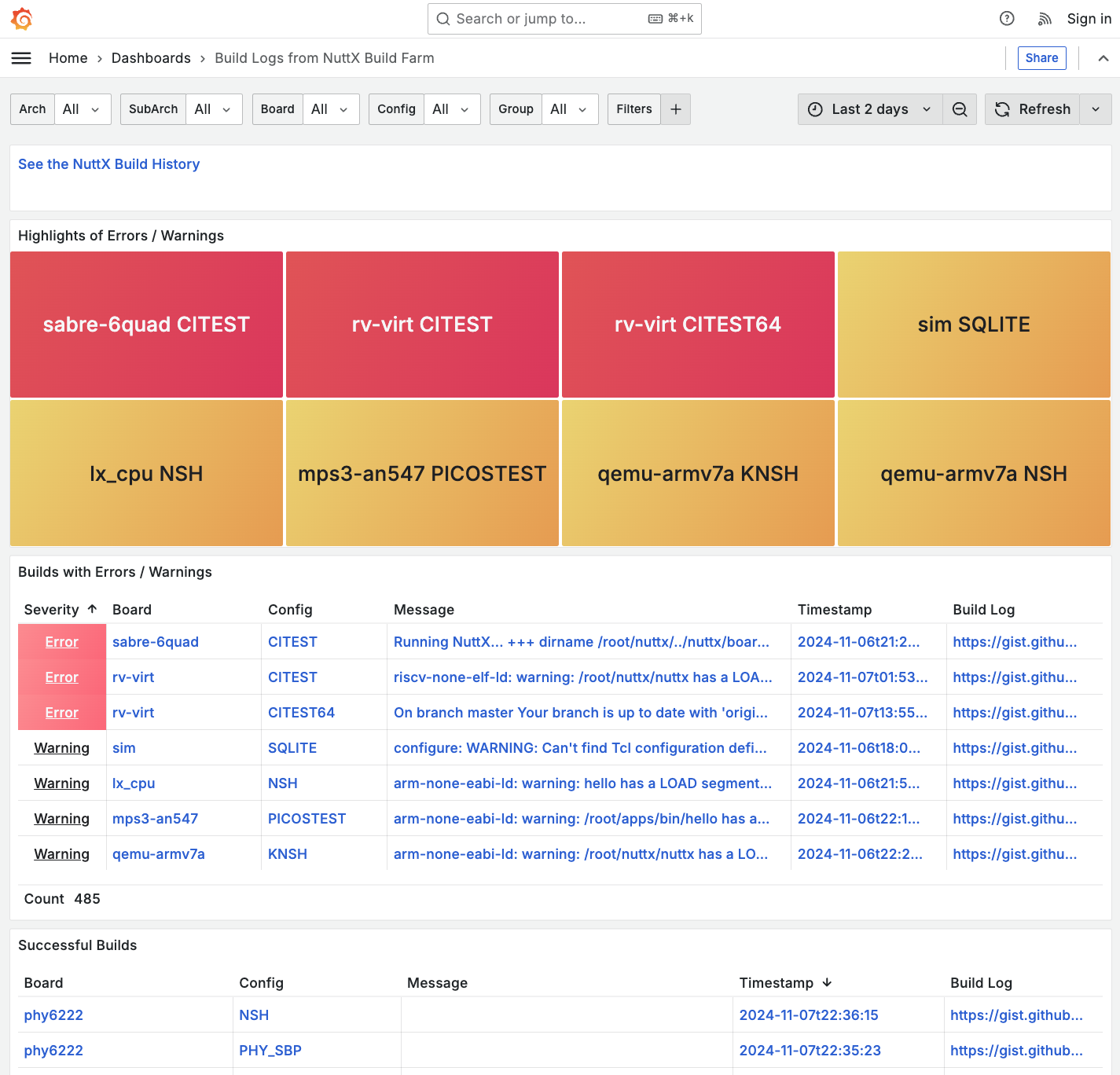

That’s why we have tools like NuttX Dashboard (pic above), to detect breakage earlier.

(Without depending on GitHub CI)

Remember to show Love and Respect for NuttX Devs!

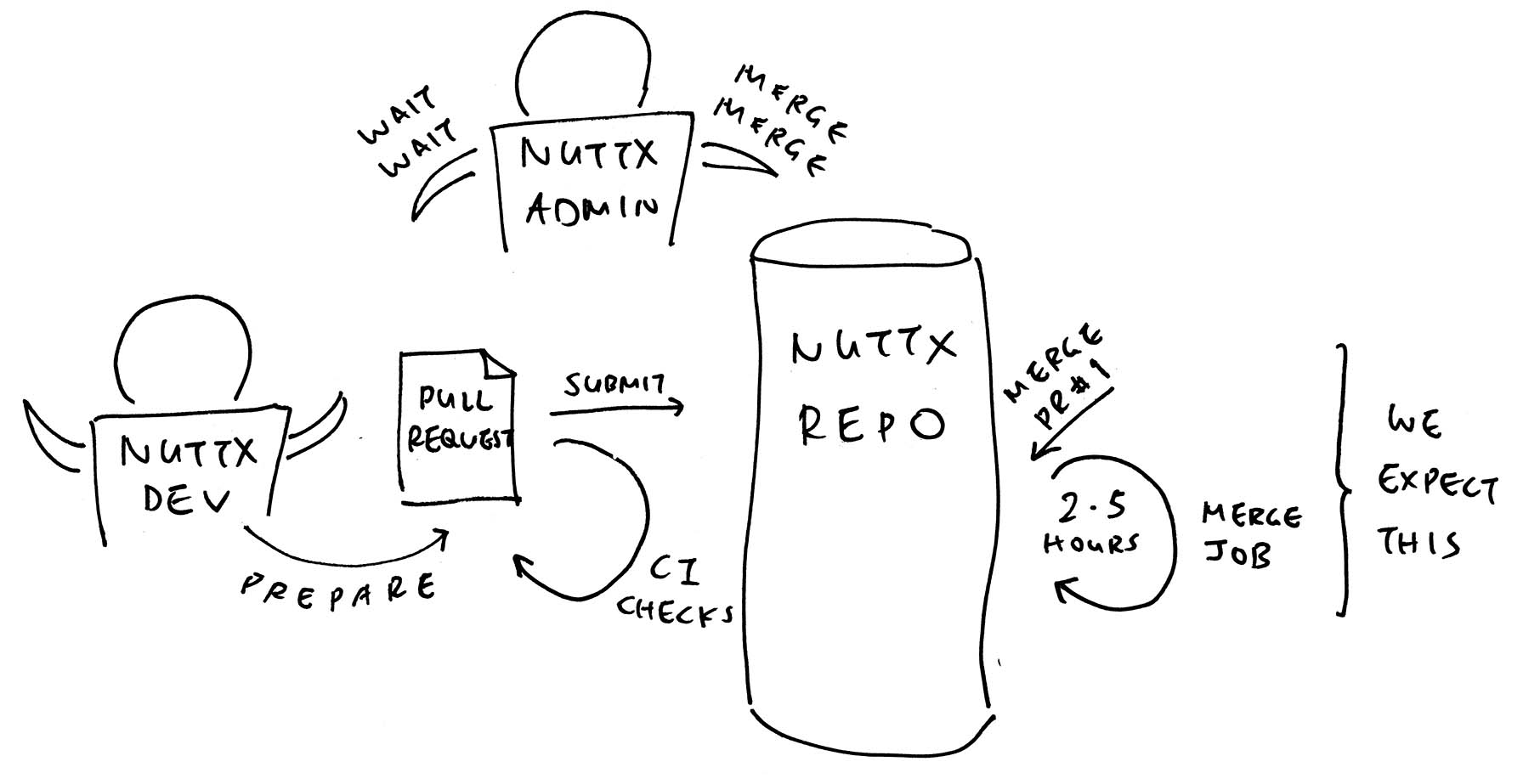

Previously we waited 2.5 Hours for All CI Checks. Now we wait at most 1.5 Hours, let’s stick to this.

What about the Windows Builds?

Recently we re-enabled the Windows Builds, because they’re not as costly as macOS Builds.

We’ll continue to monitor our GitHub Costs. And shut down the Windows Builds if necessary.

(Windows Runners are twice the cost of Linux Runners)
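The cost ratios quoted in this article (macOS = 10x Linux, Windows = 2x Linux) allow a quick back-of-envelope comparison. Here is a minimal sketch, assuming those ratios stay fixed. The function name `linux_equivalent_minutes` is hypothetical and not part of any NuttX script…

```bash
#!/usr/bin/env bash
# Convert runner minutes on a given OS into Linux-equivalent minutes.
# Assumption: the cost ratios quoted in this article (Linux 1x, Windows 2x, macOS 10x).
linux_equivalent_minutes() {
  local os=$1 minutes=$2
  case "$os" in
    linux)   echo $(( minutes * 1 )) ;;
    windows) echo $(( minutes * 2 )) ;;
    macos)   echo $(( minutes * 10 )) ;;
    *)       echo "unknown os: $os" >&2; return 1 ;;
  esac
}

# A 30-minute macOS job is charged like 300 minutes of Linux jobs
linux_equivalent_minutes macos 30
```

With these ratios, one macOS job displaces ten Linux jobs of the same duration, while one Windows job displaces only two.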

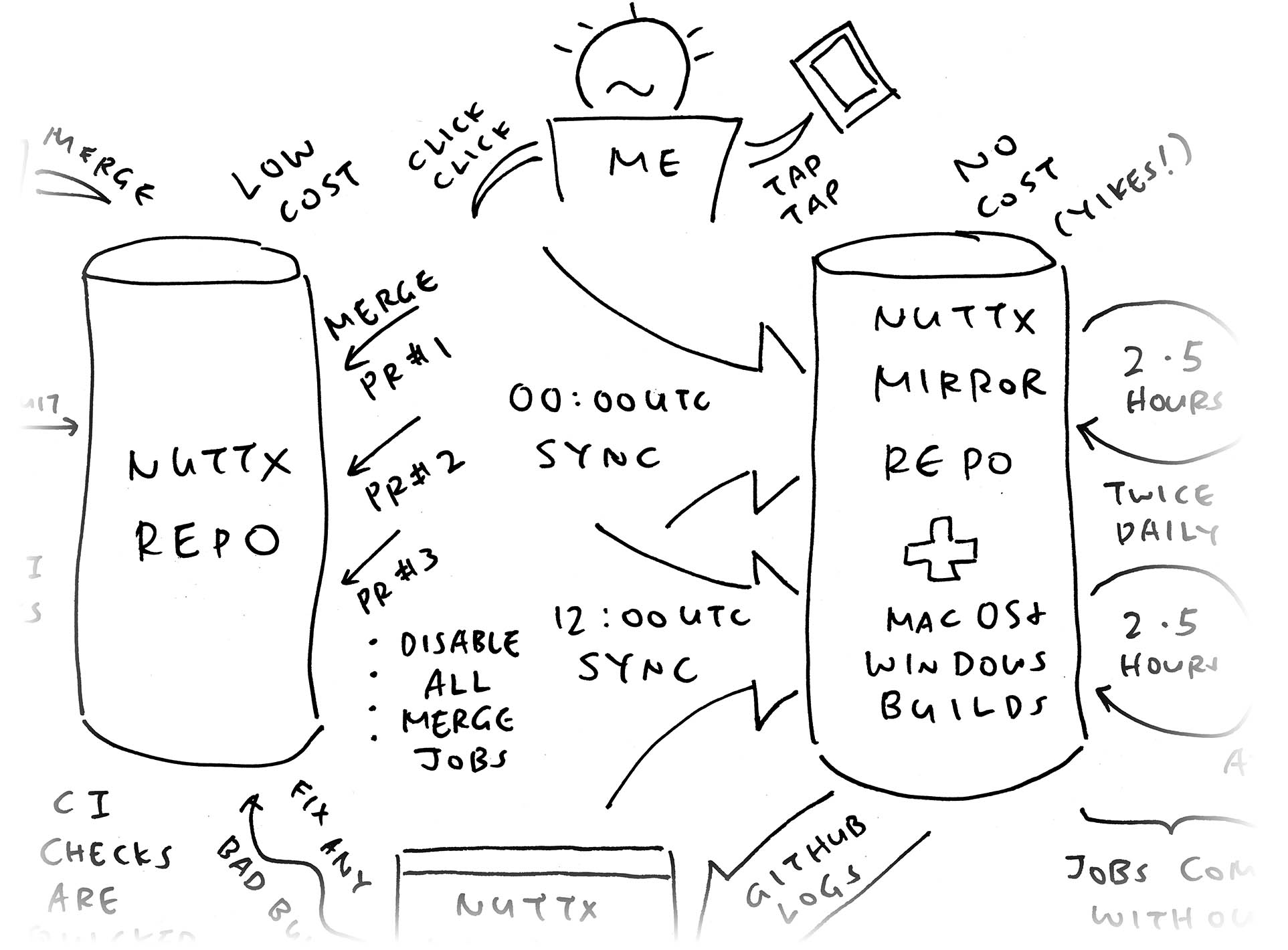

What are Merge Jobs? Why move them?

Suppose our NuttX Admin Merges a PR. (Pic above)

Normally our CI Workflow will trigger a Merge Job, to verify that everything compiles OK after Merging the PR.

Which means ploughing through 34 Sub-Jobs (2.5 elapsed hours) across All Architectures: Arm32, Arm64, RISC-V, Xtensa, macOS, Windows, …

This is extremely costly, hence we decided to trigger them as Scheduled Merge Jobs. I trigger them Twice Daily: 00:00 UTC and 12:00 UTC.

Is there a problem?

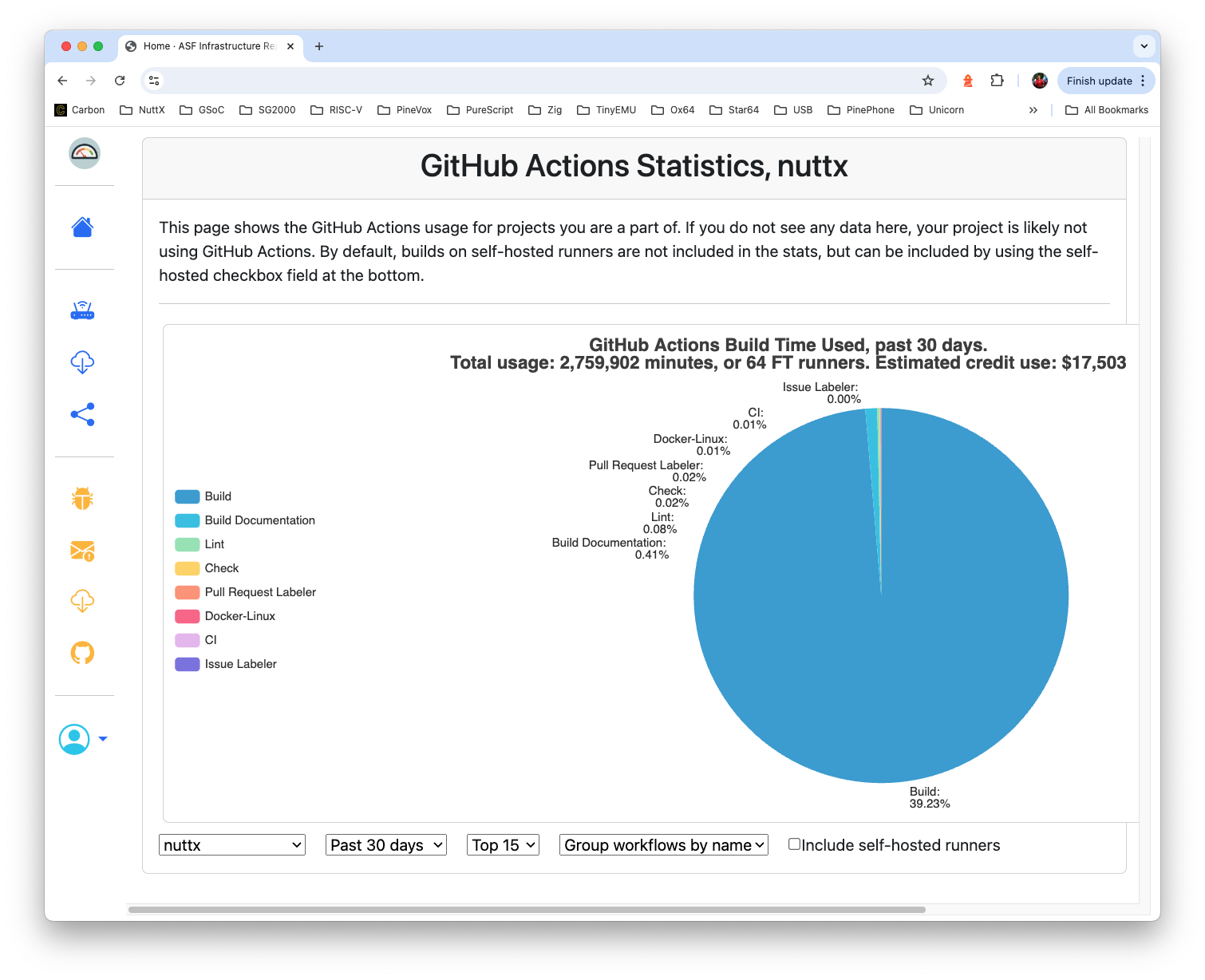

We spent One-Third of our GitHub Runner Minutes on Scheduled Merge Jobs! (Pic above)

Our CI Data shows that the Scheduled Merge Job kept getting disrupted by Newer Merged PRs. (Pic below)

And when we restart a Scheduled Merge Job, we waste precious GitHub Minutes.

(101 GitHub Hours for one single Scheduled Merge Job!)

Our Merge Jobs are overwhelming!

Yep this is clearly not sustainable. We moved the Scheduled Merge Jobs to a new NuttX Mirror Repo. (Pic below)

Where the Merge Jobs can run free without disruption.

(In an Unpaid GitHub Org Account, not charged to NuttX Project)

What about the Old Merge Jobs?

Initially I ran a script that will quickly Cancel any Merge Jobs that appear in NuttX Repo and NuttX Apps.

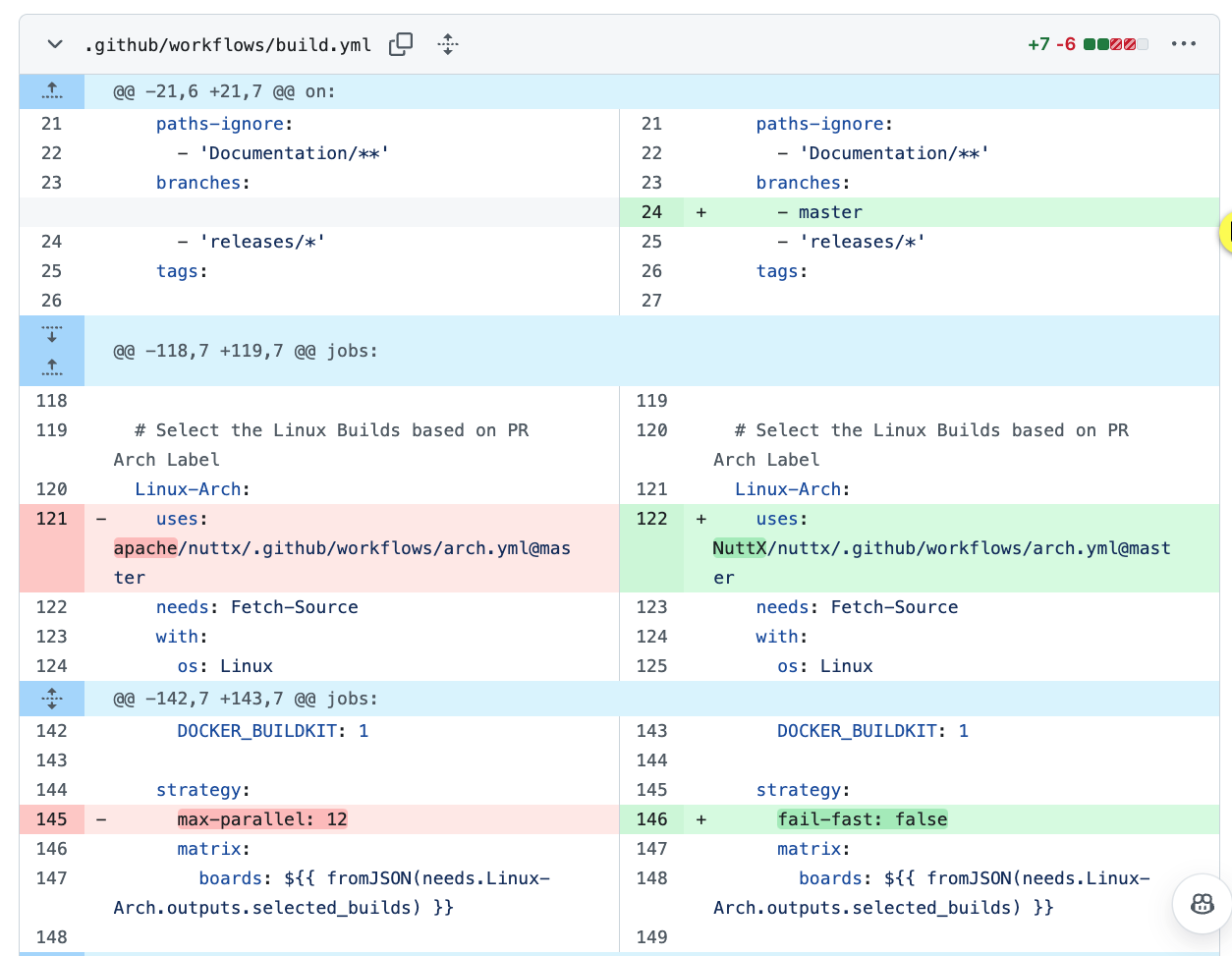

Eventually we disabled the Merge Jobs for NuttX Repo.

(Restoring Auto-Build on Sync)

How to trigger the Scheduled Merge Job?

Every Day at 00:00 UTC and 12:00 UTC: I do this…

Browse to the NuttX Mirror Repo

Click “Sync Fork > Discard Commits”

Which will Sync our Mirror Repo based on the Upstream NuttX Repo

Run this script to enable the macOS Builds: enable-macos-windows.sh

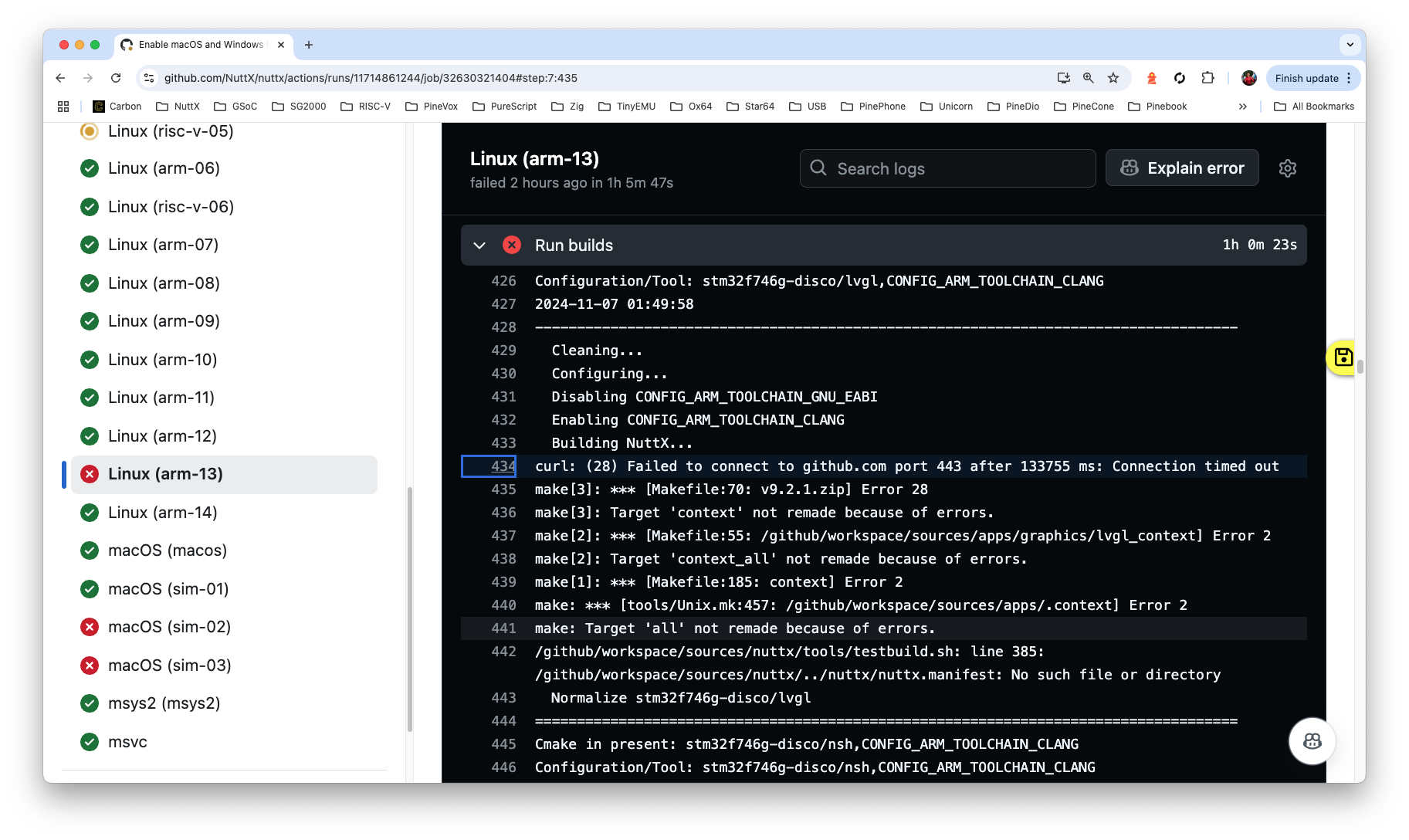

Which will also Disable Fail-Fast and grind through all builds. (Regardless of error, pic below)

And Remove Max Parallel to use unlimited concurrent runners. (Because it’s free! Pic below)

If the Merge Job fails with a Mystifying Network Timeout: I restart the Failed Sub-Jobs. (CI Test might overrun)

Wait for the Merge Job to complete. Then Ingest the GitHub Logs (like an Amoeba) into our NuttX Dashboard. (Next article)

Track down any bugs that Fail the Merge Job.

Is it really OK to Disable the Merge Jobs? What about Docs and Docker Builds?

Docker Builds: When Dockerfile is updated, it will trigger the CI Workflow docker_linux.yml. Which is not affected by this new setup, and will continue to execute. (Exactly like before)

Documentation: When the docs are updated, they are published to NuttX Website via the CI Workflow main.yml from the NuttX Website repo (scheduled daily). Which is not affected by our grand plan.

Release Branch: Merging a PR to the Release Branch will still run the PR Merge Job (exactly like before). Release Branch shall always be verified through Complete CI Checks.

Isn’t this cheating? Offloading to a Free GitHub Account?

Yeah that’s why we need a NuttX Build Farm. (Details below)

(Update: Right now we run 100% of CI Jobs for Complex PRs)

One-Third of our GitHub Runner Minutes were spent on Merge Jobs. What about the rest?

Two-Thirds of our GitHub Runner Minutes were spent on validating New and Updated PRs.

Hence we’re skipping Half the CI Checks for Complex PRs.

(A Complex PR affects All Architectures: Arm32, Arm64, RISC-V, Xtensa, etc)

Which CI Checks did we select?

Today we start only these CI Checks when submitting or updating a Complex PR (pic above)

Why did we choose these CI Checks?

We selected the CI Checks above because they validate NuttX Builds on Popular Boards (and for special tests)

| Target Group | Board / Test |
|---|---|
| arm-01 | Sony Spresense (TODO) |
| arm-05 | Nordic nRF52 |
| arm-06 | Raspberry Pi RP2040 |
| arm-07 | Microchip SAMD |
| arm-08, 10, 13 | STM32 |
| risc-v-02, 03 | ESP32-C3, C6, H2 |
| sim-01, 02 | CI Test, Matter |

We might rotate the list above to get better CI Coverage.

(See the Complete List of CI Builds)

(Sorry we can’t run xtensa-02 and arm-01)
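The selection can be sketched as a small pure-Bash filter. This is only an illustration: the patterns are copied from the jq expressions in the Build Rules (arch.yml) shown later in this article, and the helper `is_excluded` is a hypothetical name, not a function in the NuttX Repo…

```bash
#!/usr/bin/env bash
# Return 0 (true) if a target group is skipped when a Complex PR is
# submitted or updated. Patterns mirror the jq test() calls in arch.yml.
is_excluded() {
  local pattern
  for pattern in 'arm-0[1249]' 'arm-1[124-9]' 'risc-v-0[4-9]' 'sim-0[3-9]' 'xtensa-0[2-9]'; do
    if [[ "$1" =~ $pattern ]]; then
      return 0
    fi
  done
  return 1
}

# Try a few target groups
for target in arm-01 arm-05 arm-13 risc-v-02 risc-v-04 sim-03 xtensa-01; do
  if is_excluded "$target"; then
    echo "skip  $target"
  else
    echo "build $target"
  fi
done
```

Rotating the list would then be a matter of editing the patterns.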

What about Simple PRs?

A Simple PR concerns only One Single Architecture: Arm32 OR Arm64 OR RISC-V OR Xtensa etc.

When we create a Simple PR for Arm32: It will trigger only the CI Checks for arm-01 … arm-14.

Which will complete earlier than a Complex PR.

(x86_64 Devs are the happiest. Their PRs complete in 10 Mins!)

Sounds awfully complicated. How did we code the rules?

Indeed! The Build Rules are explained here…

Hitting the Target Metrics in 2 weeks… Everyone needs to help out right?

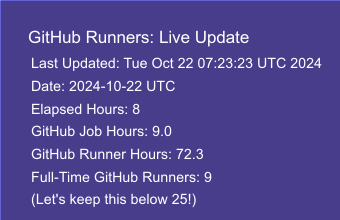

Our quota is 25 Full-Time GitHub Runners per day.

We published our own Live Metric for Full-Time Runners, for everyone to track…

Date: We compute the Full-Time Runners only for Today’s Date (UTC)

Elapsed Hours: Number of hours elapsed since 00:00 UTC

GitHub Job Hours: Elapsed Duration of all GitHub Jobs at NuttX Repo and NuttX Apps. (Cancelled / Completed / Failed)

This data is available only AFTER the job has been Cancelled / Completed / Failed. (Might have lagged by 1.5 hours)

But this is the Elapsed Job Duration. It doesn’t say that we’re running 8 Sub-Jobs in parallel. That’s why we need…

GitHub Runner Hours: Number of GitHub Runners * Job Duration. Effectively the Chargeable Minutes by GitHub.

We compute this as 8 * GitHub Job Hours. This is averaged from past data.

(Remember: One GitHub Runner will run One Single Sub-Job, like arm-01)

Full-Time GitHub Runners: Equals GitHub Runner Hours / Elapsed Hours.

It means “How many GitHub Runners, running Full-Time, in order to consume the GitHub Runner Hours”.

(We should keep this below 25 per day, per week, per month)
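The arithmetic behind the metric can be sketched in a few lines. This is not the actual compute-github-runners.sh, just a minimal illustration of the formula, assuming the averaged factor of 8 Runner Hours per Job Hour. The function name `full_time_runners` and the sample numbers are made up…

```bash
#!/usr/bin/env bash
# Estimate Full-Time GitHub Runners from today's GitHub Job Hours.
# Assumption: 1 GitHub Job Hour ~ 8 GitHub Runner Hours (averaged from past data).
#   $1 = GitHub Job Hours so far today
#   $2 = Elapsed Hours since 00:00 UTC (must be greater than zero)
full_time_runners() {
  local job_hours=$1 elapsed_hours=$2
  awk -v j="$job_hours" -v e="$elapsed_hours" \
    'BEGIN { printf "%.1f\n", (8 * j) / e }'
}

# Made-up example: 30 Job Hours recorded by 12:00 UTC
full_time_runners 30 12
```

In this made-up example the result is 20 Full-Time Runners, which would be within the quota of 25.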

We publish the data every 15 minutes…

compute-github-runners.sh calls GitHub API to add up the Elapsed Duration of All Completed GitHub Jobs for today.

Then it extrapolates the Number of Full-Time GitHub Runners.

(1 GitHub Job Hour roughly equals 8 GitHub Runner Hours, which equals 8 Full-Time Runners Per Hour)

run.sh calls the script above and renders the Full-Time GitHub Runners as a PNG.

(Thanks to ImageMagick)

compute-github-runners2.sh: Is the Linux Version of the above macOS Script.

(But less accurate, due to BC Rounding)

Next comes the Watchmen…

(Can we run All CI Checks for All PRs?)

Doesn’t sound right that an Unpaid Volunteer is monitoring our CI Servers 24 x 7 … But someone’s gotta do it! 👍

This runs on a 4K TV (Xiaomi 65-inch) all day, all night…

On Overnight Hikes: I check my phone at every water break…

If something goes wrong?

We have GitHub Scripts for Termux Android. Remember to “pkg install gh” and set GITHUB_TOKEN…

enable-macos-windows2.sh: Enable the macOS Builds in the NuttX Mirror Repo

compute-github-runners2.sh: Compute the number of Full-Time GitHub Runners for the day (less accurately than macOS version)

kill-push-master.sh: Cancel all Merge Jobs in NuttX Repo and NuttX Apps

It’s past Diwali and Halloween and Elections… Our CI Servers are still alive. We made it yay! 🎉

Within Two Weeks: We squashed our GitHub Actions spending from $4,900 (weekly) down to $890…

“Monthly Bill” for GitHub Actions used to be $18K…

Presently our Monthly Bill is $9.8K. Slashed by half (almost) and still dropping! Thank you everyone for making this happen! 🙏

(At Mid Nov 2024: Monthly Bill is now $3.1K 🎉)

Bonus Love & Respect: Previously our devs waited 2.5 Hours for a Pull Request to be checked. Now we wait at most 1.5 Hours!

Everything is hunky dory?

Trusting a Single Provider for Continuous Integration is a terrible thing. We got plenty more to do…

Become more resilient and self-sufficient with Our Own Build Farm

(Away from GitHub)

Analyse our Build Logs with Our Own Tools

(Instead of GitHub)

Excellent Initiative by Mateusz Szafoni: We Merge Multiple Targets into One Target

(And cut the Build Time)

🙏🙏🙏 Please join Your Ubuntu PC to our Build Farm! 🙏🙏🙏

But our Merge Jobs are still running in a Free Account?

We learnt a Painful Lesson today: Freebies Won’t Last Forever!

We should probably maintain an official Paid GitHub Org Account to execute our Merge Jobs…

New GitHub Org shall be sponsored by our generous Stakeholder Companies

(Espressif, Sony, Xiaomi, …)

New GitHub Org shall be maintained by a Paid Employee of our Stakeholder Companies

(Instead of an Unpaid Volunteer)

Which means clicking Twice Per Day to trigger the Scheduled Merge Jobs

(My fingers are tired, pic above)

And restarting the Failed Merge Jobs

Track down any bugs that Fail the Merge Job

Maintaining the NuttX Build Farm and NuttX Dashboard

New GitHub Org shall host the Official Downloads of NuttX Compiled Binaries

(For upcoming Board Testing Farm)

New GitHub Org will eventually Offload CI Checks from our NuttX Repos

(Maybe do macOS CI Checks for PRs)

Next Article: We’ll chat about NuttX Dashboard. And how we made it with Grafana and Prometheus…

“Continuous Integration Dashboard for Apache NuttX RTOS (Prometheus and Grafana)”

“Failing a Continuous Integration Test for Apache NuttX RTOS (QEMU RISC-V)”

“(Experimental) Mastodon Server for Apache NuttX Continuous Integration (macOS Rancher Desktop)”

“Test Bot for Pull Requests … Tested on Real Hardware (Apache NuttX RTOS / Oz64 SG2000 RISC-V SBC)”

Many Thanks to the awesome NuttX Admins and NuttX Devs! I couldn’t have survived the two chaotic and stressful weeks without your help. And my GitHub Sponsors, for sticking with me all these years.

Got a question, comment or suggestion? Create an Issue or submit a Pull Request here…

To run the Complete Suite of CI Checks on every PR… We could use Self-Hosted GitHub Runners?

Yep I tested Self-Hosted GitHub Runners, I wrote about my experience here: “Continuous Integration for Apache NuttX RTOS”

Self-Hosted GitHub Runners are actually quite complex to set up. And the machine needs to be properly secured, in case any unauthorised code is pushed down from GitHub.

We don’t have budget to set up Virtual Machines maintained by IT Security Professionals for GitHub Runners anyway

NuttX Project might be a little too dependent on GitHub. Even if we had the funds, the ASF contract with GitHub won’t allow us to pay more for extra usage. So we’re trying alternatives.

Right now we’re testing a Community-Hosted Build Farm based on Ubuntu PCs and macOS: “Your very own Build Farm for Apache NuttX RTOS”

Before submitting a PR to NuttX: How to check our PR thoroughly?

Yep it’s super important to thoroughly test our PRs before submitting to NuttX.

But NuttX Project doesn’t have the budget to run all CI Checks for New PRs. The onus is on us to test our PRs (without depending on the CI Workflow)

1. Run the CI Builds ourselves with Docker Engine

2. Or run the CI Builds with GitHub Actions

(1) might be slower, depending on our PC. With (2) we don’t need to worry about Wasting GitHub Runners, so long as the CI Workflow runs entirely in our own personal repo, before submitting to NuttX Repo.

Here are the instructions…

What if our PR fails the check, caused by Another PR?

We wait for the Other PR to be patched…

Set our PR to Draft Mode

Keep checking the NuttX Dashboard (above)

Wait patiently for the Red Error Boxes to disappear

Rebase our PR with the Master Branch

Our PR should pass the CI Check. Set our PR to Ready for Review.

Otherwise we might miss a Serious Bug.

When NuttX merges our PR, the Merge Job won’t run until 00:00 UTC or 12:00 UTC. How can we be really sure that our PR was merged correctly?

Let’s create a GitHub Org (at no cost), fork the NuttX Repo and trigger the CI Workflow. (Which won’t charge any extra GitHub Runner Minutes to NuttX Project!)

This will probably work if our CI Servers ever go dark.

Something super strange about Network Timeouts (pic above) in our CI Docker Workflows at GitHub Actions. Here’s an example…

First Run fails while downloading something from GitHub…

```text
Configuration/Tool: imxrt1050-evk/libcxxtest,CONFIG_ARM_TOOLCHAIN_GNU_EABI
curl: (28) Failed to connect to github.com port 443 after 134188 ms: Connection timed out
make[1]: *** [libcxx.defs:28: libcxx-17.0.6.src.tar.xz] Error 28
```

Second Run fails again, while downloading NimBLE from GitHub…

```text
Configuration/Tool: nucleo-wb55rg/nimble,CONFIG_ARM_TOOLCHAIN_GNU_EABI
curl: (28) Failed to connect to github.com port 443 after 134619 ms: Connection timed out
make[2]: *** [Makefile:55: /github/workspace/sources/apps/wireless/bluetooth/nimble_context] Error 2
```

Third Run succeeds. Why do we keep seeing these errors: GitHub Actions with Docker, can’t connect to GitHub itself?

Is there a Concurrent Connection Limit for GitHub HTTPS Connections?

We see 4 Concurrent Connections to GitHub HTTPS…

The Fifth Connection failed: arm-02 at 00:42:52

Should we use a Caching Proxy Server for curl?

```bash
$ export https_proxy=https://1.2.3.4:1234
$ curl https://github.com/...
```

Is something misconfigured in our Docker Image?

But the exact same Docker Image runs fine on our own Build Farm. It doesn’t show any errors.

Is GitHub Actions starting our Docker Container with the wrong MTU (Network Packet Size)? 🤔

Meanwhile I’m running a script to Restart Failed Jobs on our NuttX Mirror Repo: restart-failed-job.sh

These Timeout Errors will cost us precious GitHub Minutes. The remaining jobs get killed, and restarting these killed jobs from scratch will consume extra GitHub Minutes. (The restart below costs us 6 extra GitHub Runner Hours)

How do we Retry these Timeout Errors?

Can we have Restartable Builds?

Doesn’t quite make sense to kill everything and rebuild from scratch (arm6, arm7, riscv7) just because one job failed (xtensa2)

Or xtensa2 should wait for others to finish, before it declares a timeout and croaks?

```text
Configuration/Tool: esp32s2-kaluga-1/lvgl_st7789
curl: Failed to connect to github.com port 443 after 133994 ms:
Connection timed out
```

Initially we created the Build Rules for CI Workflow to solve these problems that we observed in Sep 2024…

NuttX Devs need to wait (2.5 hours) for the CI Build to complete Across all Architectures (Arm32, Arm64, RISC-V, Xtensa)…

Even though we’re modifying a Single Architecture.

We’re using too many GitHub Runners and Build Minutes, exceeding the ASF Policy for GitHub Actions

Our usage of GitHub Runners is going up ($12K per month)

We need to stay within the ASF Budget for GitHub Runners ($8.2K per month)

What if CI could build only the Modified Architecture?

Right now most of our CI Builds are taking 2.5 hours.

Can we complete the build within 1 hour, when we Create / Modify a Simple PR?

This section explains how we coded the Build Rules. Which were mighty helpful for cutting costs in Nov 2024.

We propose a Partial Solution, based on the Arch and Board Labels (recently added to CI)…

We target only the Simple PRs: One Arch Label + One Board Label + One Size Label.

Like “Arch: risc-v, Board: risc-v, Size: XS”

If “Arch: arm” is the only non-size label, then we build only arm-01, arm-02, …

Same for “Board: arm”

If Arch and Board Labels are both present: They must be the same

Similar rules for RISC-V, Simulator, x86_64 and Xtensa

Simple PR + Docs is still considered a Simple PR (so devs won’t be penalised for adding docs)

In our Build Rules: This is how we fetch the Arch Labels from a PR. And identify the PR as Arm, Arm64, RISC-V or Xtensa: arch.yml

```yaml
# Get the Arch for the PR: arm, arm64, risc-v, xtensa, ...
- name: Get arch
  id: get-arch
  run: |

    # If PR is Not Created or Modified: Build all targets
    pr=${{github.event.pull_request.number}}
    if [[ "$pr" == "" ]]; then
      echo "Not a Created or Modified PR, will build all targets"
      exit
    fi

    # Ignore the Label "Area: Documentation", because it won't affect the Build Targets
    query='.labels | map(select(.name != "Area: Documentation")) | '
    select_name='.[].name'
    select_length='length'

    # Get the Labels for the PR: "Arch: risc-v \n Board: risc-v \n Size: XS"
    # If GitHub CLI Fails: Build all targets
    # (The jq query is quoted so that its spaces are not split into separate arguments)
    labels=$(gh pr view $pr --repo $GITHUB_REPOSITORY --json labels --jq "$query$select_name" || echo "")
    numlabels=$(gh pr view $pr --repo $GITHUB_REPOSITORY --json labels --jq "$query$select_length" || echo "")
    echo "numlabels=$numlabels" | tee -a $GITHUB_OUTPUT

    # Identify the Size, Arch and Board Labels
    if [[ "$labels" == *"Size: "* ]]; then
      echo 'labels_contain_size=1' | tee -a $GITHUB_OUTPUT
    fi
    if [[ "$labels" == *"Arch: "* ]]; then
      echo 'labels_contain_arch=1' | tee -a $GITHUB_OUTPUT
    fi
    if [[ "$labels" == *"Board: "* ]]; then
      echo 'labels_contain_board=1' | tee -a $GITHUB_OUTPUT
    fi

    # Get the Arch Label
    # (arm64 is checked before arm, since "Arch: arm" also matches "Arch: arm64")
    if [[ "$labels" == *"Arch: arm64"* ]]; then
      echo 'arch_contains_arm64=1' | tee -a $GITHUB_OUTPUT
    elif [[ "$labels" == *"Arch: arm"* ]]; then
      echo 'arch_contains_arm=1' | tee -a $GITHUB_OUTPUT
    elif [[ "$labels" == *"Arch: risc-v"* ]]; then
      echo 'arch_contains_riscv=1' | tee -a $GITHUB_OUTPUT
    elif [[ "$labels" == *"Arch: simulator"* ]]; then
      echo 'arch_contains_sim=1' | tee -a $GITHUB_OUTPUT
    elif [[ "$labels" == *"Arch: x86_64"* ]]; then
      echo 'arch_contains_x86_64=1' | tee -a $GITHUB_OUTPUT
    elif [[ "$labels" == *"Arch: xtensa"* ]]; then
      echo 'arch_contains_xtensa=1' | tee -a $GITHUB_OUTPUT
    fi

    # Get the Board Label
    if [[ "$labels" == *"Board: arm64"* ]]; then
      echo 'board_contains_arm64=1' | tee -a $GITHUB_OUTPUT
    elif [[ "$labels" == *"Board: arm"* ]]; then
      echo 'board_contains_arm=1' | tee -a $GITHUB_OUTPUT
    elif [[ "$labels" == *"Board: risc-v"* ]]; then
      echo 'board_contains_riscv=1' | tee -a $GITHUB_OUTPUT
    elif [[ "$labels" == *"Board: simulator"* ]]; then
      echo 'board_contains_sim=1' | tee -a $GITHUB_OUTPUT
    elif [[ "$labels" == *"Board: x86_64"* ]]; then
      echo 'board_contains_x86_64=1' | tee -a $GITHUB_OUTPUT
    elif [[ "$labels" == *"Board: xtensa"* ]]; then
      echo 'board_contains_xtensa=1' | tee -a $GITHUB_OUTPUT
    fi

  env:
    GH_TOKEN: ${{ secrets.GITHUB_TOKEN }}
```

Why “ || echo ""”? That’s because if the GitHub CLI gh fails for any reason, we shall build all targets.

This ensures that our CI Workflow won’t get disrupted due to errors in GitHub CLI.
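Two details of the label handling are easy to miss: the fallback to an empty string, and the order of the Arch checks. Here is a minimal standalone sketch of both. The helper `detect_arch` is hypothetical (the real workflow writes flags to GITHUB_OUTPUT instead), and `false` merely stands in for a failing gh call…

```bash
#!/usr/bin/env bash
# Map PR labels (one per line) to an arch name. Prints nothing if there
# is no recognised Arch Label, which means "build all targets".
# "Arch: arm64" is tested before "Arch: arm", because the glob
# *"Arch: arm"* would also match "Arch: arm64".
detect_arch() {
  local labels=$1
  if [[ "$labels" == *"Arch: arm64"* ]]; then
    echo arm64
  elif [[ "$labels" == *"Arch: arm"* ]]; then
    echo arm
  elif [[ "$labels" == *"Arch: risc-v"* ]]; then
    echo risc-v
  fi
}

# Simulate a failed label lookup: `false` stands in for a failing `gh` call
labels=$(false || echo "")
echo "arch='$(detect_arch "$labels")'"

# A normal lookup with two labels
echo "arch='$(detect_arch $'Arch: arm64\nSize: XS')'"
```

If the two Arch checks were swapped, an Arm64 PR would be classified as Arm32.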

We handle only Simple PRs: One Arch Label + One Board Label + One Size Label.

Like “Arch: risc-v, Board: risc-v, Size: XS”.

If it’s Not a Simple PR: We build everything. Like so: arch.yml

```bash
# inputs.boards is a JSON Array: ["arm-01", "risc-v-01", "xtensa-01", ...]
# We compact and remove the newlines
boards=$( echo '${{ inputs.boards }}' | jq --compact-output ".")
numboards=$( echo "$boards" | jq "length" )

# We consider only Simple PRs with:
# Arch + Size Labels Only
# Board + Size Labels Only
# Arch + Board + Size Labels Only
if [[ "$labels_contain_size" != "1" ]]; then
  echo "Size Label Missing, will build all targets"
  quit=1
elif [[ "$numlabels" == "2" && "$labels_contain_arch" == "1" ]]; then
  echo "Arch + Size Labels Only"
elif [[ "$numlabels" == "2" && "$labels_contain_board" == "1" ]]; then
  echo "Board + Size Labels Only"
elif [[ "$numlabels" == "3" && "$labels_contain_arch" == "1" && "$labels_contain_board" == "1" ]]; then
  # Arch and Board must be the same
  if [[
    "$arch_contains_arm" != "$board_contains_arm" ||
    "$arch_contains_arm64" != "$board_contains_arm64" ||
    "$arch_contains_riscv" != "$board_contains_riscv" ||
    "$arch_contains_sim" != "$board_contains_sim" ||
    "$arch_contains_x86_64" != "$board_contains_x86_64" ||
    "$arch_contains_xtensa" != "$board_contains_xtensa"
  ]]; then
    echo "Arch and Board are not the same, will build all targets"
    quit=1
  else
    echo "Arch + Board + Size Labels Only"
  fi
else
  echo "Not a Simple PR, will build all targets"
  quit=1
fi

# If Not a Simple PR: Build all targets
if [[ "$quit" == "1" ]]; then
  # If PR was Created or Modified: Exclude some boards
  pr=${{github.event.pull_request.number}}
  if [[ "$pr" != "" ]]; then
    echo "Excluding arm-0[1249], arm-1[124-9], risc-v-04..06, sim-03, xtensa-02"
    boards=$(
      echo '${{ inputs.boards }}' |
      jq --compact-output \
      'map(
        select(
          test("arm-0[1249]") == false and test("arm-1[124-9]") == false and
          test("risc-v-0[4-9]") == false and
          test("sim-0[3-9]") == false and
          test("xtensa-0[2-9]") == false
        )
      )'
    )
  fi
  echo "selected_builds=$boards" | tee -a $GITHUB_OUTPUT
  exit
fi
```

Suppose the PR says “Arch: arm” or “Board: arm”.

We filter out the builds that should be skipped (RISC-V, Xtensa, etc): arch.yml

```bash
# For every board
for (( i=0; i<numboards; i++ ))
do
  # Fetch the board
  board=$( echo "$boards" | jq ".[$i]" )
  skip_build=0

  # For "Arch / Board: arm": Build arm-01, arm-02, ...
  if [[ "$arch_contains_arm" == "1" || "$board_contains_arm" == "1" ]]; then
    if [[ "$board" != *"arm"* ]]; then
      skip_build=1
    fi

  # Omitted: Arm64, RISC-V, Simulator, x86_64, Xtensa
  ...

  # For Other Arch: Allow the build
  else
    echo Build by default: $board
  fi

  # Add the board to the selected builds
  if [[ "$skip_build" == "0" ]]; then
    echo Add $board to selected_builds
    if [[ "$selected_builds" == "" ]]; then
      selected_builds=$board
    else
      selected_builds=$selected_builds,$board
    fi
  fi
done

# Return the selected builds as JSON Array
# If Selected Builds is empty: Skip all builds
echo "selected_builds=[$selected_builds]" | tee -a $GITHUB_OUTPUT
if [[ "$selected_builds" == "" ]]; then
  echo "skip_all_builds=1" | tee -a $GITHUB_OUTPUT
fi
```

Earlier we saw the code in arch.yml Reusable Workflow that identifies the builds to be skipped.

The code above is called by build.yml (Build Workflow). Which will actually skip the builds: build.yml

```yaml
# Select the Linux Builds based on PR Arch Label
Linux-Arch:
  uses: apache/nuttx/.github/workflows/arch.yml@master
  needs: Fetch-Source
  with:
    os: Linux
    boards: |
      [
        "arm-01", "risc-v-01", "sim-01", "xtensa-01", "arm64-01", "x86_64-01", "other",
        "arm-02", "risc-v-02", "sim-02", "xtensa-02",
        "arm-03", "risc-v-03", "sim-03",
        "arm-04", "risc-v-04",
        "arm-05", "risc-v-05",
        "arm-06", "risc-v-06",
        "arm-07", "arm-08", "arm-09", "arm-10", "arm-11", "arm-12", "arm-13", "arm-14"
      ]

# Run the selected Linux Builds
Linux:
  needs: Linux-Arch
  if: ${{ needs.Linux-Arch.outputs.skip_all_builds != '1' }}
  runs-on: ubuntu-latest
  env:
    DOCKER_BUILDKIT: 1

  strategy:
    max-parallel: 12
    matrix:
      boards: ${{ fromJSON(needs.Linux-Arch.outputs.selected_builds) }}

  steps:
    ## Omitted: Run cibuild.sh on Linux
```

Why “needs: Fetch-Source”? That’s because the PR Labeler runs concurrently in the background.

When we add Fetch-Source as a Job Dependency: We give the PR Labeler sufficient time to run (1 min), before we read the PR Label in arch.yml.

We do the same for Arm64, RISC-V, Simulator, x86_64 and Xtensa: arch.yml

```bash
# For "Arch / Board: arm64": Build arm64-01
elif [[ "$arch_contains_arm64" == "1" || "$board_contains_arm64" == "1" ]]; then
  if [[ "$board" != *"arm64-"* ]]; then
    skip_build=1
  fi

# For "Arch / Board: risc-v": Build risc-v-01, risc-v-02, ...
elif [[ "$arch_contains_riscv" == "1" || "$board_contains_riscv" == "1" ]]; then
  if [[ "$board" != *"risc-v-"* ]]; then
    skip_build=1
  fi

# For "Arch / Board: simulator": Build sim-01, sim-02
elif [[ "$arch_contains_sim" == "1" || "$board_contains_sim" == "1" ]]; then
  if [[ "$board" != *"sim-"* ]]; then
    skip_build=1
  fi

# For "Arch / Board: x86_64": Build x86_64-01
elif [[ "$arch_contains_x86_64" == "1" || "$board_contains_x86_64" == "1" ]]; then
  if [[ "$board" != *"x86_64-"* ]]; then
    skip_build=1
  fi

# For "Arch / Board: xtensa": Build xtensa-01, xtensa-02
elif [[ "$arch_contains_xtensa" == "1" || "$board_contains_xtensa" == "1" ]]; then
  if [[ "$board" != *"xtensa-"* ]]; then
    skip_build=1
  fi
```

For Simple PRs and Complex PRs: We skip the macOS builds (macos, macos/sim-*) since these builds are costly: build.yml

(macOS builds will take 2 hours to complete due to the queueing for macOS Runners)

```yaml
# Select the macOS Builds based on PR Arch Label
macOS-Arch:
  uses: apache/nuttx/.github/workflows/arch.yml@master
  needs: Fetch-Source
  with:
    os: macOS
    boards: |
      ["macos", "sim-01", "sim-02", "sim-03"]

# Run the selected macOS Builds
macOS:
  permissions:
    contents: none
  runs-on: macos-13
  needs: macOS-Arch
  if: ${{ needs.macOS-Arch.outputs.skip_all_builds != '1' }}
  strategy:
    max-parallel: 2
    matrix:
      boards: ${{ fromJSON(needs.macOS-Arch.outputs.selected_builds) }}
  steps:
    ## Omitted: Run cibuild.sh on macOS
```

skip_all_builds for macOS will be set to 1: arch.yml

```yaml
# Select the Builds for the PR: arm-01, risc-v-01, xtensa-01, ...
- name: Select builds
  id: select-builds
  run: |

    # Skip all macOS Builds
    if [[ "${{ inputs.os }}" == "macOS" ]]; then
      echo "Skipping all macOS Builds"
      echo "skip_all_builds=1" | tee -a $GITHUB_OUTPUT
      exit
    fi
```

NuttX Devs shouldn’t be penalised for adding docs!

That’s why we ignore the label “Area: Documentation”. Which means that Simple PR + Docs is still a Simple PR.

And will skip the unnecessary builds: arch.yml

```bash
# Ignore the Label "Area: Documentation", because it won't affect the Build Targets
query='.labels | map(select(.name != "Area: Documentation")) | '
select_name='.[].name'
select_length='length'

# Get the Labels for the PR: "Arch: risc-v \n Board: risc-v \n Size: XS"
# If GitHub CLI Fails: Build all targets
# (The jq query is quoted so that its spaces are not split into separate arguments)
labels=$(gh pr view $pr --repo $GITHUB_REPOSITORY --json labels --jq "$query$select_name" || echo "")
numlabels=$(gh pr view $pr --repo $GITHUB_REPOSITORY --json labels --jq "$query$select_length" || echo "")
echo "numlabels=$numlabels" | tee -a $GITHUB_OUTPUT
```

Remember to sync build.yml and arch.yml from NuttX Repo to NuttX Apps!

How are they connected?

build.yml points to arch.yml for the Build Rules.

When we sync build.yml from NuttX Repo to NuttX Apps, we won’t need to remove the references to arch.yml.

We could make nuttx-apps/build.yml point to nuttx/arch.yml.

But that would make the CI Fragile: Changes to nuttx/arch.yml might cause nuttx-apps/build.yml to break.

That’s why we point nuttx-apps/build.yml to nuttx-apps/arch.yml instead.

But NuttX Apps don’t need Build Rules?

arch.yml is kinda redundant in NuttX Apps. Everything is a Complex PR!

I have difficulty keeping nuttx/build.yml and nuttx-apps/build.yml in sync. That’s why I simply copied over arch.yml as-is.

In future we could extend arch.yml with App-Specific Build Rules.

CI Build Workflow looks very different now?

Yeah our CI Build Workflow used to be simpler: build.yml

```yaml
Linux:
  needs: Fetch-Source
  strategy:
    matrix:
      boards: [arm-01, arm-02, arm-03, arm-04, arm-05, arm-06, arm-07, arm-08, arm-09, arm-10, arm-11, arm-12, arm-13, other, risc-v-01, risc-v-02, sim-01, sim-02, xtensa-01, xtensa-02]
```

Now with Build Rules, it becomes more complicated: build.yml

```yaml
# Select the Linux Builds based on PR Arch Label
Linux-Arch:
  uses: apache/nuttx-apps/.github/workflows/arch.yml@master
  needs: Fetch-Source
  with:
    boards: |
      [
        "arm-01", "other", "risc-v-01", "sim-01", "xtensa-01", ...
      ]

# Run the selected Linux Builds
Linux:
  needs: Linux-Arch
  if: ${{ needs.Linux-Arch.outputs.skip_all_builds != '1' }}
  strategy:
    matrix:
      boards: ${{ fromJSON(needs.Linux-Arch.outputs.selected_builds) }}
```

One thing remains the same: We configure the Target Groups in build.yml. (Instead of arch.yml)

For our Initial Implementation of Build Rules: We recorded the CI Build Performance for Simple PRs.

Then we made the Simple PRs faster…

| Build Time | Before | After |
|---|---|---|
| Arm32 | 2 hours | 1.5 hours |
| Arm64 | 2.2 hours | 30 mins |
| RISC-V | 1.8 hours | 50 mins |
| Xtensa | 2.2 hours | 1.5 hours |
| x86_64 | 2.2 hours | 10 mins |
| Simulator | 2.2 hours | 1 hour |

How did we make the Simple PRs faster?

We broke up Big Jobs (arm-05, riscv-01, riscv-02) into Multiple Smaller Jobs.

Small Jobs will really fly! (See the Build Job Details)

(We moved the RP2040 jobs from arm-05 to arm-06, then added arm-14. Followed by jobs riscv-03 … riscv-06)

We saw a 27% Reduction in GitHub Runner Hours! From 15 Runner Hours down to 11 Runner Hours per Arm32 Build.

We split the Board Labels according to Arch, like “Board: arm”.

Thus “Board: arm” should build the exact same way as “Arch: arm”.

Same for “Board: arm, Arch: arm”. We updated the Build Rules to use the Board Labels.

We split the other job into arm64 and x86_64.

Up Next: Reorg and rename the CI Build Jobs, for better performance and easier maintenance. But how?

I have a hunch that CI works better when we pack the jobs into One-Hour Time Slices

Kinda like packing yummy goodies into Bento Boxes, making sure they don’t overflow the Time Boxes :-)
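To make the Bento Box idea concrete, here is a rough sketch of First-Fit packing. It is only an illustration of the hunch, not something the NuttX CI does today: the function `pack_jobs` and the build durations are hypothetical…

```bash
#!/usr/bin/env bash
# First-Fit packing: place each build (duration in minutes) into the first
# time slice that still has room, opening a new slice when none fits.
# Prints the number of time slices needed.
#   $1 = size of each time slice in minutes, remaining args = build durations
pack_jobs() {
  local limit=$1; shift
  local -a slices=()
  local duration i placed
  for duration in "$@"; do
    placed=0
    for i in "${!slices[@]}"; do
      if (( slices[i] + duration <= limit )); then
        slices[i]=$(( slices[i] + duration ))
        placed=1
        break
      fi
    done
    if (( placed == 0 )); then
      slices+=("$duration")
    fi
  done
  echo "${#slices[@]}"
}

# Hypothetical builds of 40, 30, 20 and 10 minutes, packed into One-Hour slices
pack_jobs 60 40 30 20 10
```

Here the four hypothetical builds fit into two One-Hour Time Slices (40 + 20 and 30 + 10). Real build durations would have to come from the CI Logs.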

We should probably shift the Riskiest / Most Failure Prone builds into the First Build Job (arm-00, risc-v-00, sim-00).

And we shall Fail Faster (in case of problems), skipping the rest of the jobs.

Recently we see many builds for Arm32 Goldfish.

Can we limit the builds to the Goldfish Boards only?

To identify Goldfish PRs, we can label the PRs like this: “Arch: arm, SubArch: goldfish” and “Board: arm, SubBoard: goldfish”

Instead of Building an Entire Arch (arm-01)…

Can we build One Single SubArch (stm32)?

How will we Filter the Build Jobs (e.g. arm-01) that should be built for a SubArch (e.g. stm32)? (Maybe like this)

Spot the exact knotty moment that we were told about the CI Shutdown