📝 6 Oct 2023

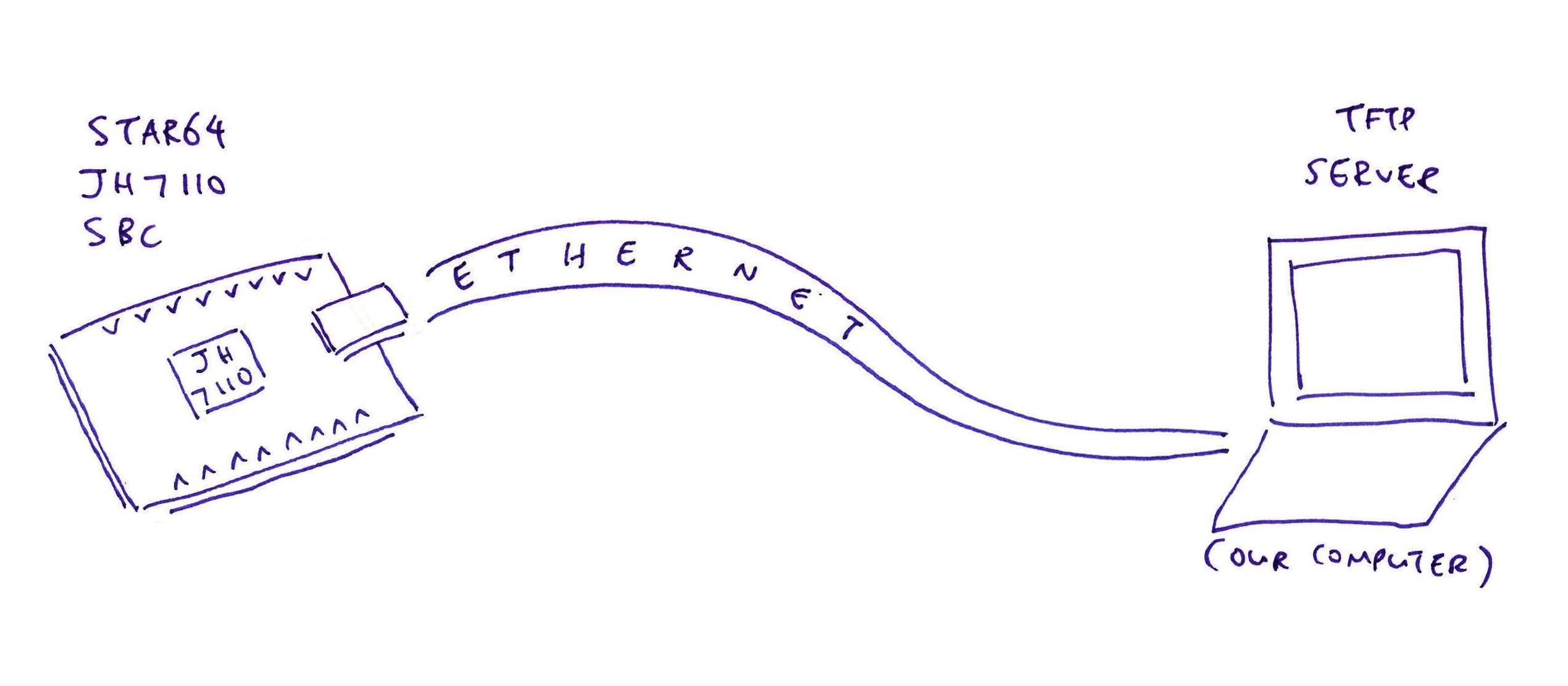

We’re porting Apache NuttX Real-Time Operating System to the Pine64 Star64 RISC-V Single-Board Computer. (Pic below)

(Powered by StarFive JH7110 SoC, same as the VisionFive2 SBC)

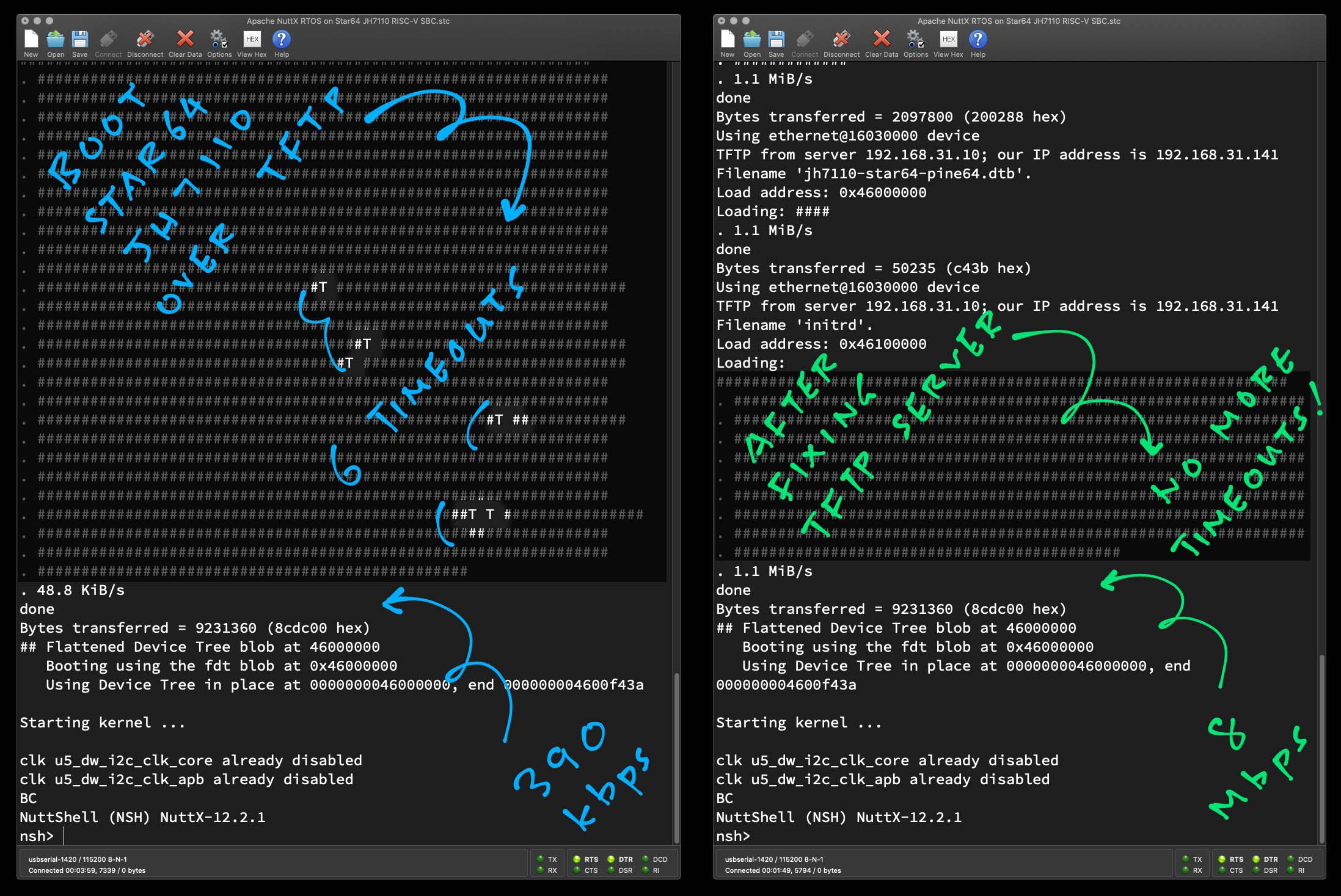

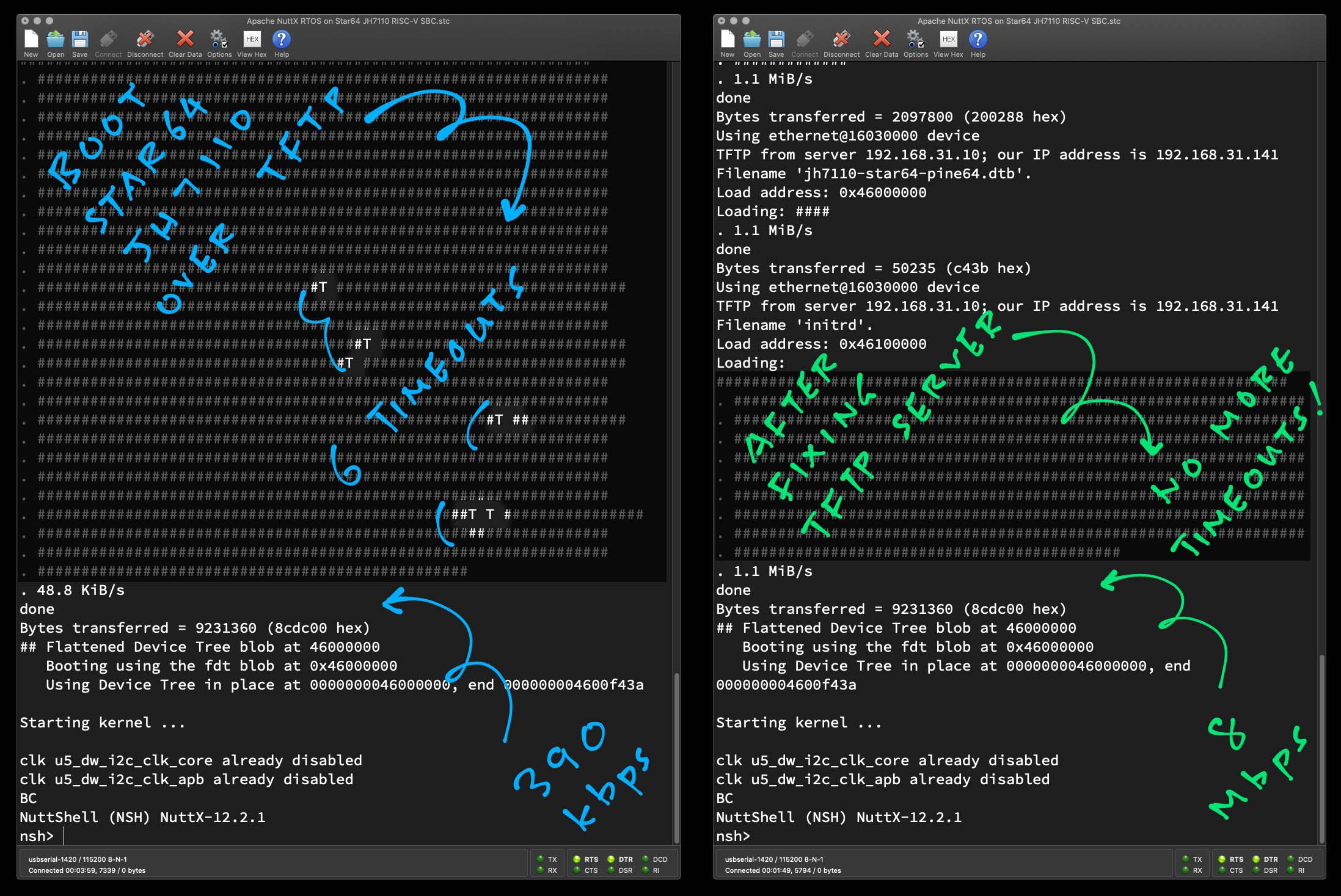

But we’re hitting frequent TFTP Timeouts (“T” below) while booting NuttX over the Local Network. Effective Transfer Rate is only 390 kbps!

Loading:

. ##T #################################

. #######T ############################

. #####T ##############################

. ######################T #############

. ###################T T ##############

. 48.8 KiB/sThis makes NuttX Testing super slow… Our SBC takes 4 minutes to boot over the Wired Local Network!

How are we booting the SBC over the Network?

We’re booting our Star64 SBC (pic below) over a Wired Ethernet Local Network with U-Boot Bootloader and TFTP.

(That’s the Trivial File Transfer Protocol)

Can we fix the TFTP Timeouts?

Yep! In this article we talk about the Strange Workaround for the TFTP Timeouts…

First we throttled our TFTP Server to send packets slower

(Which made it worse)

Next we reduced the TFTP Timeout Duration in our server

(Nope doesn’t work)

But when we send every TFTP Data Packet twice…

The problem mysteriously disappears!

NuttX now boots over the network in 20 seconds

(Previously 4 minutes!)

We verified this with 2 TFTP Servers: Linux and macOS

So yes we have a (curiously unsatisfactory) solution.

Here’s what we tested with Star64 SBC and U-Boot Bootloader…

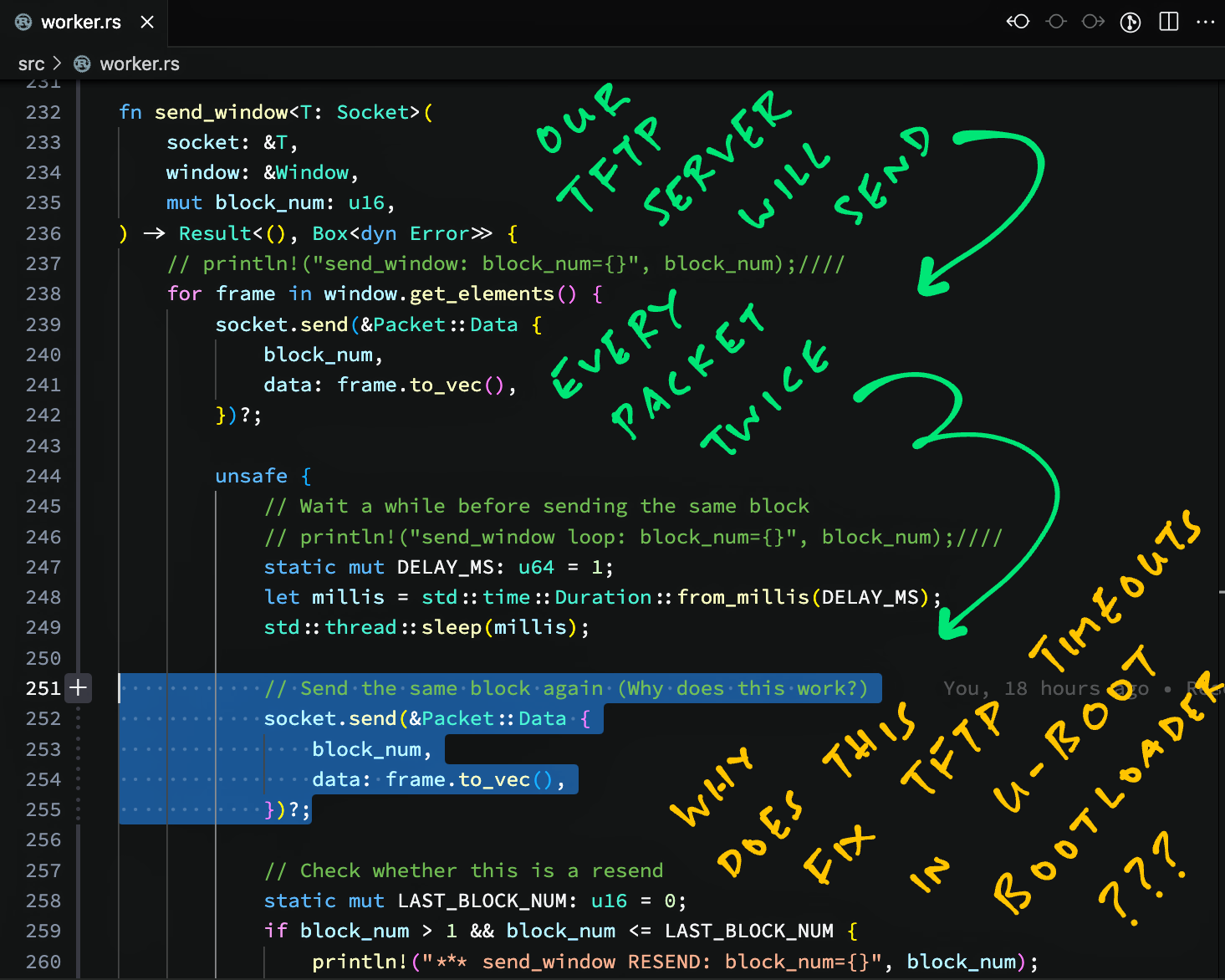

We hacked our TFTP Server to send every packet twice?

Indeed! Because we can’t configure any TFTP Server to send Data Packets twice.

Let’s modify the rs-tftpd TFTP Server. Here’s the code that sends TFTP Data Packets: rs-tftpd-timeout/src/worker.rs

// Transmit every Data Frame in the Data Window

// to the TFTP Client

fn send_window<T: Socket>(

socket: &T, // UDP Socket

window: &Window, // Data Window to be sent

mut block_num: u16, // Current Block Number

) -> Result<(), Box<dyn Error>> {

// For every Data Frame in the Data Window...

for frame in window.get_elements() {

// Send the TFTP Data Packet

socket.send(&Packet::Data {

block_num, // Current Block Number

data: frame.to_vec(), // Data Frame for the Packet

})?;

// Omitted: Increment the Block NumberTo send every TFTP Data Packet twice, we inserted this: worker.rs

// Right after sending the TFTP Data Packet...

// Wait 1 millisecond

let millis = Duration::from_millis(1);

thread::sleep(millis);

// Send the same TFTP Data Packet again.

// Why does this work?

socket.send(&Packet::Data {

block_num, // Current Block Number

data: frame.to_vec(), // Data Frame for the Packet

})?;

// Omitted: Increment the Block Number(We inserted a 1 millisecond delay between packets)

It’s a simple mod, but it solves our TFTP Timeout!

UPDATE: rs-tftpd now supports sending Duplicate Packets!

How do we run this?

Follow these steps to start our Modified TFTP Server on Linux and macOS…

## Download our Modified TFTP Server

git clone https://github.com/lupyuen/rs-tftpd-timeout

cd rs-tftpd-timeout

## Stop the xinetd + tftpd server

sudo service xinetd stop

## Might need this to set the Rust Toolchain for `sudo`

sudo $HOME/.cargo/bin/rustup default stable

## Start our Modified TFTP Server.

## Requires `sudo` because Port 69 is privileged.

## TODO: Change `/tftpboot` to your TFTP Folder

sudo --preserve-env \

$HOME/.cargo/bin/cargo run -- \

-i 0.0.0.0 \

-p 69 \

-d /tftpboot

## Or use `nohup` to keep it running continuously

## nohup sudo --preserve-env $HOME/.cargo/bin/cargo run -- -i 0.0.0.0 -p 69 -d /tftpboot &

## tail -f nohup.out

## Test our TFTP Server

## TODO: Change `192.168.x.x` to your TFTP Server Address

## TODO: Change `initrd` to a file in your TFTP Folder

curl -v \

--output initrd \

tftp://192.168.x.x/initrdWon’t the extra Data Packet confuse the TFTP Client?

That’s perfectly OK because the TFTP Block Number (sequence number) is encoded inside the UDP Data Packet.

The TFTP Client (like curl) will do the right thing and drop the Duplicate Data Packets…

$ curl -v --output initrd tftp://192.168.31.10/initrd

* Connected to 192.168.31.10 () port 69 (#0)

* set timeouts for state 0; Total 300, retry 6 maxtry 50

* got option=(tsize) value=(9231360)

* tsize parsed from OACK (9231360)

* got option=(blksize) value=(512)

* blksize parsed from OACK (512) requested (512)

* got option=(timeout) value=(6)

* Connected for receive

* set timeouts for state 1; Total 3600, retry 72 maxtry 50

* Received last DATA packet block 1 again.

* Received last DATA packet block 2 again.

* Received last DATA packet block 3 again.

* Received last DATA packet block 4 again.Let’s test this with Star64 U-Boot…

UPDATE: rs-tftpd now supports sending Duplicate Packets!

## Install `tftpd` in Rust

cargo install tftpd

## Create a folder for the TFTP Files

mkdir $HOME/tftproot

## Start the TFTP Server. Needs `sudo` because

## Port 69 is a privileged low port.

## Send Duplicate Packets to prevent TFTP Timeouts.

sudo $HOME/.cargo/bin/tftpd \

--duplicate-packets 1 \

-i 0.0.0.0 \

-p 69 \

-d "$HOME/tftproot"

## Or use `nohup` to keep it running continuously

nohup sudo $HOME/.cargo/bin/tftpd --duplicate-packets 1 -i 0.0.0.0 -p 69 -d "$HOME/tftproot" &

tail -f nohup.out

Does it work on Star64 with U-Boot Bootloader?

Let’s use Raspberry Pi 4 (32-bit Debian) as our TFTP Server…

We run the standard xinetd + tftpd on our Pi

Then we switch to our Modified TFTP Server

(From the previous section)

Before Fixing: TFTP Transfer Rate (for xinetd + tftpd) is 390 kbps (with 6 timeouts)

Filename 'initrd'. Loading:

. ##T #################################

. #######T ############################

. #####T ##############################

. ######################T #############

. ###################T T ##############

. 48.8 KiB/s

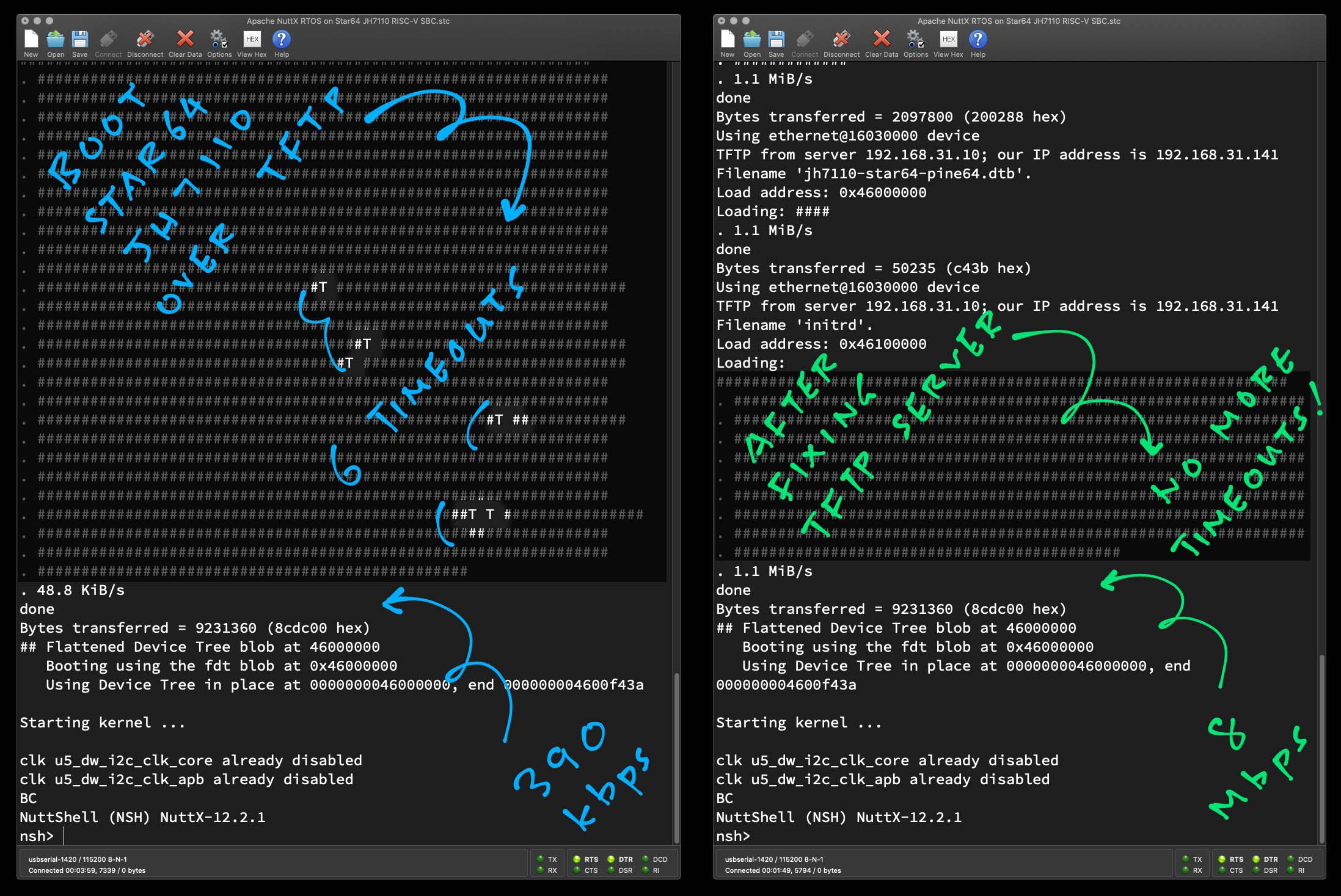

Bytes transferred = 9,231,360After Fixing: TFTP Transfer Rate (for our Modified TFTP Server) is 8 Mbps (with NO timeouts)

Filename 'initrd'. Loading:

. #####################################

. #####################################

. #####################################

. #####################################

. #####################################

. 1.1 MiB/s

Bytes transferred = 9,231,360Yep it works: No more TFTP Timeouts!

And it’s so much faster: NuttX boots in 20 seconds!

But why? We do a little research…

Surely someone else might have the same problem?

Our TFTP Timeout looks similar to this…

I have a hunch that it’s something specific to U-Boot on JH7110 SoC. And we probably can’t reproduce it with Linux on JH7110.

Sending Duplicate TFTP Packets… Feels horribly wrong!

Yeah but we might have a precedent! According to martin-zs…

“Years ago I used to work in a mixed environment (Windows/Solaris/HP-US/Linux servers) and I noticed that most windows machines send an insane amount of packets in triplicate. UNIX would send everything once. This would make me wonder if the JH7110 SoC (or the licensed IP used) was only tested using windows machines.”

“My guess would be that if you setup a windows machine to be the tftp server, it would work - just because of the triple packets (mostly for UDP).”

Apparently Windows might send every TFTP Packet 3 times.

Maybe that’s why JH7110 U-Boot won’t work so well with Linux TFTP Servers?

How will we track down the root cause?

We might need Wireshark to sniff the TFTP Packets.

And a Windows TFTP Server to verify if it really sends every packet 3 times.

Before the sniffing, we do some sleuthing…

Why does every TFTP Timeout pause for 5 seconds?

The 5-second Timeout Duration is computed here: worker.rs

// Send the file to TFTP Client

fn send_file(self, file: File) -> Result<(), Box<dyn Error>> {

...

// Compute the TFTP Timeout

let mut time = Instant::now() - (

self.timeout + // 5 seconds

TIMEOUT_BUFFER // 1 second

);What if we reduce the Timeout Duration?

When we change the code above to reduce the Timeout Duration, TFTP stops working altogether…

The TFTP Client (U-Boot) keeps timing out without transferring any data.

We try something else…

What if we throttle our TFTP Server to send packets slower?

We tried to slow down the TFTP Server: When we hit any Resends and Timeouts, we increase the delay between packets.

Here’s the code that waits a bit (1 millisecond initially) between packets: worker.rs

// Omitted: Send the TFTP Data Packet

// Wait a while before sending the next packet

static mut DELAY_MS: u64 = 1;

let millis = Duration::from_millis(DELAY_MS);

thread::sleep(millis);Then we inserted the logic to check if we are resending the same packet: worker.rs

// Is this is a resend?

// Compare with the last Block Number

static mut LAST_BLOCK_NUM: u16 = 0;

if block_num > 1 && block_num <= LAST_BLOCK_NUM {

// If it's a resend: Double the delay

println!("*** send_window RESEND: block_num={}", block_num);

DELAY_MS = DELAY_MS * 2;

}

// Remember the last Block Number

LAST_BLOCK_NUM = block_num;If this is a Resend, we double the delay between packets.

Also we check for Timeout by comparing Timestamps: worker.rs

// Is this is a delayed send?

// Compare with the last Timestamp

static mut LAST_TIMESTAMP: ... = ... Instant::now();

let diff_time = Instant::now() - *LAST_TIMESTAMP;

if block_num > 1 && diff_time > Duration::from_millis(1000) {

// If it's delayed by 1 sec: Double the delay

println!("+++ send_window DELAY: block_num={}", block_num);

DELAY_MS = DELAY_MS * 2;

}

// Remember the last Timestamp

*LAST_TIMESTAMP = Instant::now();If this is a Timeout, we double the delay between packets.

Does it work?

Nope, it got worse. We still see Timeouts in spite of the extra delay between packets.

And the Data Transfer becomes terribly slow (because of the longer and longer delays)…

Sending initrd

+++ send_window DELAY: block_num=15

+++ send_window DELAY: block_num=2366

+++ send_window DELAY: block_num=2755

+++ send_window DELAY: block_num=5012

Sent initrdThus throttling our TFTP Server doesn’t help.

We sure this isn’t a Hardware Problem at our TFTP Server?

Or a Network Problem?

We tested 2 TFTP Servers: Raspberry Pi 4 (32-bit Linux) and MacBook Pro (x64 macOS)…

| TFTP Server | xinetd + tftpd | Original rs-tftpd | Modified rs-tftpd |

|---|---|---|---|

| Linux | Some Timeouts | NO Timeouts | |

| macOS | Some Timeouts | NO Timeouts |

Thus we’re sure that it’s not a Hardware or OS Problem at the TFTP Server.

Then we downloaded a 9 MB file from Raspberry Pi to MacBook over TFTP on Wired Ethernet…

## Before Fixing TFTP Server: 19 Mbps (xinetd + tftpd)

$ curl --output initrd tftp://192.168.31.10/initrd

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 9015k 0 9015k 0 0 2374k 0 --:--:-- 0:00:03 --:--:-- 2374k

## After Fixing TFTP Server: 3.3 Mbps (Modified rs-tftpd)

$ curl --output initrd tftp://192.168.31.10/initrd

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 9015k 100 9015k 0 0 411k 0 0:00:21 0:00:21 --:--:-- 411kOur Wired Ethernet Network looks hunky dory, no problems here.

(Our Modified TFTP Server is slower because of the 1 millisecond delay between packets. And we sent every packet twice)

So this TFTP Timeout seems specific to JH7110 U-Boot?

Yeah. Maybe U-Boot Bootloader on Star64 JH7110 missed some Incoming UDP Packets?

But we haven’t actually fixed the TFTP Timeout?

Yeah we have a bad hack, a strange workaround for the TFTP Timeout problem.

To actually fix the problem, we’d have to dive into the U-Boot Source Code. And figure out what’s happening inside the JH7110 Network Driver and TFTP Client.

(And maybe this problem has been fixed in the latest U-Boot!)

Porting NuttX to Star64 JH7110 becomes so much faster now. Stay tuned for quicker updates!

(Like the upcoming NuttX I2C Driver for JH7110)

Many Thanks to my GitHub Sponsors for supporting my work! This article wouldn’t have been possible without your support.

Got a question, comment or suggestion? Create an Issue or submit a Pull Request here…