📝 19 Nov 2023

What’s this MMU?

Memory Management Unit (MMU) is the hardware inside our Single-Board Computer (SBC) that does…

Memory Protection: Prevent Applications (and Kernel) from meddling with things (in System Memory) that they’re not supposed to

Virtual Memory: Allow Applications to access chunks of “Imaginary Memory” at Exotic Addresses (0x8000_0000!)

But in reality: They’re System RAM recycled from boring old addresses (like 0x5060_4000)

(Kinda like “The Matrix”)

Sv39 sounds familiar… Any relation to SVR4?

Actually Sv39 Memory Management Unit is inside many RISC-V SBCs…

Pine64 Ox64, Sipeed M1s

(Based on Bouffalo Lab BL808 SoC)

Pine64 Star64, StarFive VisionFive 2, Milk-V Mars

(Based on StarFive JH7110 SoC)

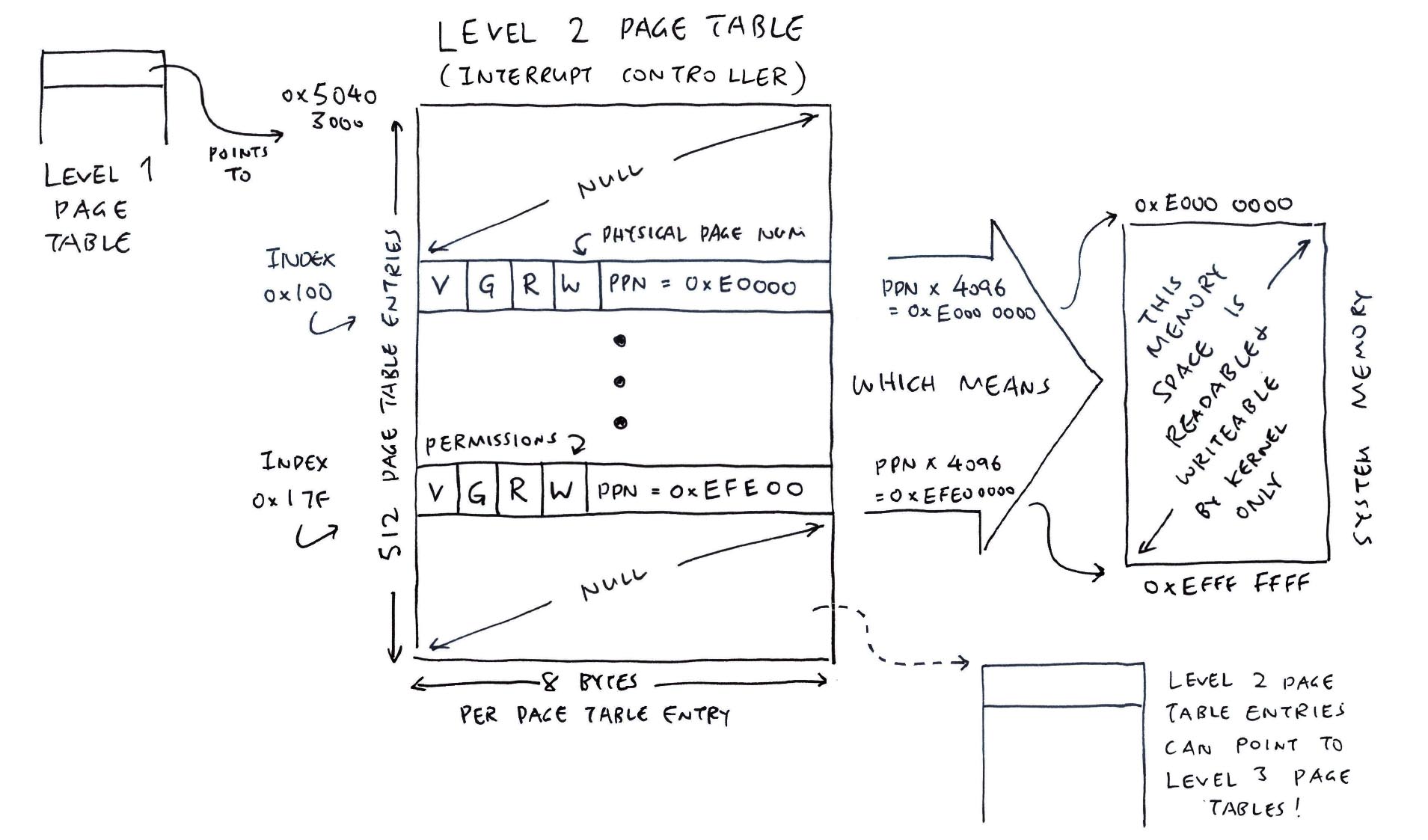

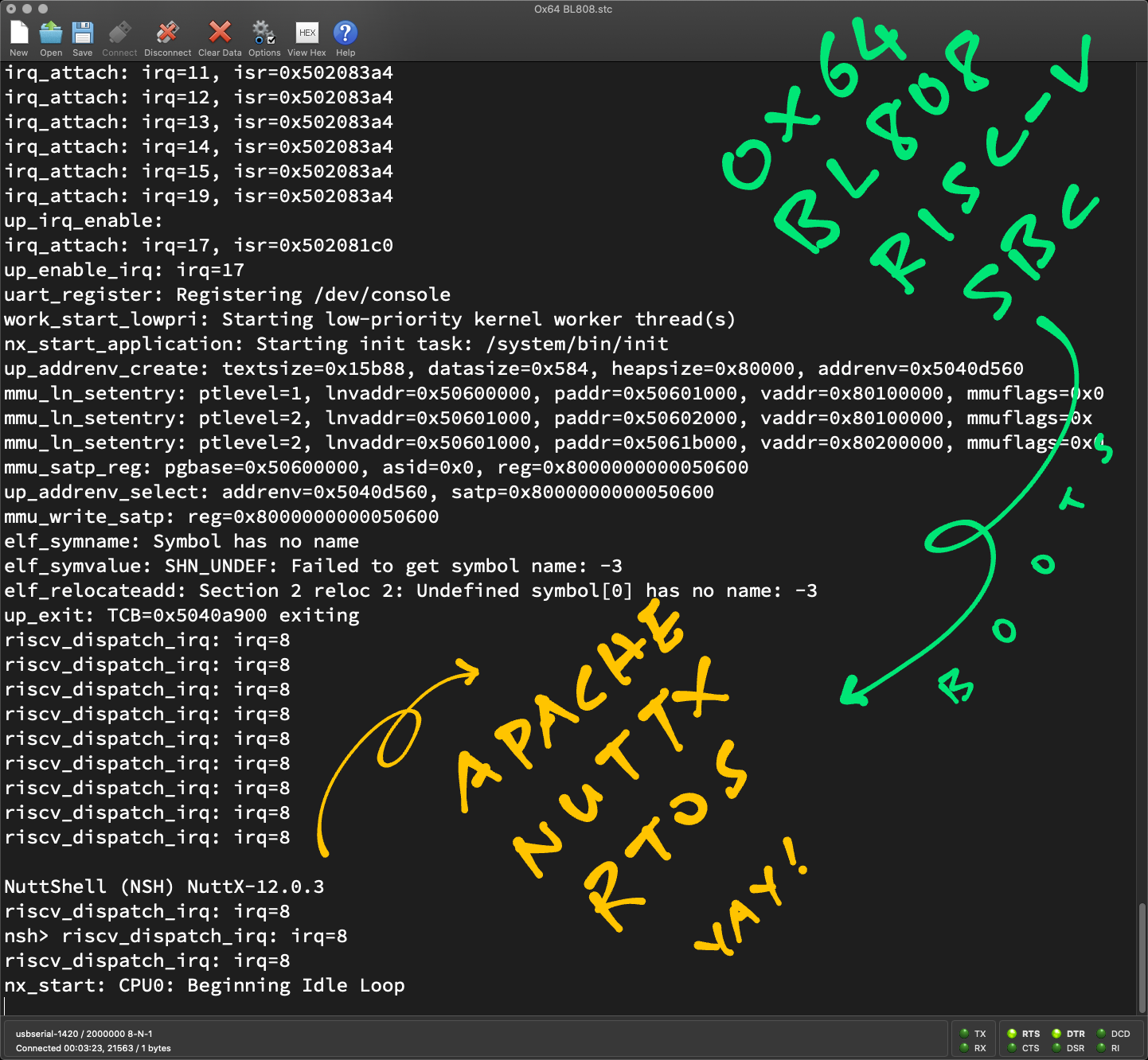

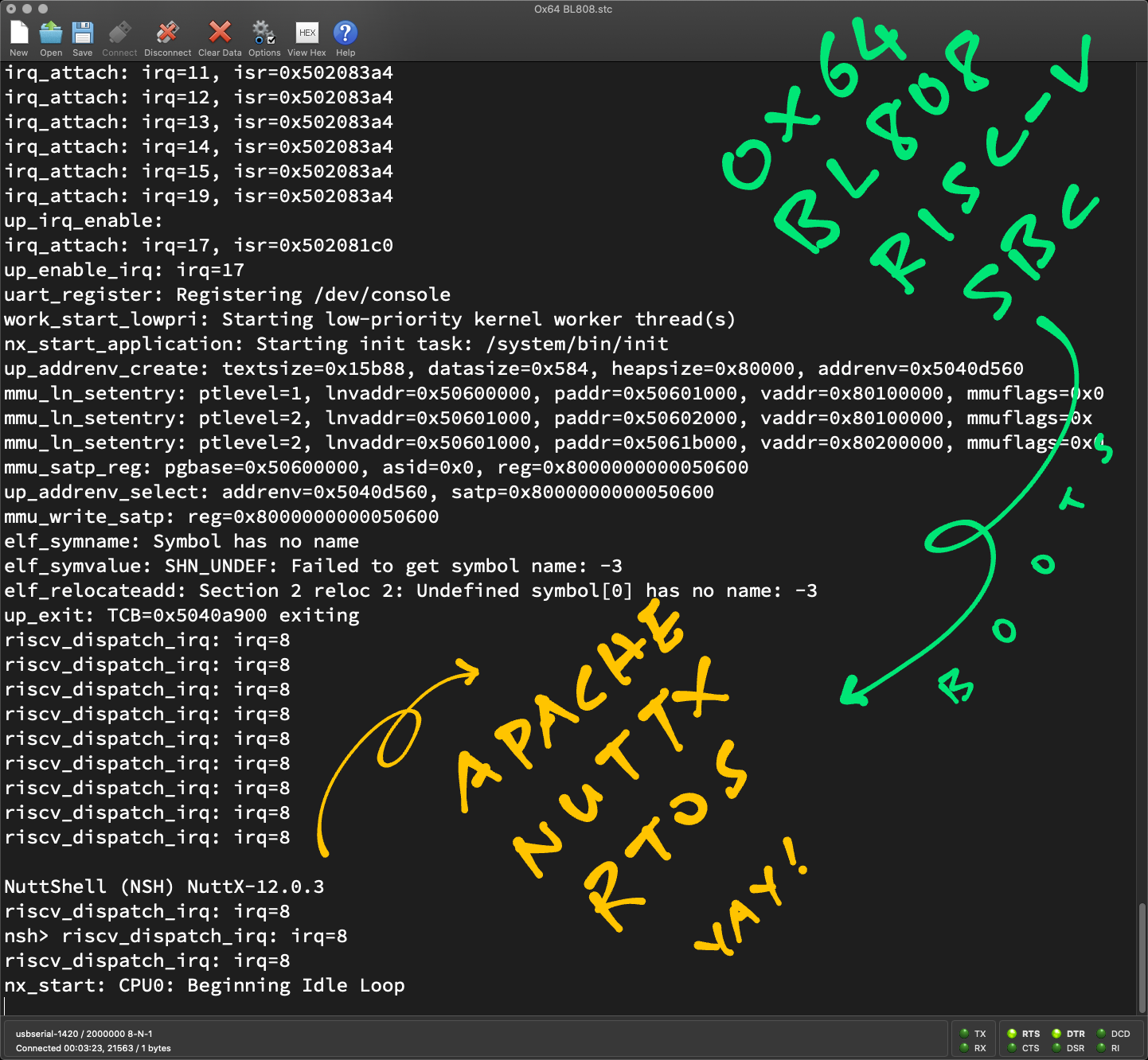

In this article, we find out how Sv39 MMU works on a simple barebones SBC: Pine64 Ox64 64-bit RISC-V SBC. (Pic below)

(Powered by Bouffalo Lab BL808 SoC)

We start with Memory Protection, then Virtual Memory. We’ll do this with Apache NuttX RTOS. (Real-Time Operating System)

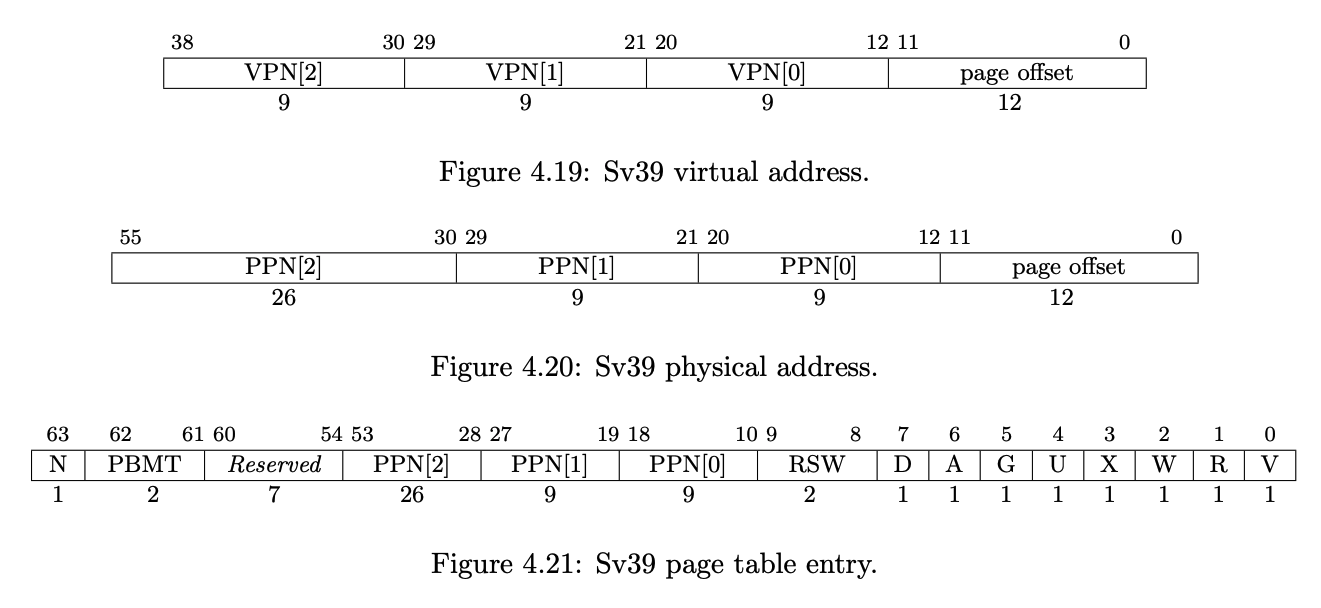

And “Sv39” means…

“Sv” signifies it’s a RISC-V Extension for Supervisor-Mode Virtual Memory

“39” because it supports 39 Bits for Virtual Addresses

(0x0 to 0x7F_FFFF_FFFF!)

Coincidentally it’s also 3 by 9…

3 Levels of Page Tables, each level adding 9 Address Bits!

Why NuttX?

Apache NuttX RTOS is tiny and easier to teach, as we walk through the MMU Internals.

And we’re documenting everything that happens when NuttX configures the Sv39 MMU for Ox64 SBC.

This stuff is covered in Computer Science Textbooks. No?

Let’s learn things a little differently! This article will read (and look) like a (yummy) tray of Chunky Chocolate Brownies… Because we love Food Analogies.

(Apologies to my fellow CS Teachers)

Pine64 Ox64 64-bit RISC-V SBC (Sorry for my substandard soldering)

What memory shall we protect on Ox64?

Ox64 SBC needs the Memory Regions below to boot our Kernel.

Today we configure the Sv39 MMU so that our Kernel can access these regions (and nothing else)…

| Region | Start Address | Size |
|---|---|---|
| Memory-Mapped I/O | 0x0000_0000 | 0x4000_0000 (1 GB) |
| Kernel Code (RAM) | 0x5020_0000 | 0x0020_0000 (2 MB) |
| Kernel Data (RAM) | 0x5040_0000 | 0x0020_0000 (2 MB) |
| Page Pool (RAM) | 0x5060_0000 | 0x0140_0000 (20 MB) |
| Interrupt Controller | 0xE000_0000 | 0x1000_0000 (256 MB) |

Our (foodie) hygiene requirements…

Applications shall NOT be allowed to touch these Memory Regions

Kernel Code Region will allow Read and Execute Access

Other Memory Regions will allow Read and Write Access

Memory-Mapped I/O will be used by Kernel for controlling the System Peripherals: UART, I2C, SPI, …

(Same for Interrupt Controller)

Page Pool will be allocated (on-the-fly) by our Kernel to Applications

(As Virtual Memory)

Our Kernel runs in RISC-V Supervisor Mode

Applications run in RISC-V User Mode

Any meddling with Forbidden Regions by Kernel and Applications shall immediately trigger a Page Fault (RISC-V Exception)

We begin with the biggest chunk: I/O Memory…

[ 1 GB per Huge Chunk ]

How will we protect the I/O Memory?

| Region | Start Address | Size |
|---|---|---|
| Memory-Mapped I/O | 0x0000_0000 | 0x4000_0000 (1 GB) |

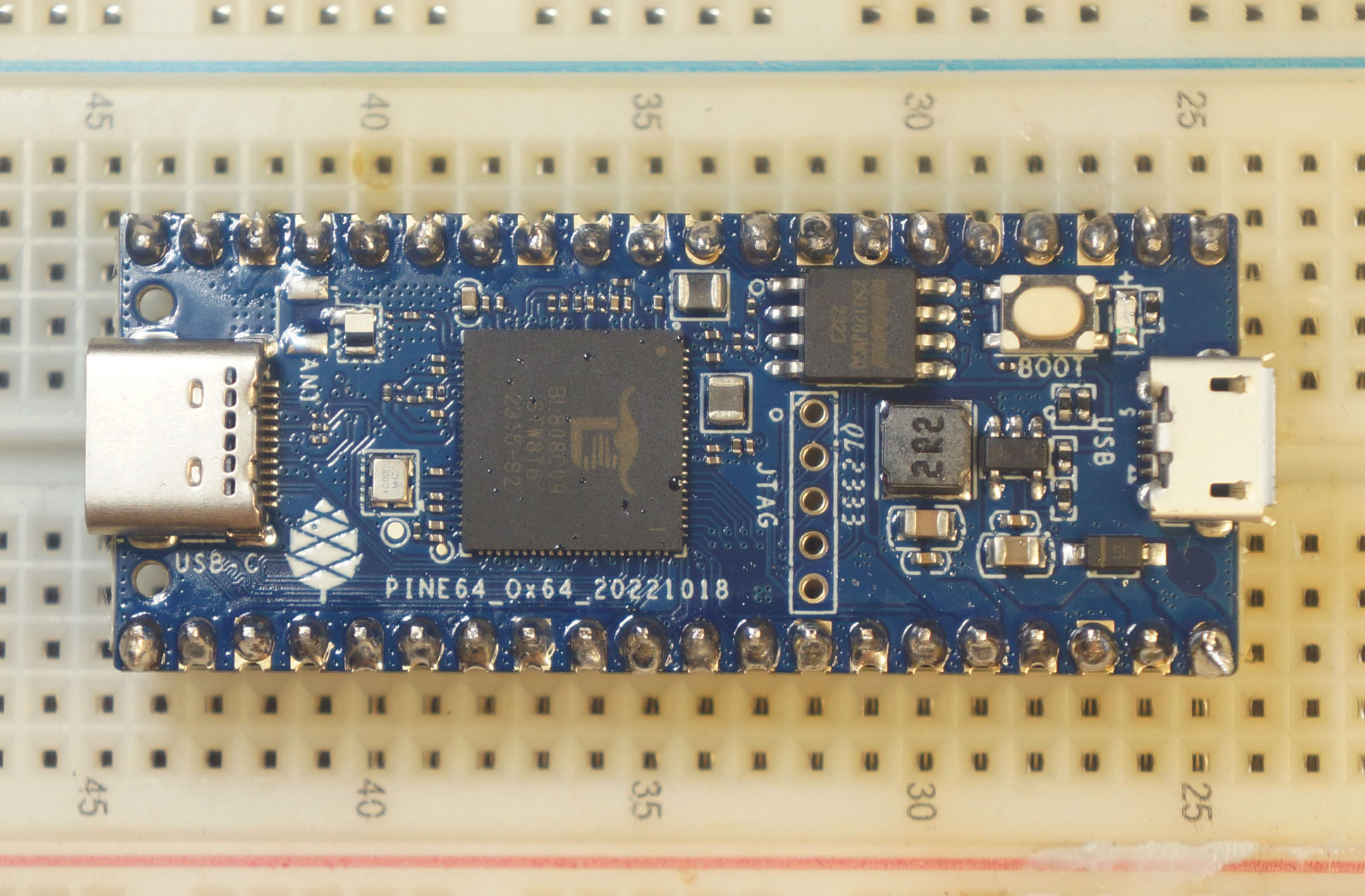

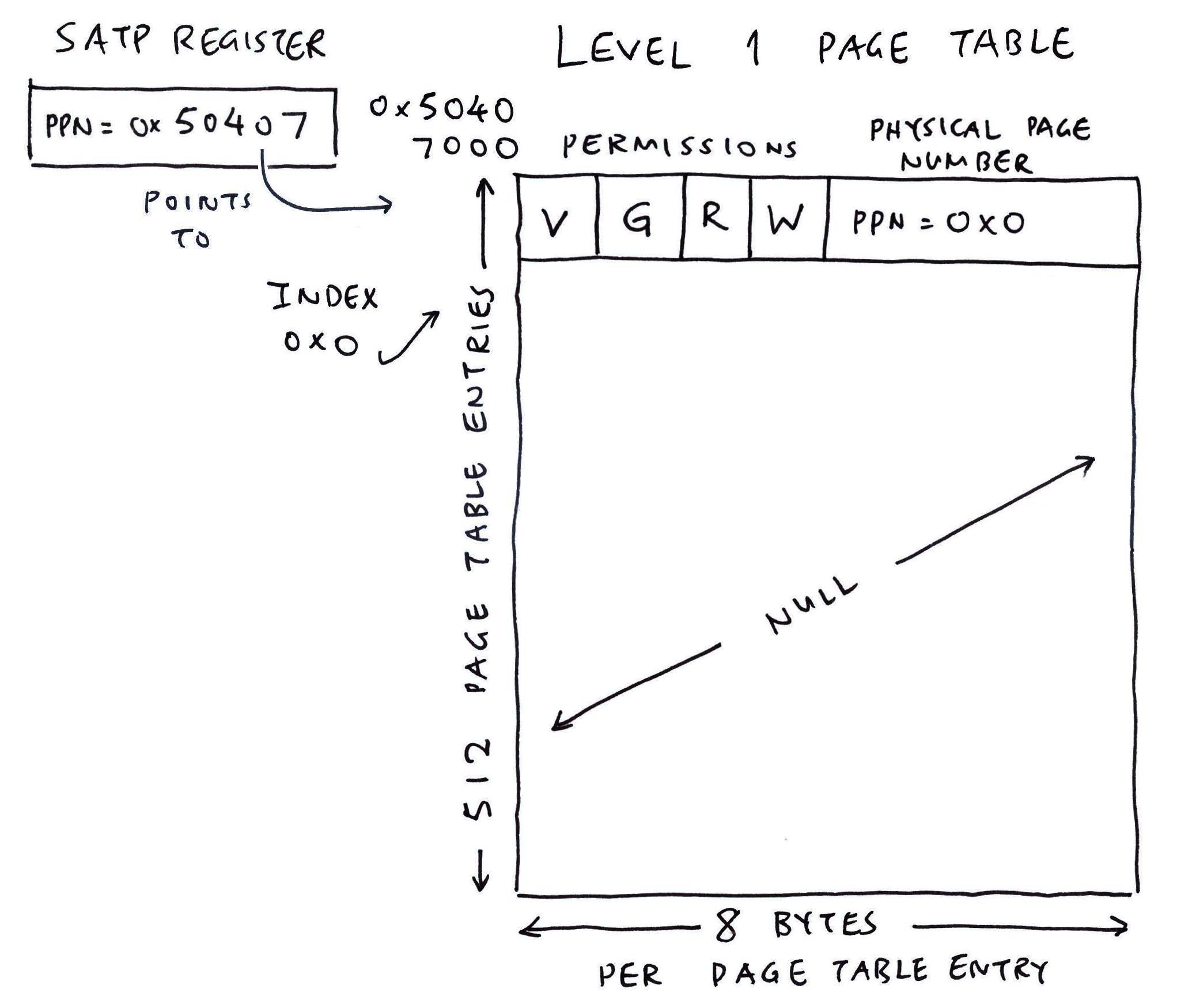

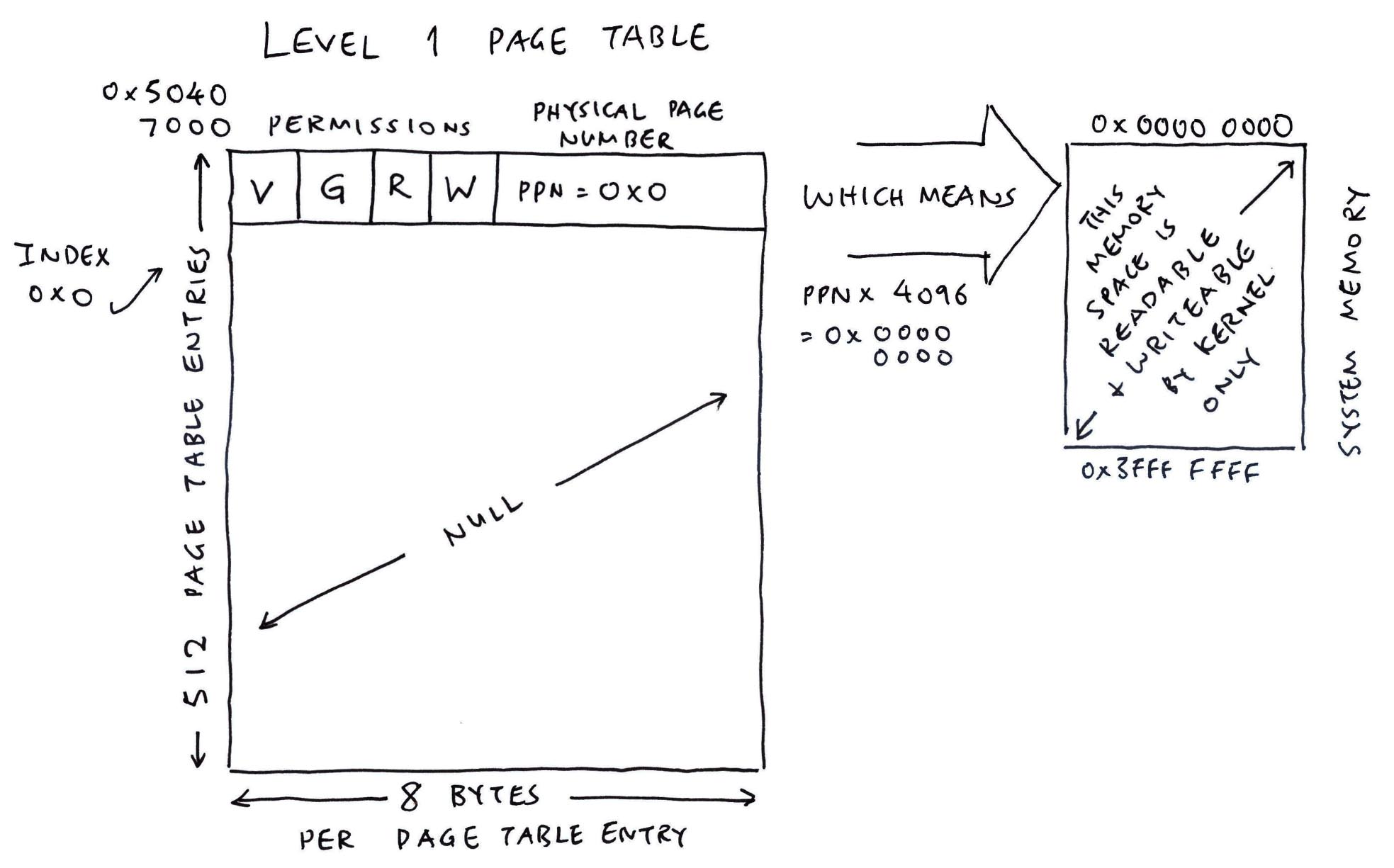

Here’s the simplest setup for Sv39 MMU that will protect the I/O Memory from 0x0 to 0x3FFF_FFFF…

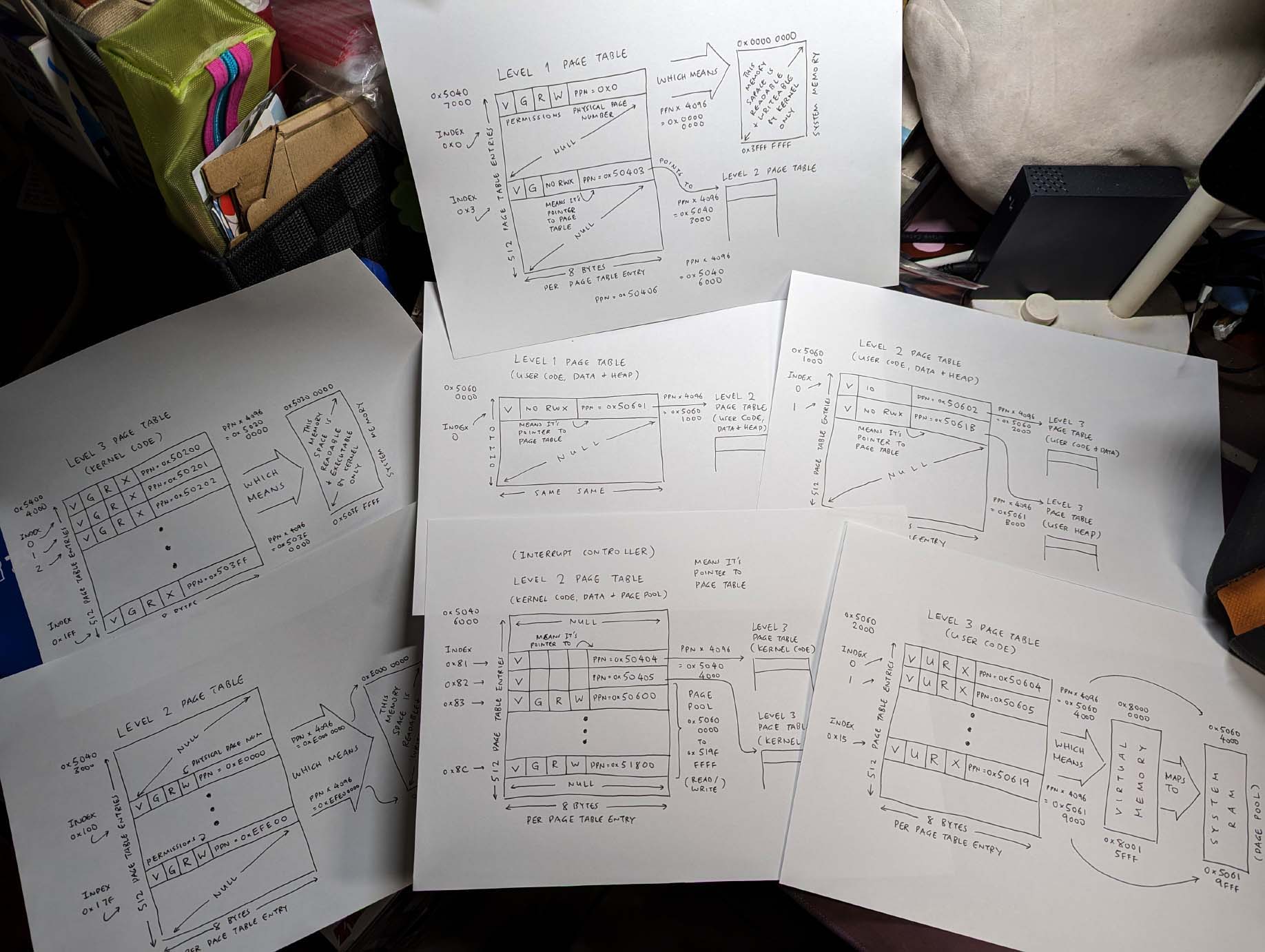

All we need is a Level 1 Page Table. (4,096 Bytes)

Our Page Table contains only one Page Table Entry (8 Bytes) that says…

V: It’s a Valid Page Table Entry

G: It’s a Global Mapping that’s valid for all Address Spaces

R: Allow Reads for 0x0 to 0x3FFF_FFFF

W: Allow Writes for 0x0 to 0x3FFF_FFFF

(We don’t allow Execute for I/O Memory)

SO: Enforce Strong-Ordering for Memory Access

S: Enforce Shareable Memory Access

PPN: Physical Page Number (44 Bits) is 0x0

(PPN = Memory Address / 4,096)
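To make the bit layout concrete, here is a minimal sketch (not the NuttX code) of how such an entry could be packed into 8 bytes. The positions of V, R, W, X, U and G follow the RISC-V Privileged Spec. The two T-Head bit positions (SO at Bit 63, S at Bit 60) are an assumption based on the T-Head C906 documentation, and `make_pte` is a hypothetical helper name.

```c
#include <assert.h>
#include <stdint.h>

// Standard Sv39 Page Table Entry flags (RISC-V Privileged Spec)
#define PTE_V (1ULL << 0)  // Valid
#define PTE_R (1ULL << 1)  // Read
#define PTE_W (1ULL << 2)  // Write
#define PTE_X (1ULL << 3)  // Execute
#define PTE_U (1ULL << 4)  // User
#define PTE_G (1ULL << 5)  // Global

// Assumed T-Head C906 extensions (not part of standard Sv39)
#define THEAD_SHAREABLE    (1ULL << 60)  // S
#define THEAD_STRONG_ORDER (1ULL << 63)  // SO

// Hypothetical helper: Pack a 4 KB-aligned Physical Address and
// flags into a Page Table Entry. The PPN sits at Bits 10 to 53.
static uint64_t make_pte(uint64_t paddr, uint64_t flags) {
  assert((paddr & 0xFFF) == 0);  // Must be aligned to 4 KB
  uint64_t ppn = paddr >> 12;    // PPN = Memory Address / 4,096
  return (ppn << 10) | flags | PTE_V;
}
```

With these assumptions, `make_pte(0x0, PTE_G | PTE_R | PTE_W)` gives 0x27 for the I/O Memory (before the T-Head bits are added).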

But we have so many questions…

Why 0x3FFF_FFFF?

We have a Level 1 Page Table. Every Entry in the Page Table configures a (huge) 1 GB Chunk of Memory.

(Or 0x4000_0000 bytes)

Our Page Table Entry is at Index 0. Hence it configures the Memory Range for 0x0 to 0x3FFF_FFFF. (Pic below)
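The chunk sizes fall straight out of the "3 by 9" structure. Here is a small sketch of the arithmetic, with hypothetical helper names (`chunk_size`, `l1_entry_base`) that are not part of NuttX…

```c
#include <assert.h>
#include <stdint.h>

// Hypothetical helper: Bytes of memory configured by one
// Page Table Entry at the given Sv39 Level (1, 2 or 3)
static uint64_t chunk_size(int level) {
  assert(level >= 1 && level <= 3);
  // Level 3 covers one 4 KB Page (12 Address Bits).
  // Each level above it adds 9 Address Bits.
  return 1ULL << (12 + 9 * (3 - level));
}

// Hypothetical helper: First address configured by the entry
// at `index` (0 to 511) of a Level 1 Page Table.
// (Ignores the sign extension that Sv39 applies above Bit 38)
static uint64_t l1_entry_base(unsigned index) {
  assert(index < 512);
  return (uint64_t)index * chunk_size(1);
}
```

For Index 0, the chunk starts at `l1_entry_base(0)` = 0x0 and ends at `l1_entry_base(0) + chunk_size(1) - 1` = 0x3FFF_FFFF.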

How to allocate the Page Table?

In NuttX, we write this to allocate the Level 1 Page Table: bl808_mm_init.c

// Number of Page Table Entries (8 bytes per entry)

#define PGT_L1_SIZE (512) // Page Table Size is 4 KB

// Allocate Level 1 Page Table from `.pgtables` section

static size_t m_l1_pgtable[PGT_L1_SIZE]

locate_data(".pgtables");

.pgtables comes from the NuttX Linker Script: ld.script

/* Page Tables (aligned to 4 KB boundary) */

.pgtables (NOLOAD) : ALIGN(0x1000) {

*(.pgtables)

. = ALIGN(4);

} > ksram

Then GCC Linker helpfully allocates our Level 1 Page Table at RAM Address 0x5040_7000.

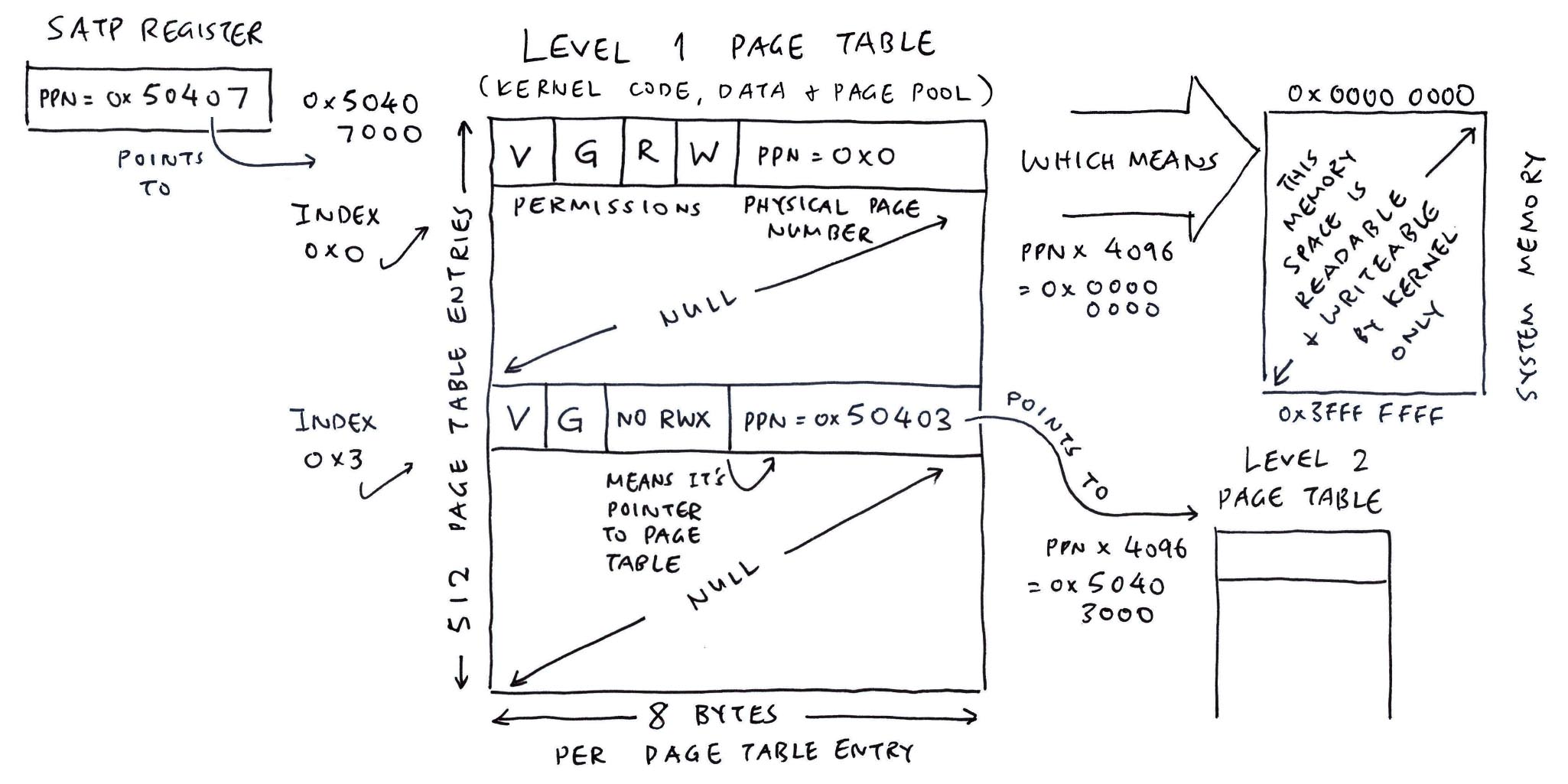

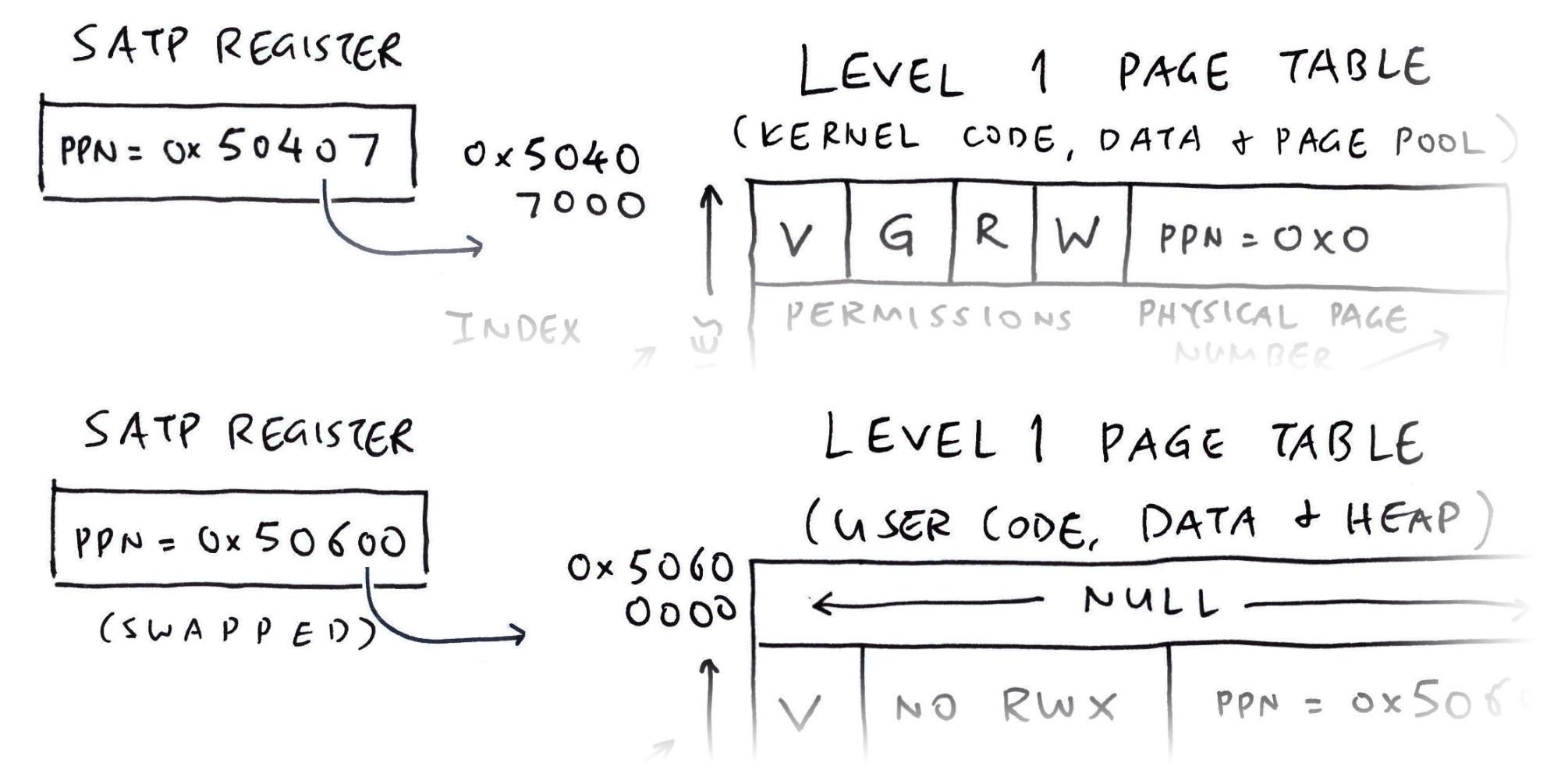

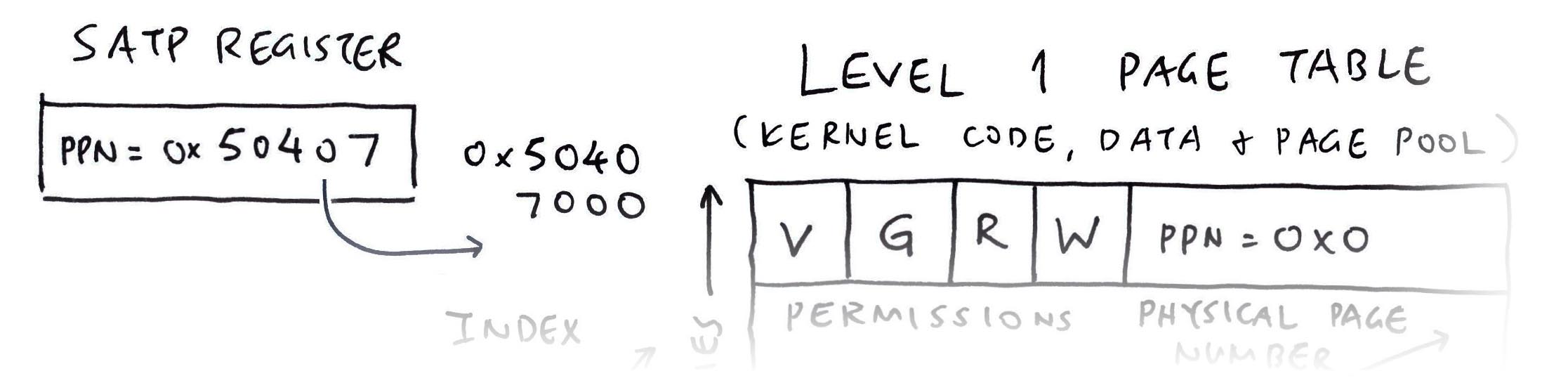

What is SATP?

SATP is the RISC-V System Register for Supervisor Address Translation and Protection.

To enable the MMU, we set SATP Register to the Physical Page Number (PPN) of our Level 1 Page Table…

PPN = Address / 4096

= 0x50407000 / 4096

= 0x50407

This is how we set the SATP Register in NuttX: bl808_mm_init.c

// Set the SATP Register to the

// Physical Page Number of Level 1 Page Table.

// Set SATP Mode to Sv39.

mmu_enable(

g_kernel_pgt_pbase, // 0x5040 7000 (Page Table Address)

0 // Set Address Space ID to 0

);

How to set the Page Table Entry?

To set the Level 1 Page Table Entry for 0x0 to 0x3FFF_FFFF: bl808_mm_init.c

// Map the I/O Region in Level 1 Page Table

mmu_ln_map_region(

1, // Level 1

PGT_L1_VBASE, // 0x5040 7000 (Page Table Address)

MMU_IO_BASE, // 0x0 (Physical Address)

MMU_IO_BASE, // 0x0 (Virtual Address)

MMU_IO_SIZE, // 0x4000 0000 (Size is 1 GB)

PTE_R | PTE_W | PTE_G | MMU_THEAD_STRONG_ORDER | MMU_THEAD_SHAREABLE // Read + Write + Global + Strong Order + Shareable

);

(STRONG_ORDER and SHAREABLE are here)

Why is Virtual Address set to 0?

Right now we’re doing Memory Protection for the Kernel, hence we set…

Virtual Address = Physical Address = Actual Address of System Memory

Later when we configure Virtual Memory for the Applications, we’ll see interesting values.

Next we protect the Interrupt Controller…

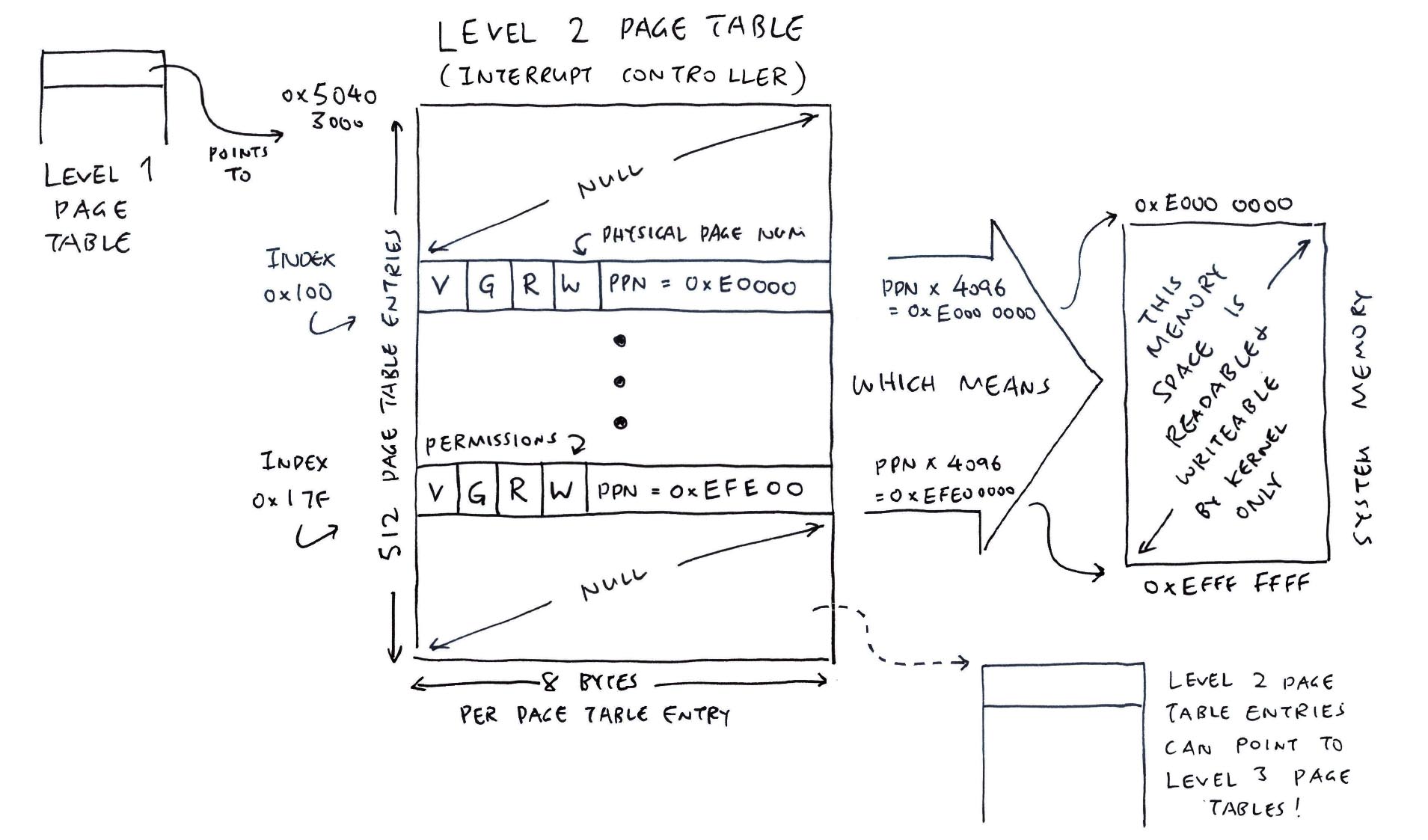

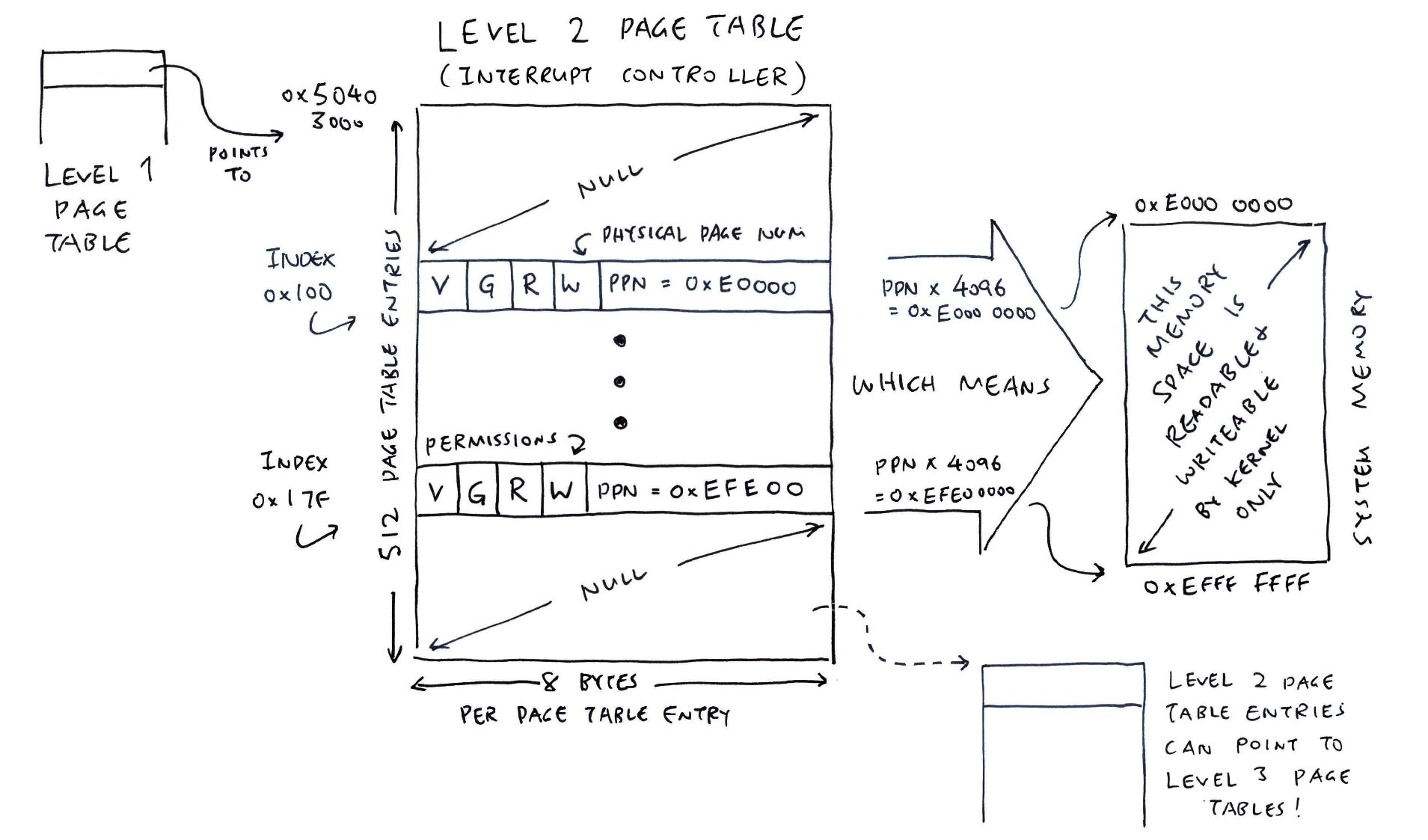

[ 2 MB per Medium Chunk ]

Our Interrupt Controller needs 256 MB of protection…

Surely a Level 1 Chunk (1 GB) is too wasteful?

| Region | Start Address | Size |
|---|---|---|
| Interrupt Controller | 0xE000_0000 | 0x1000_0000 (256 MB) |

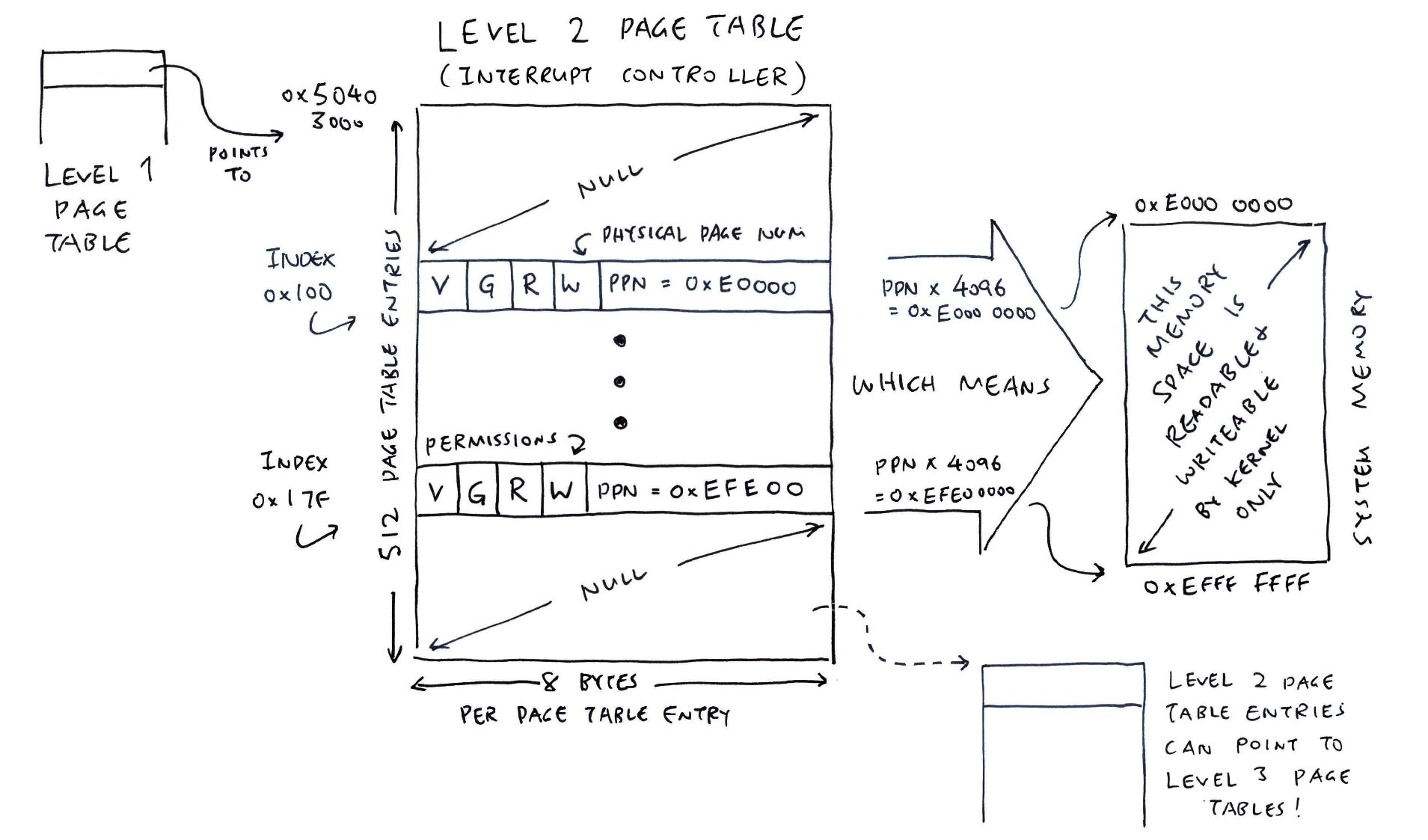

Yep that’s why Sv39 MMU gives us (medium-size) Level 2 Chunks of 2 MB!

For the Interrupt Controller, we need 128 Chunks of 2 MB.

Hence we create a Level 2 Page Table (also 4,096 bytes). And we populate 128 Entries (Index 0x100 to 0x17F)…

How did we get the Index of the Page Table Entry?

Our Interrupt Controller is at 0xE000_0000.

To compute the Index of the Level 2 Page Table Entry (PTE)…

Virtual Address: vaddr = 0xE000_0000

(For Now: Virtual Address = Actual Address)

Virtual Page Number: vpn

= vaddr >> 12

= 0xE0000

(4,096 bytes per Memory Page)

Level 2 PTE Index

= (vpn >> 9) & 0b1_1111_1111

= 0x100

(Extract Bits 9 to 17 to get Level 2 Index)

Do the same for 0xEFFF_FFFF, and we’ll get Index 0x17F.

Thus our Page Table Index runs from 0x100 to 0x17F.
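The same calculation can be written as a tiny C sketch. The helper names `pte_index` and `pte_count` are made up for illustration (NuttX has its own functions for this)…

```c
#include <assert.h>
#include <stdint.h>

// Hypothetical helper: Index (0 to 511) of the Page Table Entry
// that configures `vaddr` at the given Sv39 Level (1, 2 or 3)
static unsigned pte_index(uint64_t vaddr, int level) {
  assert(level >= 1 && level <= 3);
  uint64_t vpn = vaddr >> 12;        // 4,096 bytes per Memory Page
  unsigned shift = 9 * (3 - level);  // Level 1: 18, Level 2: 9, Level 3: 0
  return (unsigned)((vpn >> shift) & 0x1FF);  // Keep 9 bits
}

// Hypothetical helper: Number of entries needed at `level` to
// cover `size` bytes starting at `vaddr`. Assumes the region
// stays inside a single Page Table at that level.
static unsigned pte_count(uint64_t vaddr, uint64_t size, int level) {
  assert(size > 0);
  return pte_index(vaddr + size - 1, level) - pte_index(vaddr, level) + 1;
}
```

`pte_index(0xE0000000, 2)` gives 0x100, `pte_index(0xEFFFFFFF, 2)` gives 0x17F, and `pte_count(0xE0000000, 0x10000000, 2)` gives the 128 entries we expect.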

How to allocate the Level 2 Page Table?

In NuttX we do this: bl808_mm_init.c

// Number of Page Table Entries (8 bytes per entry)

#define PGT_INT_L2_SIZE (512) // Page Table Size is 4 KB

// Allocate Level 2 Page Table from `.pgtables` section

static size_t m_int_l2_pgtable[PGT_INT_L2_SIZE]

locate_data(".pgtables");

(.pgtables comes from the Linker Script)

Then GCC Linker respectfully allocates our Level 2 Page Table at RAM Address 0x5040 3000.

How to populate the 128 Page Table Entries?

Just do this in NuttX: bl808_mm_init.c

// Map the Interrupt Controller in Level 2 Page Table

mmu_ln_map_region(

2, // Level 2

PGT_INT_L2_PBASE, // 0x5040 3000 (Page Table Address)

0xE0000000, // Physical Address of Interrupt Controller

0xE0000000, // Virtual Address of Interrupt Controller

0x10000000, // 256 MB (Size)

PTE_R | PTE_W | PTE_G | MMU_THEAD_STRONG_ORDER | MMU_THEAD_SHAREABLE // Read + Write + Global + Strong Order + Shareable

);

(STRONG_ORDER and SHAREABLE are here)

(mmu_ln_map_region is defined here)

We’re not done yet! Next we connect the Levels…

We’re done with the Level 2 Page Table for our Interrupt Controller…

But Level 2 should talk back to Level 1 right?

| Region | Start Address | Size |
|---|---|---|
| Interrupt Controller | 0xE000_0000 | 0x1000_0000 (256 MB) |

Exactly! Watch how we connect our Level 2 Page Table back to Level 1…

3 is the Level 1 Index for Interrupt Controller 0xE000_0000 because…

Virtual Address: vaddr = 0xE000_0000

(For Now: Virtual Address = Actual Address)

Virtual Page Number: vpn

= vaddr >> 12

= 0xE0000

(4,096 bytes per Memory Page)

Level 1 PTE Index

= (vpn >> 18) & 0b1_1111_1111

= 3

(Extract Bits 18 to 26 to get Level 1 Index)

Why “NO RWX”?

When we set the Read, Write and Execute Bits to 0…

Sv39 MMU interprets the PPN (Physical Page Number) as a Pointer to Level 2 Page Table. That’s how we connect Level 1 to Level 2!

(Remember: Actual Address = PPN * 4,096)
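Here is a rough sketch of that rule: how an entry could be classified as a Pointer or a Leaf from its R, W and X bits. The names `pte_kind` and `pte_next_table` are hypothetical, and the sketch skips the reserved encodings (like W without R) that a real MMU would reject…

```c
#include <assert.h>
#include <stdint.h>

#define PTE_V (1ULL << 0)  // Valid
#define PTE_R (1ULL << 1)  // Read
#define PTE_W (1ULL << 2)  // Write
#define PTE_X (1ULL << 3)  // Execute

typedef enum { PTE_INVALID, PTE_POINTER, PTE_LEAF } pte_kind_t;

// Hypothetical helper: Classify a Page Table Entry
static pte_kind_t pte_kind(uint64_t pte) {
  if (!(pte & PTE_V)) { return PTE_INVALID; }
  // Any of R, W, X set: The entry maps memory directly
  if (pte & (PTE_R | PTE_W | PTE_X)) { return PTE_LEAF; }
  // "NO RWX": The PPN points to the next Page Table
  return PTE_POINTER;
}

// Hypothetical helper: Address of the next-level Page Table
// (Actual Address = PPN * 4,096)
static uint64_t pte_next_table(uint64_t pte) {
  assert(pte_kind(pte) == PTE_POINTER);
  uint64_t ppn = (pte >> 10) & ((1ULL << 44) - 1);  // 44-bit PPN
  return ppn << 12;
}
```

An entry with PPN 0x50403 and only the V and G bits set is a Pointer, and `pte_next_table` returns 0x5040_3000 for it.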

In NuttX, we write this to connect Level 1 with Level 2: bl808_mm_init.c

// Connect the L1 and L2 Page Tables for Interrupt Controller

mmu_ln_setentry(

1, // Level 1

PGT_L1_VBASE, // 0x5040 7000 (L1 Page Table Address)

PGT_INT_L2_PBASE, // 0x5040 3000 (L2 Page Table Address)

0xE0000000, // Virtual Address of Interrupt Controller

PTE_G // Global Only

);

(mmu_ln_setentry is defined here)

We’re done protecting the Interrupt Controller with Level 1 AND Level 2 Page Tables!

Wait wasn’t there something already in the Level 1 Page Table?

| Region | Start Address | Size |
|---|---|---|
| Memory-Mapped I/O | 0x0000_0000 | 0x4000_0000 (1 GB) |
| Interrupt Controller | 0xE000_0000 | 0x1000_0000 (256 MB) |

Oh yeah: I/O Memory. When we bake everything together, things will look more complicated (and there’s more!)…

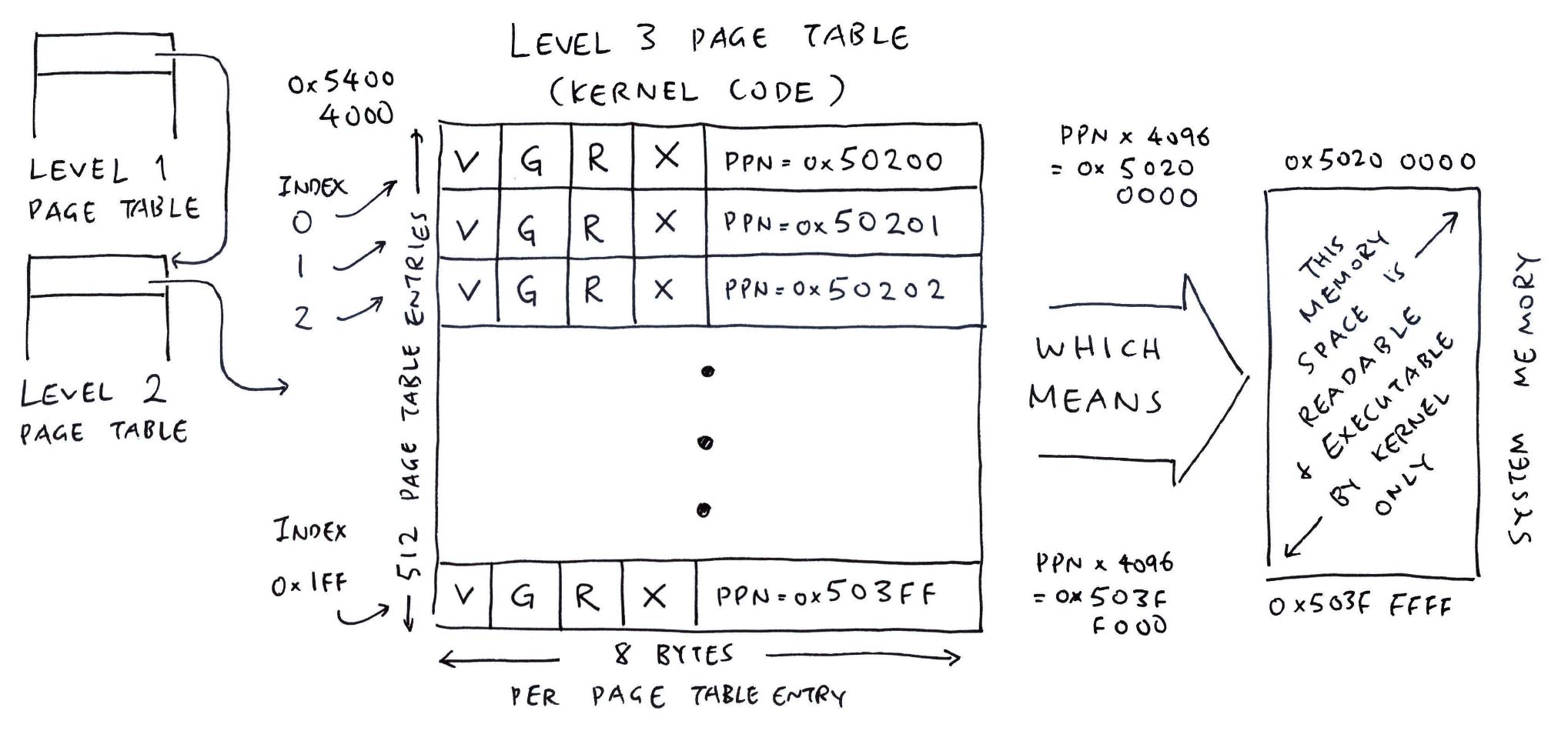

[ 4 KB per Smaller Chunk ]

Level 2 Chunks (2 MB) are still mighty big… Is there anything smaller?

Yep we have smaller (bite-size) Level 3 Chunks of 4 KB each.

We create a Level 3 Page Table for the Kernel Code. And fill it (to the brim) with 4 KB Chunks…

| Region | Start Address | Size |
|---|---|---|
| Kernel Code (RAM) | 0x5020_0000 | 0x20_0000 (2 MB) |
| Kernel Data (RAM) | 0x5040_0000 | 0x20_0000 (2 MB) |

(Kernel Data has a similar Level 3 Page Table)

How do we cook up a Level 3 Index?

Suppose we’re configuring address 0x5020_1000. To compute the Index of the Level 3 Page Table Entry (PTE)…

Virtual Address: vaddr = 0x5020_1000

(For Now: Virtual Address = Actual Address)

Virtual Page Number: vpn

= vaddr >> 12

= 0x50201

(4,096 bytes per Memory Page)

Level 3 PTE Index

= vpn & 0b1_1111_1111

= 1

(Extract Bits 0 to 8 to get Level 3 Index)

Thus address 0x5020_1000 is configured by Index 1 of the Level 3 Page Table.

To populate the Level 3 Page Table, our code looks a little different: bl808_mm_init.c

// Number of Page Table Entries (8 bytes per entry)

// for Kernel Code and Data

#define PGT_L3_SIZE (1024) // 2 Page Tables (4 KB each)

// Allocate Level 3 Page Table from `.pgtables` section

// for Kernel Code and Data

static size_t m_l3_pgtable[PGT_L3_SIZE]

locate_data(".pgtables");

// Map the Kernel Code in L2 and L3 Page Tables

map_region(

KFLASH_START, // 0x5020 0000 (Physical Address)

KFLASH_START, // 0x5020 0000 (Virtual Address)

KFLASH_SIZE, // 0x20 0000 (Size is 2 MB)

PTE_R | PTE_X | PTE_G // Read + Execute + Global

);

// Map the Kernel Data in L2 and L3 Page Tables

map_region(

KSRAM_START, // 0x5040 0000 (Physical Address)

KSRAM_START, // 0x5040 0000 (Virtual Address)

KSRAM_SIZE, // 0x20 0000 (Size is 2 MB)

PTE_R | PTE_W | PTE_G // Read + Write + Global

);

That’s because map_region calls a Slab Allocator to manage the Level 3 Page Table Entries.

But internally it calls the same old functions: mmu_ln_map_region and mmu_ln_setentry

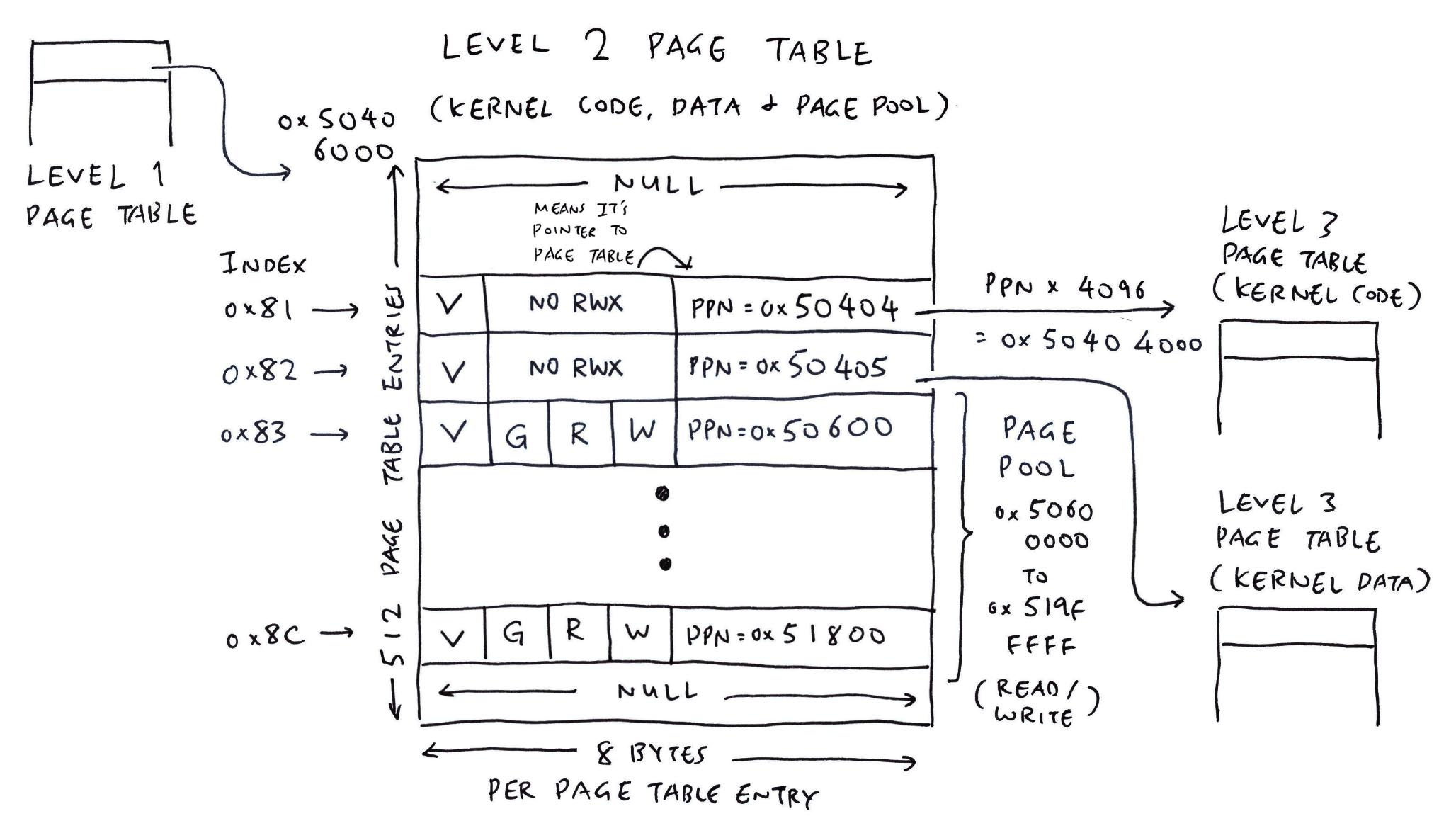

Level 3 will talk back to Level 2 right?

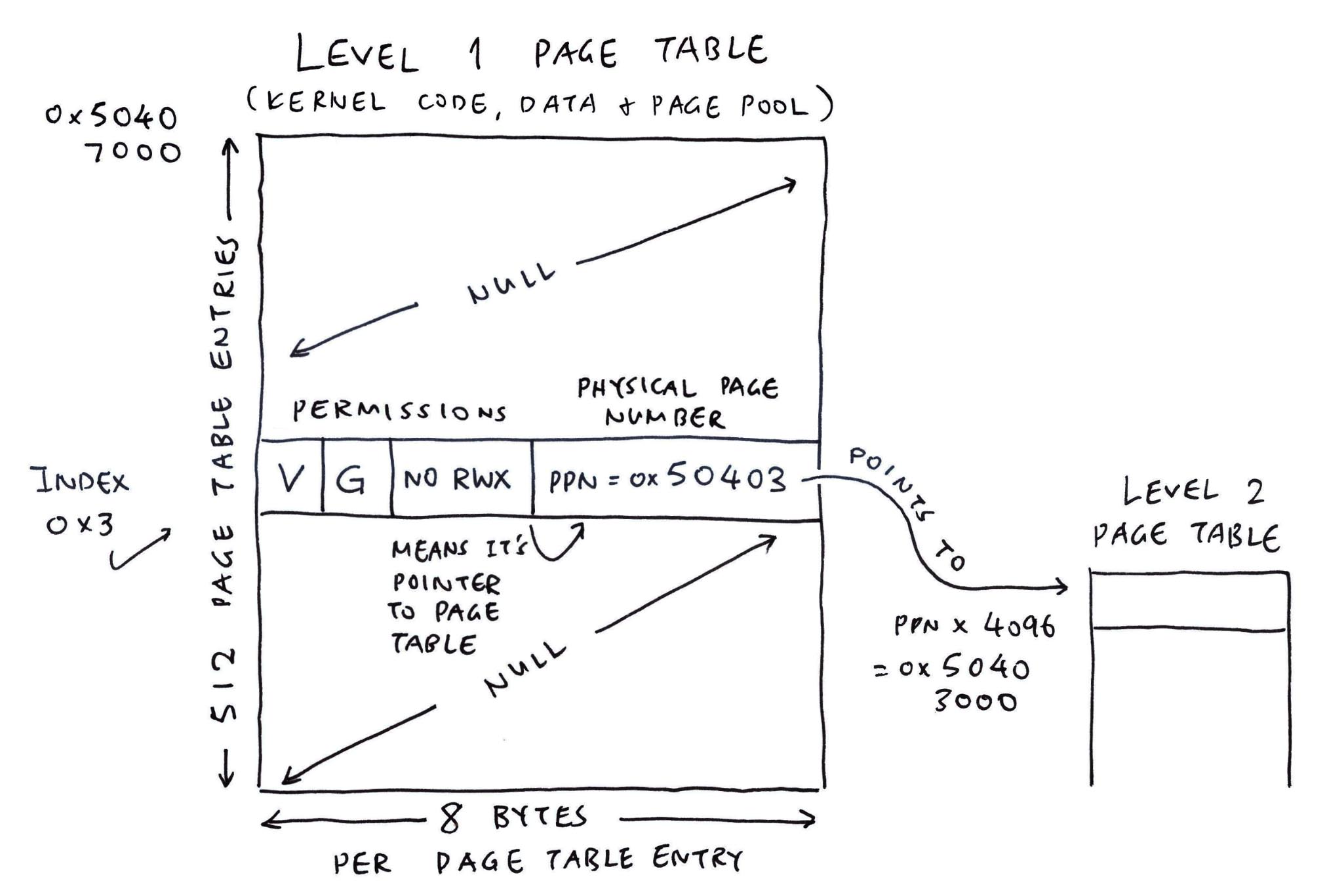

Correct! Finally we create a Level 2 Page Table for Kernel Code and Data…

| Region | Start Address | Size |
|---|---|---|
| Kernel Code (RAM) | 0x5020_0000 | 0x0020_0000 (2 MB) |
| Kernel Data (RAM) | 0x5040_0000 | 0x0020_0000 (2 MB) |
| Page Pool (RAM) | 0x5060_0000 | 0x0140_0000 (20 MB) |

(Not to be confused with the earlier Level 2 Page Table for Interrupt Controller)

And we connect the Level 2 and 3 Page Tables…

Page Pool goes into the same Level 2 Page Table?

Yep, that’s because the Page Pool contains Medium-Size Chunks (2 MB) of goodies anyway.

(Page Pool will be allocated to Applications in a while)

This is how we populate the Level 2 Entries for the Page Pool: bl808_mm_init.c

// Map the Page Pool in Level 2 Page Table

mmu_ln_map_region(

2, // Level 2

PGT_L2_VBASE, // 0x5040 6000 (Level 2 Page Table)

PGPOOL_START, // 0x5060 0000 (Physical Address of Page Pool)

PGPOOL_START, // 0x5060 0000 (Virtual Address of Page Pool)

PGPOOL_SIZE, // 0x0140_0000 (Size is 20 MB)

PTE_R | PTE_W | PTE_G // Read + Write + Global

);

(mmu_ln_map_region is defined here)

Did we forget something?

Oh yeah, remember to connect the Level 1 and 2 Page Tables: bl808_mm_init.c

// Connect the L1 and L2 Page Tables

// for Kernel Code, Data and Page Pool

mmu_ln_setentry(

1, // Level 1

PGT_L1_VBASE, // 0x5040 7000 (Level 1 Page Table)

PGT_L2_PBASE, // 0x5040 6000 (Level 2 Page Table)

KFLASH_START, // 0x5020 0000 (Kernel Code Address)

PTE_G // Global Only

);

(mmu_ln_setentry is defined here)

Our Level 1 Page Table becomes chock full of toppings…

| Index | Permissions | Physical Page Number |
|---|---|---|
| 0 | VGRWSO+S | 0x00000 (I/O Memory) |
| 1 | VG (Pointer) | 0x50406 (L2 Kernel Code & Data) |
| 3 | VG (Pointer) | 0x50403 (L2 Interrupt Controller) |

But it tastes very similar to our Kernel Memory Map!

| Region | Start Address | Size |
|---|---|---|
| I/O Memory | 0x0000_0000 | 0x4000_0000 (1 GB) |
| RAM | 0x5020_0000 | 0x0180_0000 (24 MB) |
| Interrupt Controller | 0xE000_0000 | 0x1000_0000 (256 MB) |

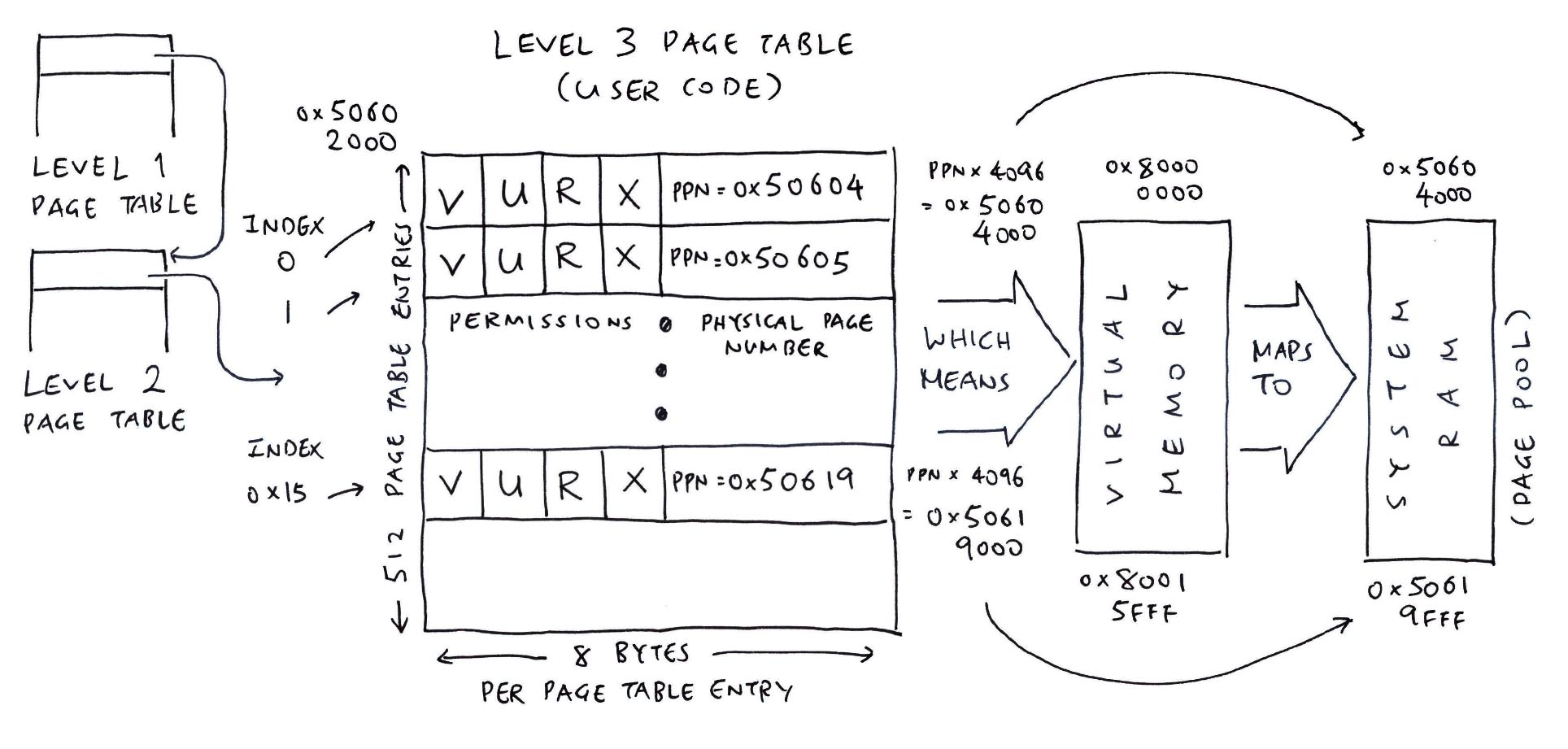

Now we switch course to Applications and Virtual Memory…

(Who calls mmu_ln_map_region and mmu_ln_setentry at startup)

Earlier we talked about Sv39 MMU and Virtual Memory…

Allow Applications to access chunks of “Imaginary Memory” at Exotic Addresses (0x8000_0000!)

But in reality: They’re System RAM recycled from boring old addresses (like 0x5060_4000)

Let’s make some magic!

What are the “Exotic Addresses” for our Application?

NuttX will map the Application Code (Text), Data and Heap at these Virtual Addresses: nsh/defconfig

CONFIG_ARCH_TEXT_VBASE=0x80000000

CONFIG_ARCH_TEXT_NPAGES=128

CONFIG_ARCH_DATA_VBASE=0x80100000

CONFIG_ARCH_DATA_NPAGES=128

CONFIG_ARCH_HEAP_VBASE=0x80200000

CONFIG_ARCH_HEAP_NPAGES=128

Which says…

| Region | Start Address | Size |
|---|---|---|
| User Code | 0x8000_0000 | (Max 128 Pages) |
| User Data | 0x8010_0000 | (Max 128 Pages) |
| User Heap | 0x8020_0000 | (Max 128 Pages) |

(Each Page is 4 KB)

“User” refers to RISC-V User Mode, which is less privileged than our Kernel running in Supervisor Mode.
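How big is “Max 128 Pages”? A quick sketch of the arithmetic, using the values from nsh/defconfig above. The macro and function names are made up for this example…

```c
#include <assert.h>
#include <stdint.h>

#define MMU_PAGE_SIZE 4096ULL  // Each Page is 4 KB

// Values copied from nsh/defconfig
#define TEXT_VBASE 0x80000000ULL
#define DATA_VBASE 0x80100000ULL
#define HEAP_VBASE 0x80200000ULL
#define NPAGES     128

// Hypothetical helper: Last Virtual Address of a region
// that starts at `vbase` and spans `npages` pages
static uint64_t region_end(uint64_t vbase, unsigned npages) {
  assert(npages > 0);
  return vbase + npages * MMU_PAGE_SIZE - 1;
}
```

128 Pages of 4 KB is 512 KB. So the User Code can grow up to `region_end(TEXT_VBASE, NPAGES)` = 0x8007_FFFF, which stays clear of the User Data at 0x8010_0000.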

And what are the boring old Physical Addresses?

NuttX will map the Virtual Addresses above to the Physical Addresses from…

The Kernel Page Pool that we saw earlier! The Pooled Pages will be dished out dynamically to Applications as they run.

Will Applications see the I/O Memory, Kernel RAM, Interrupt Controller?

Nope! That’s the beauty of an MMU: We control everything that the Application can meddle with!

Our Application will see only the assigned Virtual Addresses, not the actual Physical Addresses used by the Kernel.

We watch NuttX do its magic…

Our Application (NuttX Shell) requires 22 Pages of Virtual Memory for its User Code.

NuttX populates the Level 3 Page Table for the User Code like so…

Something smells special… What’s this “U” Permission?

The “U” User Permission says that this Page Table Entry is accessible by our Application. (Which runs in RISC-V User Mode)

Note that the Virtual Address 0x8000_0000 now maps to a different Physical Address 0x5060_4000.

(Which comes from the Kernel Page Pool)

That’s the tasty goodness of Virtual Memory!

But where is Virtual Address 0x8000_0000 defined?

Virtual Addresses are propagated from the Level 1 Page Table, as we’ll soon see.

Anything else in the Level 3 Page Table?

Page Table Entries for the User Data will appear in the same Level 3 Page Table.

We move up to Level 2…

(See the NuttX Virtual Memory Log)

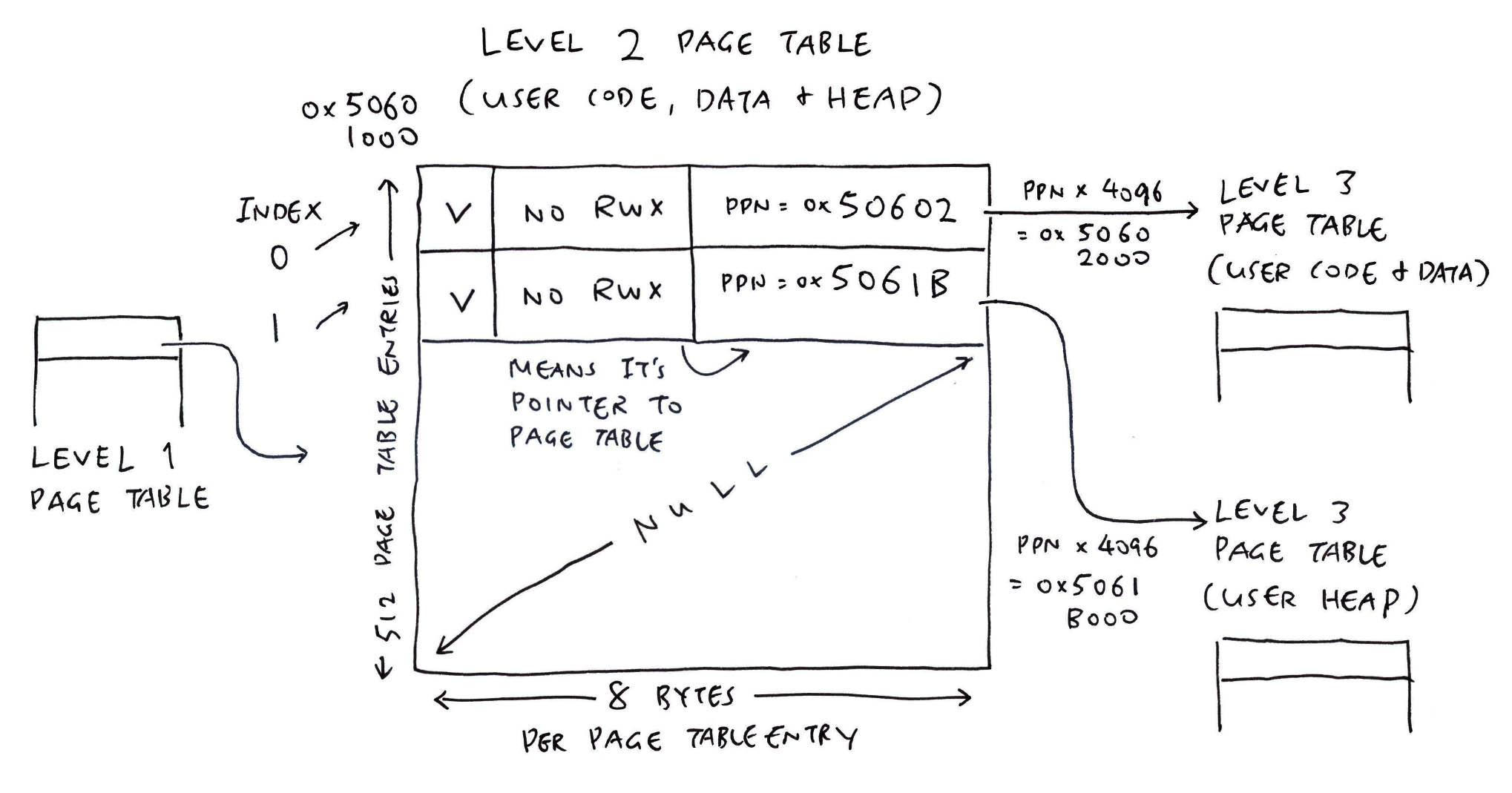

NuttX populates the User Level 2 Page Table (pic above) with the Physical Page Numbers (PPN) of the…

Level 3 Page Table for User Code and Data

(From previous section)

Level 3 Page Table for User Heap

(To make malloc work)

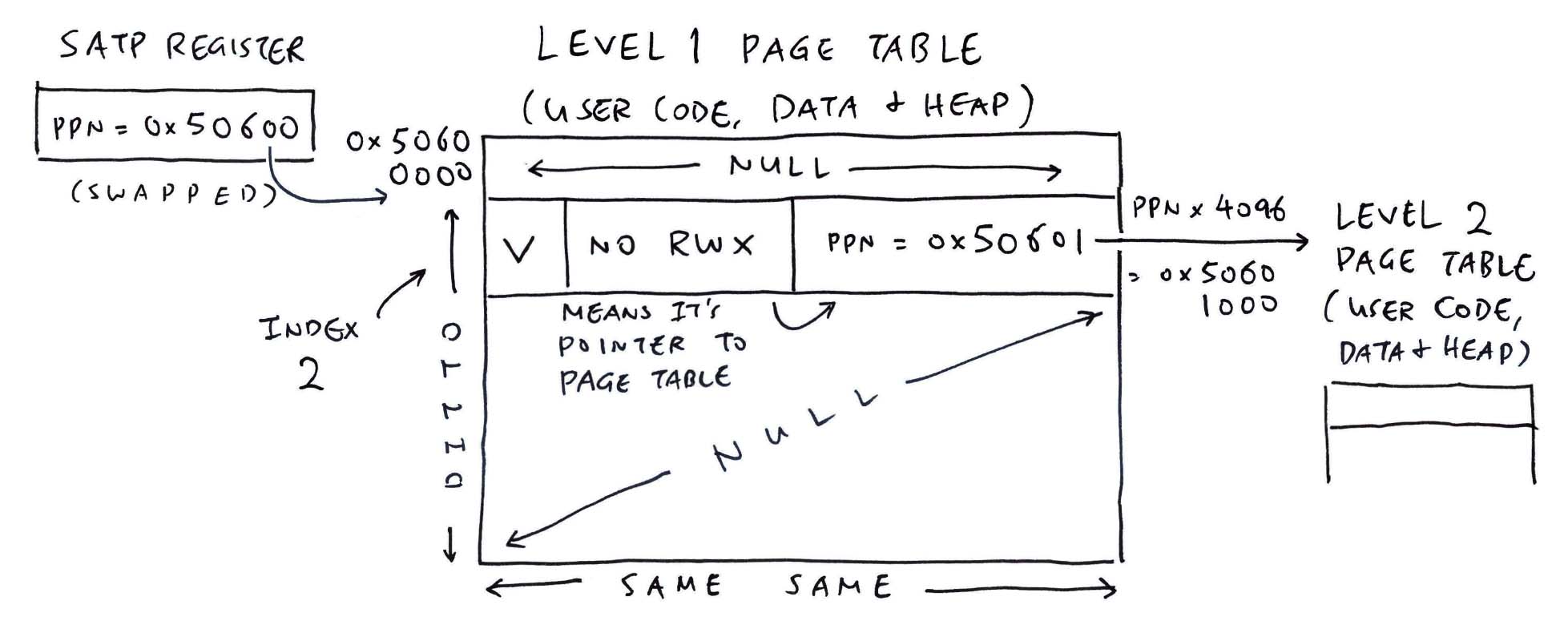

Ultimately we track back to User Level 1 Page Table…

Which points PPN to the User Level 2 Page Table.

And that’s how User Levels 1, 2 and 3 are connected!

Each Application will have its own set of User Page Tables for…

| Region | Start Address | Size |
|---|---|---|
| User Code | 0x8000_0000 | (Max 128 Pages) |
| User Data | 0x8010_0000 | (Max 128 Pages) |
| User Heap | 0x8020_0000 | (Max 128 Pages) |

(Each Page is 4 KB)

Once again: Where is Virtual Address 0x8000_0000 defined?

From the pic above, we see that the Page Table Entry has Index 2.

Recall that each Entry in the Level 1 Page Table configures 1 GB of Virtual Memory. (0x4000_0000 Bytes)

Since the Entry Index is 2, then the Virtual Address must be 0x8000_0000. Mystery solved!

(More about Address Translation)

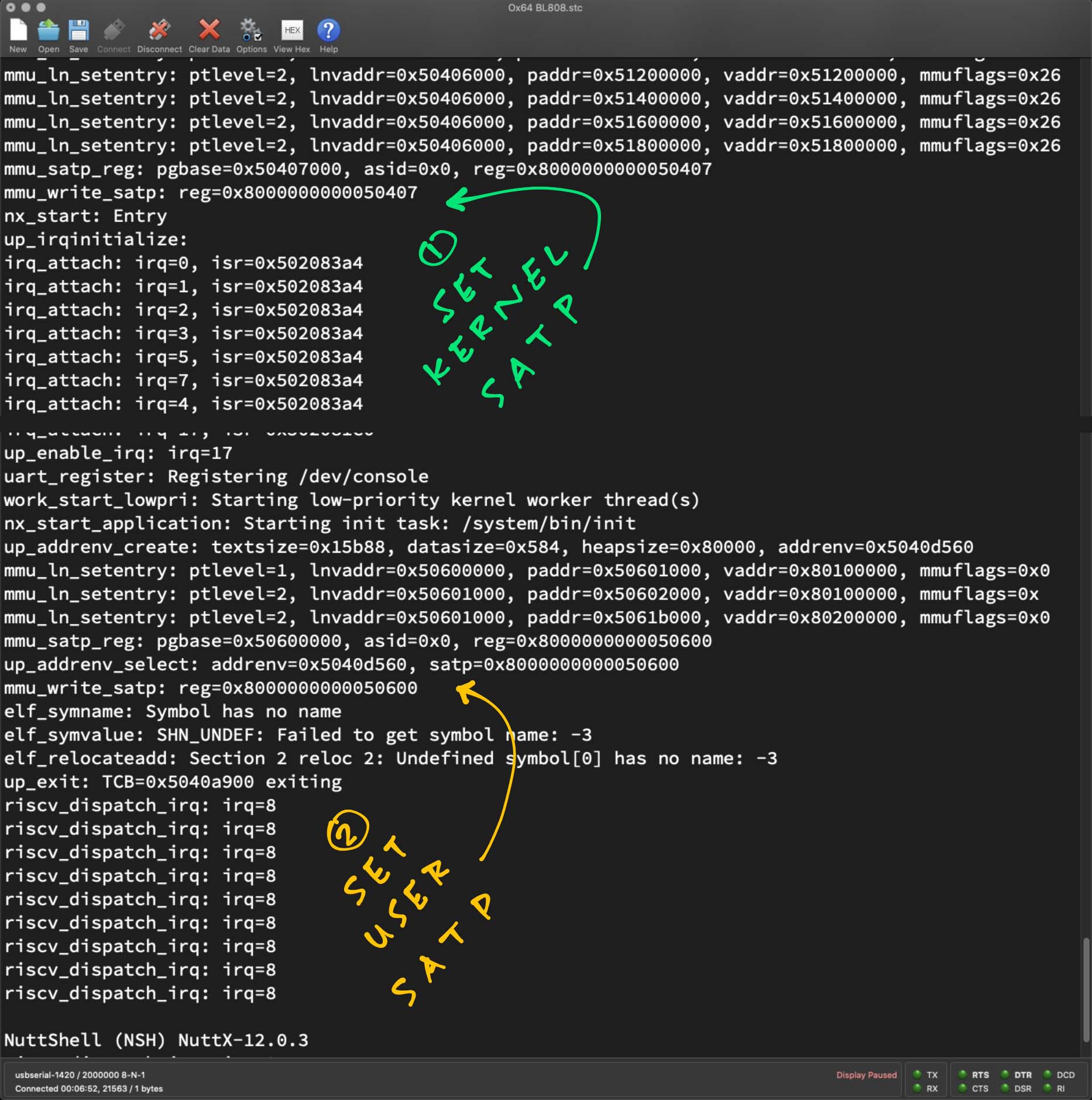

There’s something odd about the SATP Register…

Yeah the SATP Register has changed! We investigate…

(Who populates the User Page Tables)

(See the NuttX Virtual Memory Log)

SATP Register looks different from the earlier one in the Kernel…

Are there Multiple SATP Registers?

We saw two different SATP Registers, each pointing to a different Level 1 Page Table…

But actually there’s only one SATP Register!

(SATP is for Supervisor Address Translation and Protection)

Here’s the secret: NuttX uses this nifty recipe to cook up the illusion of Multiple SATP Registers…

Earlier we wrote this to set the SATP Register: bl808_mm_init.c

// Set the SATP Register to the

// Physical Page Number of Level 1 Page Table.

// Set SATP Mode to Sv39.

mmu_enable(

g_kernel_pgt_pbase, // Page Table Address

0 // Set Address Space ID to 0

);

When we switch the context from Kernel to Application: We swap the value of the SATP Register… Which points to a Different Level 1 Page Table!

The Address Space ID (stored in SATP Register) can also change. It’s a handy shortcut that tells us which Level 1 Page Table (Address Space) is in effect.

(NuttX doesn’t seem to use the Address Space ID: It stays at 0 in the log below)

We see NuttX swapping the SATP Register as it starts an Application (NuttX Shell)…

// At Startup: NuttX points the SATP Register to

// Kernel Level 1 Page Table (0x5040 7000)

mmu_satp_reg:

pgbase=0x50407000, asid=0x0, reg=0x8000000000050407

mmu_write_satp:

reg=0x8000000000050407

nx_start: Entry

...

// Later: NuttX points the SATP Register to

// User Level 1 Page Table (0x5060 0000)

Starting init task: /system/bin/init

mmu_satp_reg:

pgbase=0x50600000, asid=0x0, reg=0x8000000000050600

up_addrenv_select:

addrenv=0x5040d560, satp=0x8000000000050600

mmu_write_satp:

reg=0x8000000000050600

(SATP Register begins with 0x8 to enable Sv39)
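The `reg` values in the log can be reproduced by hand. Below is a minimal sketch that assumes the SATP layout from the RISC-V Privileged Spec (Mode in Bits 60 to 63, Address Space ID in Bits 44 to 59, PPN in Bits 0 to 43). `make_satp` is a hypothetical name, not the NuttX function…

```c
#include <assert.h>
#include <stdint.h>

#define SATP_MODE_SV39 8ULL  // Mode 8 selects Sv39

// Hypothetical helper: Compose the SATP value from the address
// of the Level 1 Page Table and the Address Space ID
static uint64_t make_satp(uint64_t pgbase, uint16_t asid) {
  assert((pgbase & 0xFFF) == 0);  // Page Table is aligned to 4 KB
  uint64_t ppn = pgbase >> 12;    // PPN = Address / 4,096
  return (SATP_MODE_SV39 << 60) | ((uint64_t)asid << 44) | ppn;
}
```

`make_satp(0x50407000, 0)` gives 0x8000000000050407 (Kernel) and `make_satp(0x50600000, 0)` gives 0x8000000000050600 (Application), matching the log.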

Always remember to Flush the MMU Cache when swapping the SATP Register (and switching Page Tables)…

So indeed we can have “Multiple” SATP Registers. Sweet!

Ah there’s a catch… Remember the “G” Global Mapping Permission from earlier?

This means that the Page Table Entry will be effective across ALL Address Spaces! Even in our Applications!

Huh? Our Applications can meddle with the I/O Memory?

Nope they can’t, because the “U” User Permission is denied. Therefore we’re all safe and well protected!
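The check that saves us can be sketched in a few lines of C. This is a simplification of what the MMU does for an access from User Mode (it ignores the Accessed and Dirty bits), and `user_may_access` is a hypothetical name…

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PTE_V (1ULL << 0)  // Valid
#define PTE_R (1ULL << 1)  // Read
#define PTE_W (1ULL << 2)  // Write
#define PTE_X (1ULL << 3)  // Execute
#define PTE_U (1ULL << 4)  // User
#define PTE_G (1ULL << 5)  // Global

// Hypothetical helper: Would an access from User Mode succeed
// on this Page Table Entry? `access` is PTE_R, PTE_W or PTE_X.
static bool user_may_access(uint64_t pte, uint64_t access) {
  assert(access == PTE_R || access == PTE_W || access == PTE_X);
  if (!(pte & PTE_V)) { return false; }  // Not a valid entry
  if (!(pte & PTE_U)) { return false; }  // Kernel only: Page Fault
  return (pte & access) != 0;            // Need the matching permission
}
```

The I/O Memory entry has V, G, R and W but no U, so `user_may_access` returns false for it, no matter that the mapping is Global.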

Can NuttX Kernel access the Virtual Memory of NuttX Apps?

Yep! Here’s how…

I hope this article has been a tasty treat for understanding the inner workings of…

Memory Protection

(For our Kernel)

Virtual Memory

(For the Applications)

And the Sv39 Memory Management Unit

…As we documented everything that happens when Apache NuttX RTOS boots on Ox64 SBC!

(Actually we wrote this article to fix a Troubling Roadblock for Ox64 NuttX)

We’ll do much more for NuttX on Ox64 BL808, stay tuned for updates!

Many Thanks to my GitHub Sponsors (and the awesome NuttX Community) for supporting my work! This article wouldn’t have been possible without your support.

Got a question, comment or suggestion? Create an Issue or submit a Pull Request here…

Ox64 BL808 crashes with a Page Fault when we run getprime then hello…

nsh> getprime

getprime took 0 msec

nsh> hello

riscv_exception: EXCEPTION: Store/AMO page fault.

MCAUSE: 0f,

EPC: 50208fcc,

MTVAL: 80200000

The Invalid Address 0x8020_0000 is the Virtual Address of the Dynamic Heap (malloc) for the hello app: boards/risc-v/bl808/ox64/configs/nsh/defconfig

## NuttX Config for Ox64

CONFIG_ARCH_DATA_NPAGES=128

CONFIG_ARCH_DATA_VBASE=0x80100000

CONFIG_ARCH_HEAP_NPAGES=128

CONFIG_ARCH_HEAP_VBASE=0x80200000

CONFIG_ARCH_TEXT_NPAGES=128

CONFIG_ARCH_TEXT_VBASE=0x80000000

We discover that T-Head C906 MMU is incorrectly accessing the MMU Page Tables of the Previous Process (getprime) while starting the New Process (hello)…

## Virtual Address 0x8020_0000 is OK for Previous Process

nsh> getprime

Virtual Address 0x80200000 maps to Physical Address 0x506a6000

*0x506a6000 is 0

*0x80200000 is 0

## Virtual Address 0x8020_0000 crashes for New Process

nsh> hello

Virtual Address 0x80200000 maps to Physical Address 0x506a4000

*0x506a4000 is 0

riscv_exception: EXCEPTION: Load page fault. MCAUSE: 000000000000000d, EPC: 000000005020c020, MTVAL: 0000000080200000

Which means that the T-Head C906 MMU Cache needs to be flushed. This should be done right after we swap the MMU SATP Register.

To fix the problem, we refer to the T-Head Errata for Linux Kernel…

// th.dcache.ipa rs1 (invalidate, physical address)

// | 31 - 25 | 24 - 20 | 19 - 15 | 14 - 12 | 11 - 7 | 6 - 0 |

// 0000001 01010 rs1 000 00000 0001011

// th.dcache.iva rs1 (invalidate, virtual address)

// 0000001 00110 rs1 000 00000 0001011

// th.dcache.cpa rs1 (clean, physical address)

// | 31 - 25 | 24 - 20 | 19 - 15 | 14 - 12 | 11 - 7 | 6 - 0 |

// 0000001 01001 rs1 000 00000 0001011

// th.dcache.cva rs1 (clean, virtual address)

// 0000001 00101 rs1 000 00000 0001011

// th.dcache.cipa rs1 (clean then invalidate, physical address)

// | 31 - 25 | 24 - 20 | 19 - 15 | 14 - 12 | 11 - 7 | 6 - 0 |

// 0000001 01011 rs1 000 00000 0001011

// th.dcache.civa rs1 (clean then invalidate, virtual address)

// 0000001 00111 rs1 000 00000 0001011

// th.sync.s (make sure all cache operations finished)

// | 31 - 25 | 24 - 20 | 19 - 15 | 14 - 12 | 11 - 7 | 6 - 0 |

// 0000000 11001 00000 000 00000 0001011

#define THEAD_INVAL_A0 ".long 0x02a5000b"

#define THEAD_CLEAN_A0 ".long 0x0295000b"

#define THEAD_FLUSH_A0 ".long 0x02b5000b"

#define THEAD_SYNC_S ".long 0x0190000b"

#define THEAD_CMO_OP(_op, _start, _size, _cachesize) \

asm volatile( \

"mv a0, %1\n\t" \

"j 2f\n\t" \

"3:\n\t" \

THEAD_##_op##_A0 "\n\t" \

"add a0, a0, %0\n\t" \

"2:\n\t" \

"bltu a0, %2, 3b\n\t" \

THEAD_SYNC_S \

: : "r"(_cachesize), \

"r"((unsigned long)(_start) & ~((_cachesize) - 1UL)), \

"r"((unsigned long)(_start) + (_size)) \

: "a0")

For NuttX: We flush the MMU Cache whenever we swap the MMU SATP Register to the New Page Tables.

We execute 2 RISC-V Instructions that are specific to T-Head C906…

DCACHE.IALL: Invalidate all Page Table Entries in the D-Cache

(From C906 User Manual, Page 551)

SYNC.S: Ensure that all Cache Operations are completed

(Undocumented, comes from the T-Head Errata above)

(Looks similar to SYNC and SYNC.I from C906 User Manual, Page 559)
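Where do the `.long` values come from? They can be derived from the bit layout quoted in the T-Head Errata above. Here is a small sketch with a hypothetical encoder `thead_encode`. It assumes that DCACHE.IALL follows the same layout as the other instructions, which is our guess from decoding its opcode…

```c
#include <assert.h>
#include <stdint.h>

// Hypothetical helper: Encode a T-Head cache instruction from
// the fields in the errata layout: Bits 31-25, Bits 24-20 and
// Bits 19-15 (register rs1). Bits 14-7 are zero and
// Bits 6-0 are always 0001011.
static uint32_t thead_encode(uint32_t bits_31_25,
                             uint32_t bits_24_20,
                             uint32_t rs1) {
  assert(bits_31_25 < 128 && bits_24_20 < 32 && rs1 < 32);
  return (bits_31_25 << 25) | (bits_24_20 << 20) | (rs1 << 15) | 0x0B;
}
```

Register a0 is x10, so `thead_encode(0x01, 0x0A, 10)` gives 0x02a5000b (THEAD_INVAL_A0). And `thead_encode(0x00, 0x19, 0)` gives 0x0190000b, which is SYNC.S.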

This is how we Flush the MMU Cache for T-Head C906: arch/risc-v/src/bl808/bl808_mm_init.c

// Flush the MMU Cache for T-Head C906. Called by mmu_write_satp() after

// updating the MMU SATP Register, when swapping MMU Page Tables.

// This operation executes RISC-V Instructions that are specific to

// T-Head C906.

void weak_function mmu_flush_cache(void) {

__asm__ __volatile__ (

// DCACHE.IALL: Invalidate all Page Table Entries in the D-Cache

".long 0x0020000b\n"

// SYNC.S: Ensure that all Cache Operations are completed

".long 0x0190000b\n"

);

}

mmu_flush_cache is called by mmu_write_satp, whenever the MMU SATP Register is updated (and the MMU Page Tables are swapped): arch/risc-v/src/common/riscv_mmu.h

// Update the MMU SATP Register for swapping MMU Page Tables

static inline void mmu_write_satp(uintptr_t reg) {

__asm__ __volatile__ (

"csrw satp, %0\n"

"sfence.vma x0, x0\n"

"fence rw, rw\n"

"fence.i\n"

:

: "rK" (reg)

: "memory"

);

// Flush the MMU Cache if needed (T-Head C906)

if (mmu_flush_cache != NULL) {

mmu_flush_cache();

}

}

With this fix, the hello app now accesses the Heap Memory correctly…

## Virtual Address 0x8020_0000 is OK for Previous Process

nsh> getprime

Virtual Address 0x80200000 maps to Physical Address 0x506a6000

*0x506a6000 is 0

*0x80200000 is 0

## Virtual Address 0x8020_0000 is OK for New Process

nsh> hello

Virtual Address 0x80200000 maps to Physical Address 0x506a4000

*0x506a4000 is 0

*0x80200000 is 0

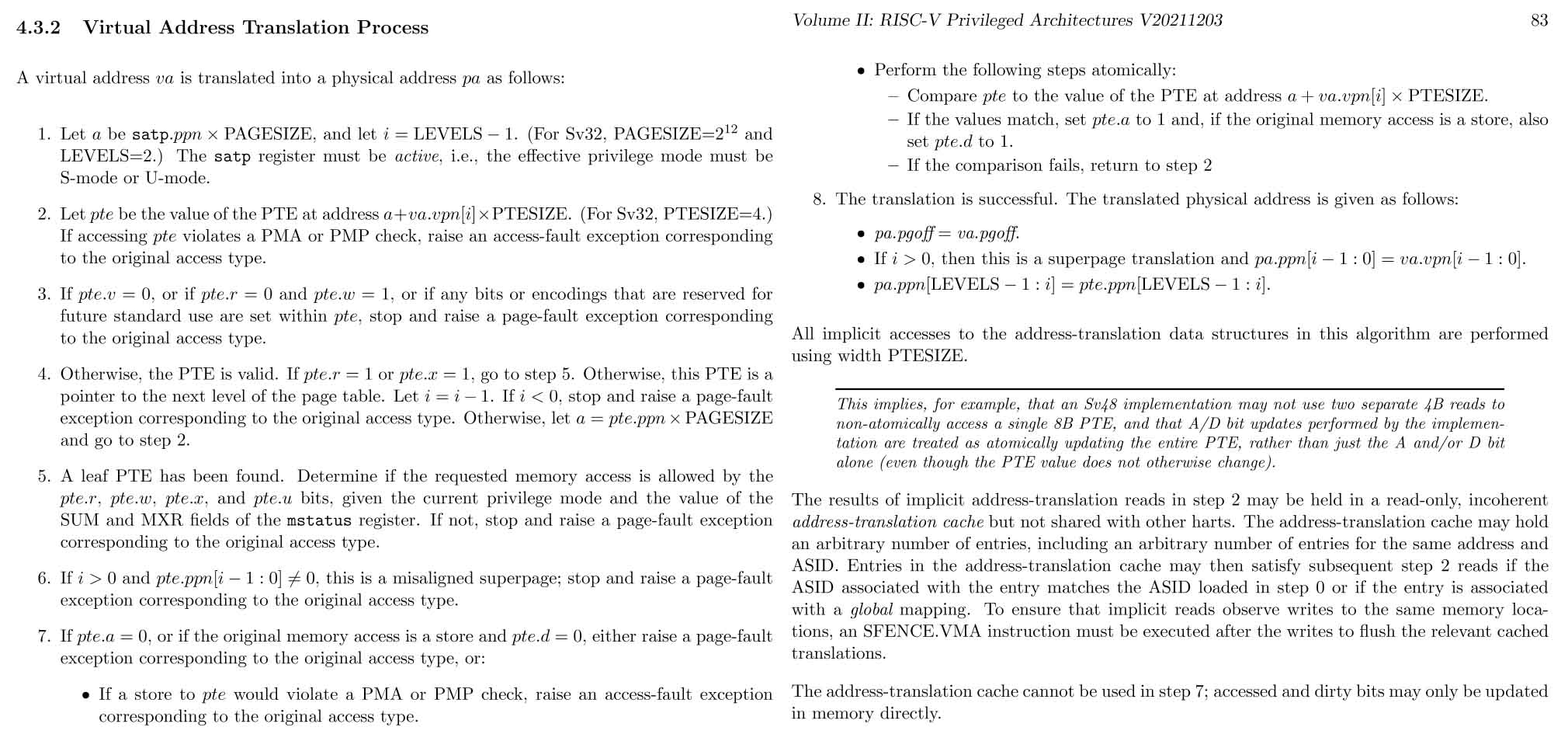

Virtual Address Translation Process (Page 82)

How does Sv39 MMU translate a Virtual Address to Physical Address?

Sv39 MMU translates a Virtual Address to Physical Address by traversing the Page Tables as described in…

“RISC-V ISA: Privileged Architectures” (Page 82)

Section 4.3.2: “Virtual Address Translation Process”

(Pic above)

For Sv39 MMU: The parameters are…

PAGESIZE = 4,096

LEVELS = 3

PTESIZE = 8

vpn[i] and ppn[i] refer to these Virtual and Physical Address Fields (Page 85)…

What if the Address Translation fails?

The algo above says that Sv39 MMU will trigger a Page Fault. (RISC-V Exception)

Which is super handy for implementing Memory Paging.
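To get a feel for the algo, here is a toy software version of the Page Table walk. It’s only a sketch: Physical Memory is simulated by a few 4 KB arrays (the `sim_*` names are invented for this example), and the permission, Accessed and Dirty checks of the real MMU are left out…

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PTE_V (1ULL << 0)  // Valid
#define PTE_R (1ULL << 1)  // Read
#define PTE_W (1ULL << 2)  // Write
#define PTE_X (1ULL << 3)  // Execute

#define LEVELS    3    // Sv39 has 3 levels of Page Tables
#define ENTRIES   512  // PAGESIZE / PTESIZE = 4,096 / 8
#define SIM_PAGES 4    // Size of our toy Physical Memory

static uint64_t sim_base[SIM_PAGES];           // Address of each page
static uint64_t sim_page[SIM_PAGES][ENTRIES];  // Content of each page
static int sim_count = 0;

// Register a simulated Page Table at Physical Address `paddr`
static uint64_t *sim_alloc(uint64_t paddr) {
  assert(sim_count < SIM_PAGES && (paddr & 0xFFF) == 0);
  sim_base[sim_count] = paddr;
  return sim_page[sim_count++];
}

// Find the simulated Page Table at `paddr`, or NULL if none
static uint64_t *sim_table(uint64_t paddr) {
  for (int i = 0; i < sim_count; i++) {
    if (sim_base[i] == paddr) { return sim_page[i]; }
  }
  return NULL;
}

// Translate `vaddr` by walking the Page Tables from `root`.
// Returns false when the real MMU would raise a Page Fault.
static bool sv39_translate(uint64_t root, uint64_t vaddr, uint64_t *paddr) {
  uint64_t table_addr = root;
  for (int i = LEVELS - 1; i >= 0; i--) {  // vpn[2], vpn[1], vpn[0]
    uint64_t *table = sim_table(table_addr);
    if (table == NULL) { return false; }
    uint64_t pte = table[(vaddr >> (12 + 9 * i)) & 0x1FF];
    // Invalid entry, or the reserved "Write without Read"
    if (!(pte & PTE_V) || (!(pte & PTE_R) && (pte & PTE_W))) { return false; }
    uint64_t next = ((pte >> 10) & ((1ULL << 44) - 1)) << 12;  // PPN * 4,096
    if (pte & (PTE_R | PTE_X)) {
      // Leaf entry: Low bits of vaddr become the offset in the chunk
      uint64_t mask = (1ULL << (12 + 9 * i)) - 1;
      if (next & mask) { return false; }  // Misaligned chunk
      *paddr = next | (vaddr & mask);
      return true;
    }
    table_addr = next;  // Pointer entry: Descend one level
  }
  return false;  // Pointer found at the last level
}
```

To try it, call `sim_alloc` for each Page Table, fill in the entries, then call `sv39_translate` with the address of the Level 1 Page Table.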

What about mapping a Physical Address back to Virtual Address?

Well that would require an Exhaustive Search of all Page Tables!

OK how about Virtual / Physical Address to Page Table Entry (PTE)?

Given a Virtual Address vaddr…

(Or a Physical Address, assuming Virtual = Physical like our Kernel)

Virtual Page Number: vpn

= vaddr >> 12

(4,096 bytes per Memory Page)

Level 1 PTE Index

= (vpn >> 18) & 0b1_1111_1111

(Extract Bits 18 to 26 to get Level 1 Index)

Level 2 PTE Index

= (vpn >> 9) & 0b1_1111_1111

(Extract Bits 9 to 17 to get Level 2 Index)

Level 3 PTE Index

= vpn & 0b1_1111_1111

(Extract Bits 0 to 8 to get Level 3 Index)

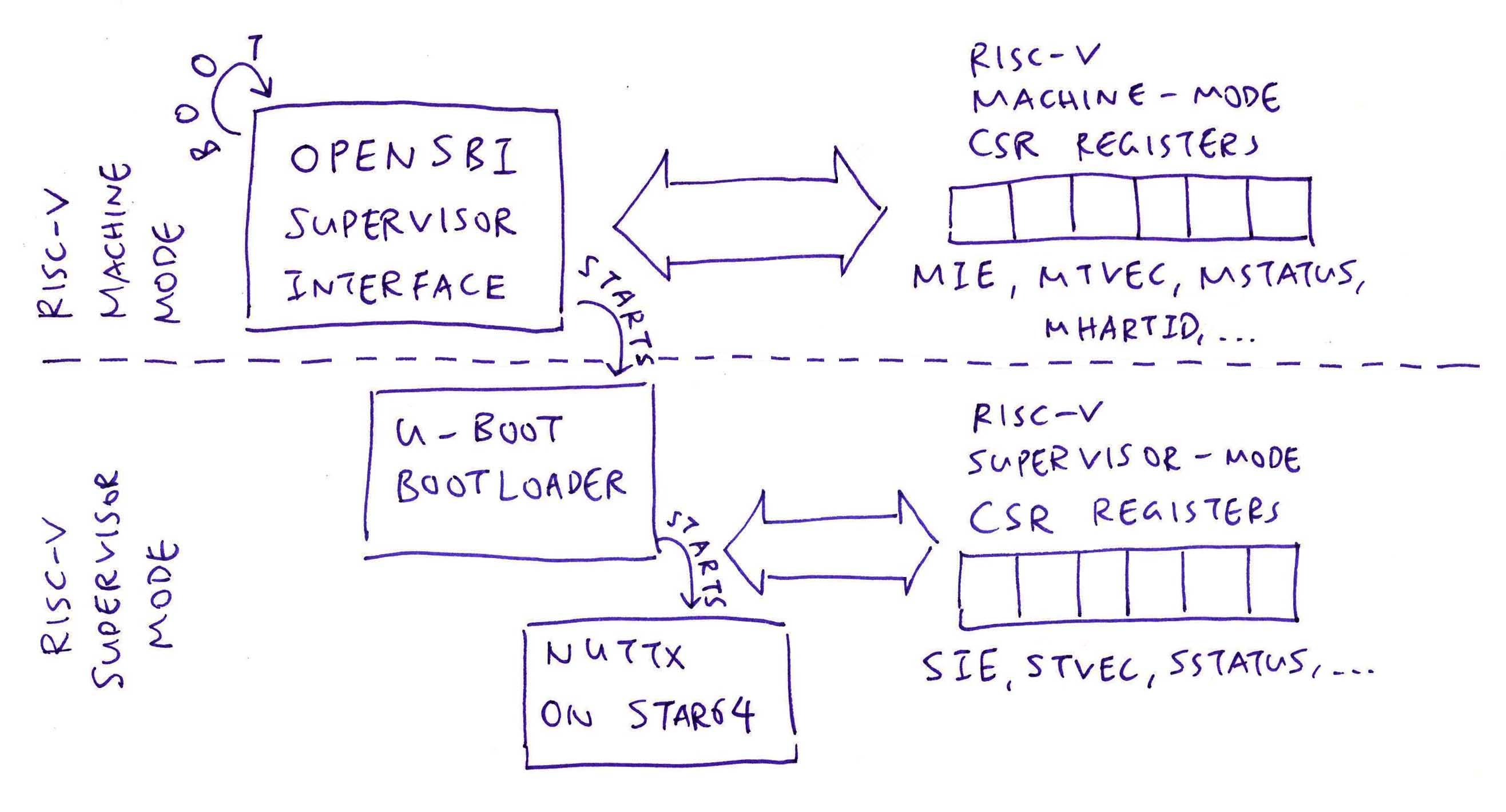

Isn’t there another kind of Memory Protection in RISC-V?

Yes RISC-V also supports Physical Memory Protection.

But it only works in RISC-V Machine Mode (pic above), the most powerful mode. (Like for OpenSBI)

NuttX and Linux run in RISC-V Supervisor Mode, which is less powerful. And won’t have access to this Physical Memory Protection.

That’s why NuttX and Linux use Sv39 MMU instead for Memory Protection.

(See the Ox64 settings for Physical Memory Protection)

In this article, we ran a Work-In-Progress Version of Apache NuttX RTOS for Ox64, with added MMU Logging.

(Console Input is not yet supported)

This is how we download and build NuttX for Ox64 BL808 SBC…

## Download the WIP NuttX Source Code

git clone \

--branch ox64a \

https://github.com/lupyuen2/wip-nuttx \

nuttx

git clone \

--branch ox64a \

https://github.com/lupyuen2/wip-nuttx-apps \

apps

## Build NuttX

cd nuttx

tools/configure.sh star64:nsh

make

## Export the NuttX Kernel

## to `nuttx.bin`

riscv64-unknown-elf-objcopy \

-O binary \

nuttx \

nuttx.bin

## Dump the disassembly to nuttx.S

riscv64-unknown-elf-objdump \

--syms --source --reloc --demangle --line-numbers --wide \

--debugging \

nuttx \

>nuttx.S \

2>&1

(Remember to install the Build Prerequisites and Toolchain)

(And enable Scheduler Info Output)

Then we build the Initial RAM Disk that contains NuttX Shell and NuttX Apps…

## Build the Apps Filesystem

make -j 8 export

pushd ../apps

./tools/mkimport.sh -z -x ../nuttx/nuttx-export-*.tar.gz

make -j 8 import

popd

## Generate the Initial RAM Disk `initrd`

## in ROMFS Filesystem Format

## from the Apps Filesystem `../apps/bin`

## and label it `NuttXBootVol`

genromfs \

-f initrd \

-d ../apps/bin \

-V "NuttXBootVol"

## Prepare a Padding with 64 KB of zeroes

head -c 65536 /dev/zero >/tmp/nuttx.pad

## Append Padding and Initial RAM Disk to NuttX Kernel

cat nuttx.bin /tmp/nuttx.pad initrd \

>Image

Next we prepare a Linux microSD for Ox64 as described in the previous article.

(Remember to flash OpenSBI and U-Boot Bootloader)

Then we do the Linux-To-NuttX Switcheroo: Overwrite the microSD Linux Image by the NuttX Kernel…

## Overwrite the Linux Image

## on Ox64 microSD

cp Image \

"/Volumes/NO NAME/Image"

diskutil unmountDisk /dev/disk2

Insert the microSD into Ox64 and power up Ox64.

Ox64 boots OpenSBI, which starts U-Boot Bootloader, which starts NuttX Kernel and the NuttX Shell (NSH). (Pic above)

What’s wrong with the Interrupt Controller?

Earlier we had difficulty configuring the Sv39 MMU for the Interrupt Controller at 0xE000_0000…

| Region | Start Address | Size |
|---|---|---|
| I/O Memory | 0x0000_0000 | 0x4000_0000 (1 GB) |
| RAM | 0x5020_0000 | 0x0180_0000 (24 MB) |
| Interrupt Controller | 0xE000_0000 | 0x1000_0000 (256 MB) |

Why not park the Interrupt Controller as a Level 1 Page Table Entry?

| Index | Permissions | Physical Page Number |
|---|---|---|
| 0 | VGRWSO+S | 0x00000 (I/O Memory) |
| 1 | VG (Pointer) | 0x50406 (L2 Kernel Code & Data) |
| 3 | VGRWSO+S | 0xC0000 (Interrupt Controller) |

Uh it’s super wasteful to reserve 1 GB of Address Space (Level 1 at 0xC000_0000) for our Interrupt Controller that requires only 256 MB.

But there’s another problem: Our User Memory was originally assigned to 0xC000_0000…

| Region | Start Address | Size |
|---|---|---|
| User Code | 0xC000_0000 | (Max 128 Pages) |
| User Data | 0xC010_0000 | (Max 128 Pages) |
| User Heap | 0xC020_0000 | (Max 128 Pages) |

(Each Page is 4 KB)

Which would collide with our Interrupt Controller!

OK so we move our User Memory elsewhere?

Yep that’s why we moved the User Memory from 0xC000_0000 to 0x8000_0000…

| Region | Start Address | Size |
|---|---|---|
| User Code | 0x8000_0000 | (Max 128 Pages) |
| User Data | 0x8010_0000 | (Max 128 Pages) |
| User Heap | 0x8020_0000 | (Max 128 Pages) |

(Each Page is 4 KB)

Which won’t conflict with our Interrupt Controller.

(Or maybe we should have moved the User Memory to another Exotic Address: 0x1_0000_0000)

But we said that Level 1 is too wasteful for Interrupt Controller?

Once again: It’s super wasteful to reserve 1 GB of Address Space (Level 1 at 0xC000_0000) for our Interrupt Controller that requires only 256 MB.

Also we hope MMU will stop the Kernel from meddling with the memory at 0xC000_0000. Because it’s not supposed to!

Move the Interrupt Controller to Level 2 then!

That’s why we wrote this article: To figure out how to move the Interrupt Controller to a Level 2 Page Table. (And connect Level 1 with Level 2)

And that’s how we arrived at this final MMU Mapping…

| Index | Permissions | Physical Page Number |
|---|---|---|
| 0 | VGRWSO+S | 0x00000 (I/O Memory) |
| 1 | VG (Pointer) | 0x50406 (L2 Kernel Code & Data) |
| 3 | VG (Pointer) | 0x50403 (L2 Interrupt Controller) |

That works hunky dory for Interrupt Controller and for User Memory!

Table full of… RISC-V Page Tables!